# Audio Embedding with CLMR

*Author: [Jael Gu](https://github.com/jaelgu)*

## Description

The audio embedding operator converts an input audio clip into a dense vector that represents the clip's semantics.

Each vector represents an audio clip with a fixed length of about 2.7s.

This operator is built on top of the original implementation of [CLMR](https://github.com/Spijkervet/CLMR).

The provided [default model weights](clmr_checkpoint_10000.pt) are pretrained on the [MagnaTagATune dataset](https://paperswithcode.com/dataset/magnatagatune) with [SampleCNN](sample_cnn.py).

## Code Example

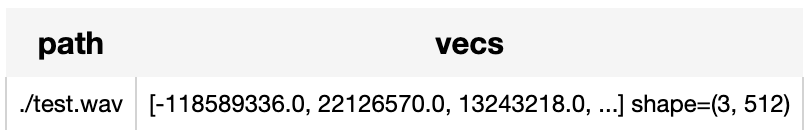

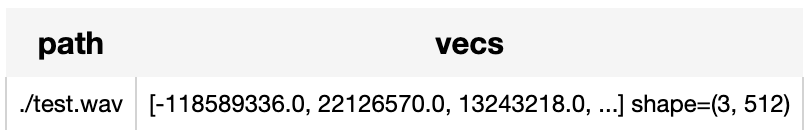

Generate embeddings for the audio "test.wav".

*Write a pipeline with explicit input and output names:*

```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('path')
        # Decode the audio file into audio frames.
        .map('path', 'frame', ops.audio_decode.ffmpeg())
        # Generate CLMR embeddings from the decoded frames.
        .map('frame', 'vecs', ops.audio_embedding.clmr())
        .output('path', 'vecs')
)

DataCollection(p('./test.wav')).show()
```

## Factory Constructor

Create the operator via the following factory method:

***audio_embedding.clmr(framework="pytorch")***

**Parameters:**

*framework: str*

The framework of the model implementation.

The default value is "pytorch" because the model is implemented in PyTorch.

## Interface

The audio embedding operator takes towhee audio frames and returns vectors as a numpy.ndarray.

**Parameters:**

*data: List[towhee.types.audio_frame.AudioFrame]*

The input audio data is a list of towhee audio frames.

The input data should represent an audio clip longer than 3s.

**Returns:**

*numpy.ndarray*

Audio embeddings of shape (num_clips, 512).

Each embedding represents the features of an audio clip 2.7s long.
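
Because the operator returns one embedding per clip, a common follow-up step is to combine them into a single vector per audio file before comparing files. The sketch below is one possible way to do that, not part of this operator: it mean-pools an array of shape (num_clips, 512) and compares two pooled vectors with cosine similarity. The helper names `pool_clip_embeddings` and `cosine_similarity` are hypothetical, and the random arrays are synthetic stand-ins for real operator output.

```python
import numpy as np


def pool_clip_embeddings(clip_vecs: np.ndarray) -> np.ndarray:
    """Average per-clip embeddings (num_clips, dim) into one L2-normalized vector."""
    clip_vecs = np.asarray(clip_vecs, dtype=np.float32)
    if clip_vecs.ndim != 2 or clip_vecs.shape[0] == 0:
        raise ValueError("expected a non-empty array of shape (num_clips, dim)")
    pooled = clip_vecs.mean(axis=0)
    norm = np.linalg.norm(pooled)
    # Leave an all-zero vector untouched to avoid dividing by zero.
    return pooled / norm if norm > 0 else pooled


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors; 0.0 if either is all zeros."""
    a = np.asarray(a, dtype=np.float32)
    b = np.asarray(b, dtype=np.float32)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(np.dot(a, b) / denom) if denom > 0 else 0.0


# Synthetic stand-ins for the operator output of two audio files
# (assumption: 4 and 6 clips, each with a 512-dimensional embedding).
rng = np.random.default_rng(0)
vecs_a = rng.normal(size=(4, 512)).astype(np.float32)
vecs_b = rng.normal(size=(6, 512)).astype(np.float32)

track_a = pool_clip_embeddings(vecs_a)
track_b = pool_clip_embeddings(vecs_b)

print(track_a.shape)
print(cosine_similarity(track_a, track_b))
```

Mean pooling is only one option. If the position of a match inside a file matters, keeping the per-clip vectors and searching them individually may be a better fit.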

## More Resources

- [How to Get the Right Vector Embeddings - Zilliz blog](https://zilliz.com/blog/how-to-get-the-right-vector-embeddings): A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- [Audio Retrieval Based on Milvus - Zilliz blog](https://zilliz.com/blog/audio-retrieval-based-on-milvus): Create an audio retrieval system using Milvus, an open-source vector database. Classify and analyze sound data in real time.

- [Vector Database Use Case: Audio Similarity Search - Zilliz](https://zilliz.com/vector-database-use-cases/audio-similarity-search): Building agile and reliable audio similarity search with Zilliz vector database (fully managed Milvus).

- [Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar](https://zilliz.com/event/sparse-and-dense-embeddings-webinar): Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- [An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog](https://zilliz.com/learn/everything-you-should-know-about-vector-embeddings): In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.