Audio Embedding with data2vec

author: David Wang

Description

This operator extracts features for audio with data2vec. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture.

Code Example

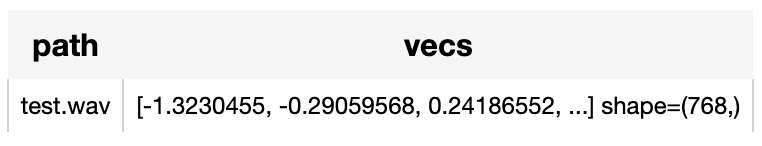

Generate embeddings for the audio "test.wav".

Write a pipeline with explicitly named inputs and outputs:

    from towhee.dc2 import pipe, ops, DataCollection

    p = (
        pipe.input('path')
            .map('path', 'frame', ops.audio_decode.ffmpeg(sample_rate=16000))
            .map('frame', 'vecs', ops.audio_embedding.data2vec(model_name='facebook/data2vec-audio-base-960h'))
            .output('path', 'vecs')
    )

    DataCollection(p('test.wav')).show()

Factory Constructor

Create the operator via the following factory method:

data2vec(model_name='facebook/data2vec-audio-base-960h')

Parameters:

model_name: str

The name of the model, given as a string. The default value is "facebook/data2vec-audio-base-960h".

Supported model names:

- facebook/data2vec-audio-base-960h

- facebook/data2vec-audio-large-960h

- facebook/data2vec-audio-base

- facebook/data2vec-audio-base-100h

- facebook/data2vec-audio-base-10m

- facebook/data2vec-audio-large

- facebook/data2vec-audio-large-100h

- facebook/data2vec-audio-large-10m
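Since model_name is a free-form string, it can help to check it against the list above before building a pipeline. The following is a minimal sketch, not part of the operator: SUPPORTED_MODELS and check_model_name are hypothetical names introduced here for illustration, and the tuple simply copies the names listed above.

```python
# Model names copied from the "Supported model names" list in this README.
SUPPORTED_MODELS = (
    'facebook/data2vec-audio-base-960h',
    'facebook/data2vec-audio-large-960h',
    'facebook/data2vec-audio-base',
    'facebook/data2vec-audio-base-100h',
    'facebook/data2vec-audio-base-10m',
    'facebook/data2vec-audio-large',
    'facebook/data2vec-audio-large-100h',
    'facebook/data2vec-audio-large-10m',
)


def check_model_name(model_name: str) -> str:
    """Hypothetical helper: return model_name if it is listed, else raise ValueError."""
    if model_name not in SUPPORTED_MODELS:
        raise ValueError(
            f'Unsupported model name: {model_name!r}. '
            f'Expected one of: {", ".join(SUPPORTED_MODELS)}'
        )
    return model_name
```

The returned string can then be passed as the model_name argument of the factory method.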

Interface

An audio embedding operator generates vectors as a numpy.ndarray, given an audio file path or Towhee audio frames.

Parameters:

data: List[towhee.types.audio_frame.AudioFrame]

The input audio data is a list of Towhee audio frames. Together, the frames should represent audio longer than 0.9 s.
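To make the length requirement concrete: at the 16000 Hz sample rate used in the code example, 0.9 s corresponds to 14400 samples per channel. The sketch below checks a clip's length before it is sent to the operator. The helper is_long_enough is a hypothetical name, not a Towhee API, and it assumes you already know the number of samples per channel; how the operator itself reacts to shorter input is not documented here.

```python
MIN_DURATION_S = 0.9  # minimum audio length stated in this README


def is_long_enough(num_samples: int, sample_rate: int = 16000) -> bool:
    """Hypothetical helper: True if the clip lasts strictly longer than 0.9 s.

    num_samples is the number of samples per channel; sample_rate is in Hz
    (16000 matches the decoder setting in the code example).
    """
    if sample_rate <= 0:
        raise ValueError('sample_rate must be positive')
    return num_samples / sample_rate > MIN_DURATION_S
```

For example, a one-second clip at 16000 Hz (16000 samples) passes the check, while a half-second clip (8000 samples) does not.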

Returns: numpy.ndarray

The audio embedding extracted by the model.

1.8 KiB

Audio Embedding with data2vec

author: David Wang

Description

This operator extracts features for audio with data2vec. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture.

Code Example

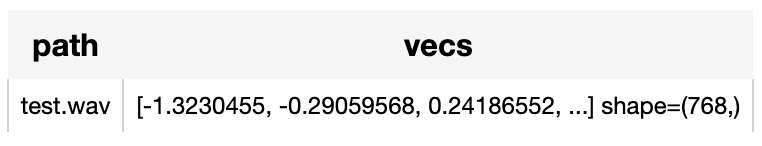

Generate embeddings for the audio "test.wav".

Write a pipeline with explicit inputs/outputs name specifications:

from towhee.dc2 import pipe, ops, DataCollection

p = (

pipe.input('path')

.map('path', 'frame', ops.audio_decode.ffmpeg(sample_rate=16000))

.map('frame', 'vecs', ops.audio_embedding.data2vec(model_name='facebook/data2vec-audio-base-960h'))

.output('path', 'vecs')

)

DataCollection(p('test.wav')).show()

Factory Constructor

Create the operator via the following factory method

data2vec(model_name='facebook/data2vec-audio-base')

Parameters:

model_name: str

The model name in string. The default value is "facebook/data2vec-audio-base-960h".

Supported model name:

- facebook/data2vec-audio-base-960h

- facebook/data2vec-audio-large-960h

- facebook/data2vec-audio-base

- facebook/data2vec-audio-base-100h

- facebook/data2vec-audio-base-10m

- facebook/data2vec-audio-large

- facebook/data2vec-audio-large-100h

- facebook/data2vec-audio-large-10m

Interface

An audio embedding operator generates vectors in numpy.ndarray given an audio file path or towhee audio frames.

Parameters:

data: List[towhee.types.audio_frame.AudioFrame]

Input audio data is a list of towhee audio frames. The input data should represent for an audio longer than 0.9s.

Returns: numpy.ndarray

The audio embedding extracted by model.