diff --git a/README.md b/README.md

index f72af4a..cfcbc6a 100644

--- a/README.md

+++ b/README.md

@@ -1,4 +1,4 @@

-# Image-Text Retrieval Embdding with BLIP

+# Image Captioning with BLIP

*author: David Wang*

@@ -9,7 +9,7 @@

## Description

-This operator extracts features for image or text with [BLIP](https://arxiv.org/abs/2201.12086) which can generate embeddings for text and image by jointly training an image encoder and text encoder to maximize the cosine similarity. This is a adaptation from [salesforce/BLIP](https://github.com/salesforce/BLIP).

+This operator generates a caption describing the content of a given image with [BLIP](https://arxiv.org/abs/2201.12086). This is an adaptation from [salesforce/BLIP](https://github.com/salesforce/BLIP).

@@ -17,45 +17,33 @@ This operator extracts features for image or text with [BLIP](https://arxiv.org/

## Code Example

-Load an image from path './teddy.jpg' to generate an image embedding.

-

-Read the text 'A teddybear on a skateboard in Times Square.' to generate an text embedding.

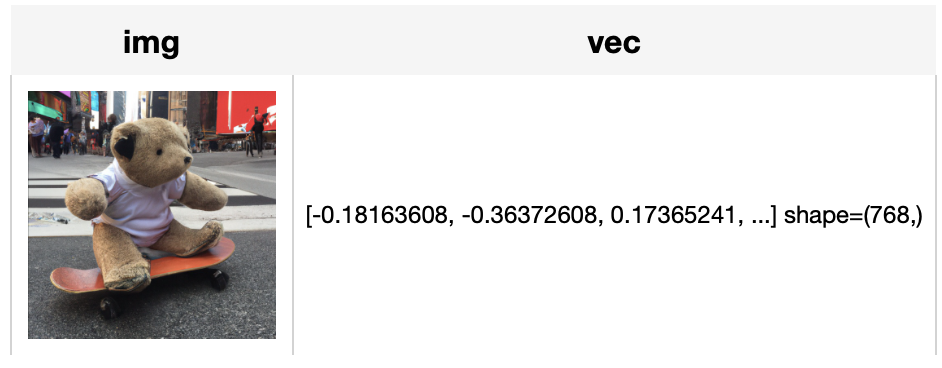

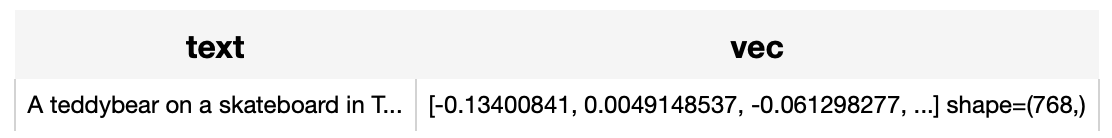

+Load an image from the path './animals.jpg' and generate its caption.

*Write the pipeline in simplified style*:

```python

import towhee

-towhee.glob('./teddy.jpg') \

+towhee.glob('./animals.jpg') \

.image_decode() \

- .image_text_embedding.blip(model_name='blip_base', modality='image') \

- .show()

-

-towhee.dc(["A teddybear on a skateboard in Times Square."]) \

- .image_text_embedding.blip(model_name='blip_base', modality='text') \

+ .image_captioning.blip(model_name='blip_base') \

+ .select() \

.show()

```

- -

- +

+*Write the same pipeline with explicit input/output name specifications:*

```python

import towhee

-towhee.glob['path']('./teddy.jpg') \

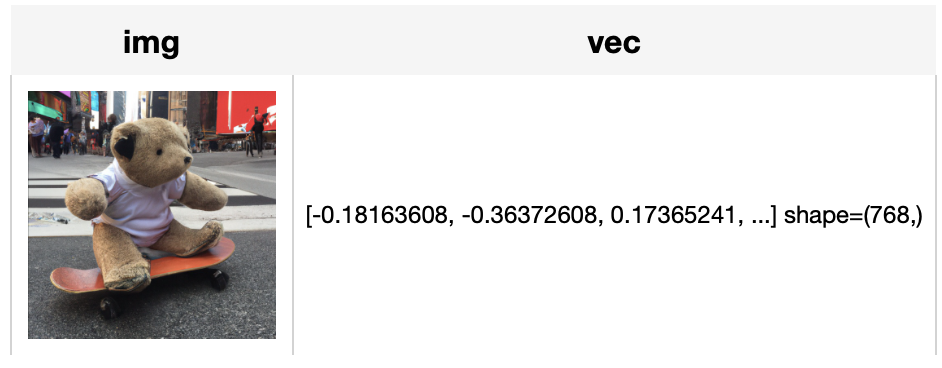

+towhee.glob['path']('./animals.jpg') \

.image_decode['path', 'img']() \

- .image_text_embedding.blip['img', 'vec'](model_name='blip_base', modality='image') \

- .select['img', 'vec']() \

- .show()

-

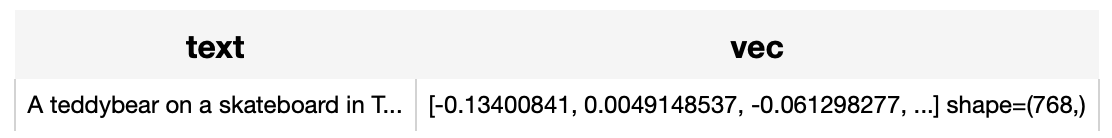

-towhee.dc['text'](["A teddybear on a skateboard in Times Square."]) \

- .image_text_embedding.blip['text','vec'](model_name='blip_base', modality='text') \

- .select['text', 'vec']() \

+ .image_captioning.blip['img', 'text'](model_name='blip_base') \

+ .select['img', 'text']() \

.show()

```


- -

- +

+
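The bracket notation in the explicit pipeline names the fields each stage reads and writes: `image_decode['path', 'img']` reads `path` and writes `img`, `image_captioning.blip['img', 'text']` reads `img` and writes `text`, and `select['img', 'text']` keeps only those two fields. The pure-Python sketch below models that dataflow with plain dicts. It is a hypothetical illustration of the naming convention, not towhee's implementation: `apply_named`, `select_fields`, `fake_decode`, and `fake_caption` are made-up stand-ins, and the "caption" is a placeholder string rather than BLIP output.

```python
from typing import Callable, Dict, List

Row = Dict[str, object]


def apply_named(rows: List[Row], func: Callable[[object], object],
                in_key: str, out_key: str) -> List[Row]:
    """Hypothetical model of `op[in_key, out_key]`: read one field, write the result to another."""
    return [{**row, out_key: func(row[in_key])} for row in rows]


def select_fields(rows: List[Row], *keys: str) -> List[Row]:
    """Hypothetical model of `select[...]`: keep only the named fields of each row."""
    return [{k: row[k] for k in keys} for row in rows]


# Stand-ins for image_decode and image_captioning.blip (no real decoding or model).
def fake_decode(path: str) -> str:
    return f"<pixels of {path}>"


def fake_caption(img: str) -> str:
    return "a caption for " + img


rows = [{"path": "./animals.jpg"}]
rows = apply_named(rows, fake_decode, "path", "img")    # image_decode['path', 'img']
rows = apply_named(rows, fake_caption, "img", "text")   # image_captioning.blip['img', 'text']
rows = select_fields(rows, "img", "text")               # select['img', 'text']
print(rows)
# [{'img': '<pixels of ./animals.jpg>', 'text': 'a caption for <pixels of ./animals.jpg>'}]
```

Because `select` drops `path`, the displayed table in the real pipeline would show only the image and its caption.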

@@ -66,7 +54,7 @@ towhee.dc['text'](["A teddybear on a skateboard in Times Square."]) \

Create the operator via the following factory method

-***blip(model_name, modality)***

+***blip(model_name)***

**Parameters:**

@@ -75,33 +63,23 @@ Create the operator via the following factory method

The model name of BLIP. Supported model names:

- blip_base

-

- ***modality:*** *str*

-

- Which modality(*image* or *text*) is used to generate the embedding.

-

## Interface

-An image-text embedding operator takes a [towhee image](link/to/towhee/image/api/doc) or string as input and generate an embedding in ndarray.

+An image captioning operator takes a [towhee image](link/to/towhee/image/api/doc) as input and generates the corresponding caption.

**Parameters:**

-***data:*** *towhee.types.Image (a sub-class of numpy.ndarray)* or *str*

+***data:*** *towhee.types.Image (a sub-class of numpy.ndarray)*

- The data (image or text based on specified modality) to generate embedding.

-

-

-

-**Returns:** *numpy.ndarray*

-

- The data embedding extracted by model.

-

+ The image to generate the caption for.

+**Returns:** *str*

+ The caption generated by model.
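With this change the operator's output type moves from `numpy.ndarray` to `str`, so downstream code that consumed the old `vec` field needs updating. The sketch below states the new contract as a `typing.Protocol` and checks the documented return type at the call site. `CaptionOperator`, `PlaceholderCaptioner`, and `caption_all` are hypothetical names for illustration only; the stand-in returns a fixed string instead of running BLIP, and images are modeled as nested lists rather than `towhee.types.Image`.

```python
from typing import List, Protocol, Sequence


class CaptionOperator(Protocol):
    """Hypothetical structural type for the documented interface: one image in, one caption out."""

    def __call__(self, data: Sequence) -> str: ...


class PlaceholderCaptioner:
    """Hypothetical stand-in; the real operator runs the BLIP model on the image."""

    def __call__(self, data: Sequence) -> str:
        return "a placeholder caption"


def caption_all(op: CaptionOperator, images: List[Sequence]) -> List[str]:
    """Caption every image and enforce the documented `str` return type."""
    captions = []
    for img in images:
        caption = op(img)
        # The old embedding operator returned an ndarray; fail fast if one slips through.
        if not isinstance(caption, str):
            raise TypeError(f"expected a str caption, got {type(caption).__name__}")
        captions.append(caption)
    return captions


print(caption_all(PlaceholderCaptioner(), [[[0, 0, 0]]]))
```

Checking the type at the boundary surfaces a leftover embedding-style consumer immediately instead of failing later on string operations.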

diff --git a/cap.png b/cap.png

new file mode 100644

index 0000000..0ed342d

Binary files /dev/null and b/cap.png differ

diff --git a/tabular.png b/tabular.png

new file mode 100644

index 0000000..b7c6c16

Binary files /dev/null and b/tabular.png differ

diff --git a/tabular1.png b/tabular1.png

deleted file mode 100644

index 8d1db62..0000000

Binary files a/tabular1.png and /dev/null differ

diff --git a/tabular2.png b/tabular2.png

deleted file mode 100644

index 26a46a6..0000000

Binary files a/tabular2.png and /dev/null differ

diff --git a/vec1.png b/vec1.png

deleted file mode 100644

index b0022d1..0000000

Binary files a/vec1.png and /dev/null differ

diff --git a/vec2.png b/vec2.png

deleted file mode 100644

index 1e2adc0..0000000

Binary files a/vec2.png and /dev/null differ
