# Image Captioning with CaMEL

*author: David Wang*

## Description

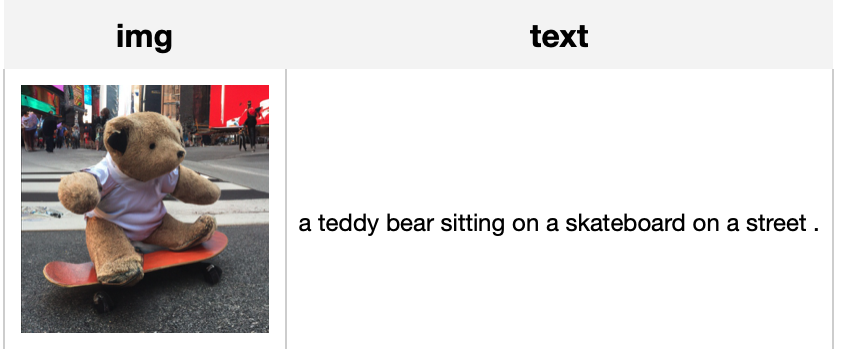

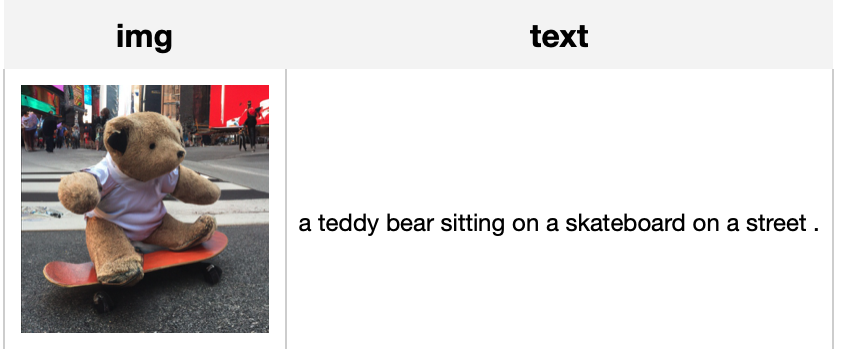

This operator uses [CaMEL](https://arxiv.org/abs/2202.10492) to generate a caption describing the content of a given image. CaMEL is a novel Transformer-based image captioning architecture built on two interconnected language models that learn from each other during training. The interplay between the two language models follows a mean teacher learning paradigm with knowledge distillation. This operator is adapted from [aimagelab/camel](https://github.com/aimagelab/camel).
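The mean teacher scheme described above can be illustrated with a small NumPy sketch. This is a simplified illustration under stated assumptions, not code from aimagelab/camel. It assumes the teacher (offline) parameters are kept as an exponential moving average (EMA) of the student (online) parameters, and that the student receives an extra distillation term, assumed here to be a KL divergence between the two models' word distributions. The paper's exact loss may differ. The function names `ema_update`, `log_softmax` and `distillation_kl`, the dict-of-arrays parameter layout, and the decay values are all hypothetical.

```python
import numpy as np

def ema_update(teacher, student, decay=0.999):
    """Mean-teacher step: move each teacher parameter toward the student.

    teacher/student: dicts mapping parameter names to NumPy arrays
    (a hypothetical layout chosen for illustration).
    Returns a new dict; the inputs are not modified.
    """
    return {name: decay * teacher[name] + (1.0 - decay) * student[name]
            for name in teacher}

def log_softmax(logits):
    # Subtract the row max before exponentiating for numerical stability.
    z = logits - logits.max(axis=-1, keepdims=True)
    return z - np.log(np.exp(z).sum(axis=-1, keepdims=True))

def distillation_kl(student_logits, teacher_logits):
    """Mean KL(teacher || student) over positions (an assumed distillation loss)."""
    log_p_t = log_softmax(teacher_logits)
    log_p_s = log_softmax(student_logits)
    p_t = np.exp(log_p_t)
    return float(np.mean(np.sum(p_t * (log_p_t - log_p_s), axis=-1)))

# Usage: one toy parameter tensor and fake next-word logits.
teacher = {"w": np.zeros(3)}
student = {"w": np.ones(3)}
teacher = ema_update(teacher, student, decay=0.9)  # each entry is now about 0.1
loss = distillation_kl(np.array([[0.0, 0.0]]), np.array([[5.0, 0.0]]))
print(teacher["w"], loss)  # loss is positive because the distributions differ
```

A high decay keeps the teacher slow-moving, so it acts as a temporally averaged, more stable version of the student that the student can be distilled toward.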

## Code Example

Load an image from the path './image.jpg' and generate its caption.

*Write the pipeline in simplified style*:

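```python
import towhee

# Each trailing backslash continues the statement onto the next line,
# so no blank lines may appear between the chained calls.
towhee.glob('./image.jpg') \
    .image_decode() \
    .image_captioning.camel(model_name='camel_mesh') \
    .show()
```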

*Write the same pipeline with explicit input/output name specifications:*

```python
import towhee

# 'path' holds the file path, 'img' the decoded image, 'text' the caption.
towhee.glob['path']('./image.jpg') \
    .image_decode['path', 'img']() \
    .image_captioning.camel['img', 'text'](model_name='camel_mesh') \
    .select['img', 'text']() \
    .show()
```
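To make the `['input', 'output']` notation concrete, here is a toy, pure-Python model of how named fields flow through the pipeline above. It is not Towhee's implementation: each record is a plain dict, and `apply_op`, `select`, `decode_stub` and `caption_stub` are hypothetical stand-ins. The assumed semantics are that an operator written as `op['a', 'b']` reads field `a` and stores its result in field `b`, and that `select` keeps only the listed fields.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]

def apply_op(records: List[Record], in_field: str, out_field: str,
             fn: Callable[[object], object]) -> List[Record]:
    """Mimic op[in_field, out_field]: read one field, write the result to another."""
    return [{**r, out_field: fn(r[in_field])} for r in records]

def select(records: List[Record], *fields: str) -> List[Record]:
    """Mimic .select[...](): keep only the named fields."""
    return [{f: r[f] for f in fields} for r in records]

# Hypothetical stand-ins for the real image decoder and captioning model.
def decode_stub(path: object) -> object:
    return f"<pixels of {path}>"

def caption_stub(img: object) -> object:
    return f"a caption for {img}"

records = [{"path": "./image.jpg"}]                       # glob['path']
records = apply_op(records, "path", "img", decode_stub)   # image_decode['path', 'img']
records = apply_op(records, "img", "text", caption_stub)  # camel['img', 'text']
records = select(records, "img", "text")                  # select['img', 'text']
print(records)
```

Naming the fields this way lets a later step refer to any earlier result (here both the decoded image and its caption) instead of only the previous operator's output.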

## Factory Constructor

Create the operator via the following factory method:

***camel(model_name)***

**Parameters:**

***model_name:*** *str*

The model name of CaMEL. Supported model names:

- camel_mesh

## Interface

An image captioning operator takes a [towhee image](link/to/towhee/image/api/doc) as input and generates the corresponding caption.

**Parameters:**

***data:*** *towhee.types.Image (a sub-class of numpy.ndarray)*

The image to generate a caption for.

**Returns:** *str*

The caption generated by the model.
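Because the input type is described as a sub-class of `numpy.ndarray`, anything that works on a NumPy array also works on it. The sketch below defines a hypothetical `ImageLike` class (an illustration, not `towhee.types.Image` itself) that attaches an assumed color-mode attribute such as `'RGB'` to an array, following NumPy's documented subclassing pattern with `__new__` and `__array_finalize__`.

```python
import numpy as np

class ImageLike(np.ndarray):
    """Hypothetical ndarray subclass carrying a color-mode tag (e.g. 'RGB')."""

    def __new__(cls, data, mode=None):
        # View the input data as this subclass instead of copying it.
        obj = np.asarray(data).view(cls)
        obj.mode = mode
        return obj

    def __array_finalize__(self, obj):
        # Called for views and slices; copy the tag from the source array.
        if obj is None:
            return
        self.mode = getattr(obj, "mode", None)

# A blank 4x4 RGB image: still a NumPy array, plus the extra attribute.
img = ImageLike(np.zeros((4, 4, 3), dtype=np.uint8), mode="RGB")
crop = img[:2, :2]  # slicing keeps the subclass and its mode
print(isinstance(img, np.ndarray), img.shape, img.mode, crop.mode)
```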
