Image Embedding with data2vec

author: David Wang

Description

This operator extracts image features with data2vec. The core idea of data2vec is to predict latent representations of the full input from a masked view of that input, in a self-distillation setup built on a standard Transformer architecture.
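The self-distillation idea can be illustrated with a toy sketch. In data2vec, the teacher's weights follow an exponential moving average of the student's weights, and the student regresses the teacher's representations at the masked positions. The function names, the decay value `tau`, the scalar "representations", and the plain squared-error loss below are illustrative assumptions, not the operator's code or the original training recipe.

```python
def ema_update(teacher, student, tau=0.999):
    """Move each teacher weight toward the matching student weight (EMA)."""
    return [tau * t + (1.0 - tau) * s for t, s in zip(teacher, student)]


def masked_regression_loss(student_pred, teacher_target, mask):
    """Mean squared error over masked positions only.

    mask[i] is True where the student's input was hidden; the teacher
    computed its targets from the full, unmasked input.
    """
    errors = [
        (p - t) ** 2
        for p, t, m in zip(student_pred, teacher_target, mask)
        if m
    ]
    return sum(errors) / len(errors) if errors else 0.0


# Toy values: one scalar "representation" per image patch (assumption).
teacher_weights = ema_update([0.0, 1.0], [1.0, 1.0], tau=0.9)

mask = [True, False, True]
loss = masked_regression_loss([0.5, 0.2, 1.0], [1.0, 0.9, 1.0], mask)
print(teacher_weights, loss)
```

Only the masked positions contribute to the loss, which is what pushes the student to infer hidden content from the visible context.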

Code Example

Load an image from the path './towhee.jpeg' and generate an image embedding.

Write a pipeline that names its inputs and outputs explicitly:

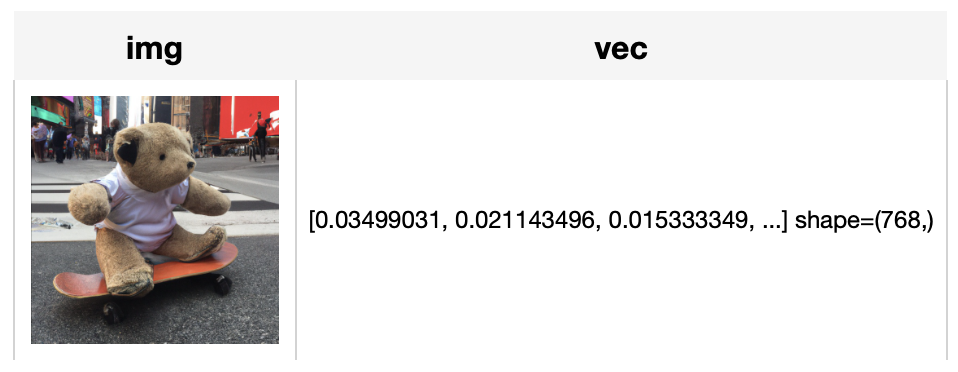

from towhee import pipe, ops, DataCollection

p = (
    pipe.input('path')
        .map('path', 'img', ops.image_decode())
        .map('img', 'vec', ops.image_embedding.data2vec(model_name='facebook/data2vec-vision-base-ft1k'))
        .output('img', 'vec')
)

DataCollection(p('towhee.jpeg')).show()

Factory Constructor

Create the operator via the following factory method:

data2vec(model_name='facebook/data2vec-vision-base-ft1k')

Parameters:

model_name: str

The name of the model, as a string. The default value is "facebook/data2vec-vision-base-ft1k".

Supported model names:

- facebook/data2vec-vision-base-ft1k

- facebook/data2vec-vision-large-ft1k
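Because only the two names above are listed as supported, it can help to validate a name before building the pipeline. The following is a minimal sketch; `SUPPORTED_MODELS` and `check_model_name` are hypothetical helpers written for this example and are not part of Towhee.

```python
# Names copied from the supported-model list above.
SUPPORTED_MODELS = (
    'facebook/data2vec-vision-base-ft1k',
    'facebook/data2vec-vision-large-ft1k',
)


def check_model_name(model_name):
    """Return model_name unchanged if it is supported, else raise ValueError."""
    if model_name not in SUPPORTED_MODELS:
        raise ValueError(
            f'Unsupported model name {model_name!r}; '
            f'expected one of {SUPPORTED_MODELS}'
        )
    return model_name


# Hypothetical usage: pass the checked name to the factory method, e.g.
# ops.image_embedding.data2vec(model_name=check_model_name(name))
name = check_model_name('facebook/data2vec-vision-large-ft1k')
```

Failing early with a clear message avoids waiting on a model download that cannot succeed.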

Interface

An image embedding operator takes a Towhee image as input. It uses the pre-trained model specified by model_name to generate an image embedding as a numpy.ndarray.

Parameters:

img: towhee.types.Image (a sub-class of numpy.ndarray)

The decoded image data, as a towhee.types.Image (numpy.ndarray).

Returns: numpy.ndarray

The image embedding extracted by the model.
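A common next step with the returned embeddings is to compare two of them. The sketch below computes cosine similarity using only the standard library, so it accepts any 1-D sequence of numbers, including a 1-D numpy.ndarray. The helper name `cosine_similarity` and the toy vectors are assumptions for illustration; real embeddings from this operator are much longer.

```python
import math


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length 1-D vectors."""
    if len(u) != len(v):
        raise ValueError('vectors must have the same length')
    dot = sum(float(a) * float(b) for a, b in zip(u, v))
    norm_u = math.sqrt(sum(float(a) ** 2 for a in u))
    norm_v = math.sqrt(sum(float(b) ** 2 for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError('cosine similarity is undefined for a zero vector')
    return dot / (norm_u * norm_v)


# Toy stand-ins for two embeddings (assumption).
same_direction = cosine_similarity([1.0, 2.0, 3.0], [2.0, 4.0, 6.0])
orthogonal = cosine_similarity([1.0, 0.0], [0.0, 1.0])
print(same_direction, orthogonal)
```

Values close to 1 indicate embeddings that point in nearly the same direction, and values near 0 indicate unrelated directions.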

More Resources

- What is a Transformer Model? An Engineer's Guide: A transformer model is a neural network architecture. It's proficient in converting a particular type of input into a distinct output. Its core strength lies in its ability to handle inputs and outputs of different sequence length. It does this through encoding the input into a matrix with predefined dimensions and then combining that with another attention matrix to decode. This transformation unfolds through a sequence of collaborative layers, which deconstruct words into their corresponding numerical representations. At its heart, a transformer model is a bridge between disparate linguistic structures, employing sophisticated neural network configurations to decode and manipulate human language input. An example of a transformer model is GPT-3, which ingests human language and generates text output.

- How to Get the Right Vector Embeddings - Zilliz blog: A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- Transforming Text: The Rise of Sentence Transformers in NLP - Zilliz blog: Everything you need to know about the Transformers model, exploring its architecture, implementation, and limitations.

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- What is Detection Transformers (DETR)? - Zilliz blog: DETR (DEtection TRansformer) is a deep learning model for end-to-end object detection using transformers.

- Image Embeddings for Enhanced Image Search - Zilliz blog: Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- Enhancing Information Retrieval with Sparse Embeddings - Zilliz blog: Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database.

- An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog: In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.

4.4 KiB

Image Embedding with data2vec

author: David Wang

Description

This operator extracts features for image with data2vec. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture.

Code Example

Load an image from path './towhee.jpg' to generate an image embedding.

Write a pipeline with explicit inputs/outputs name specifications:

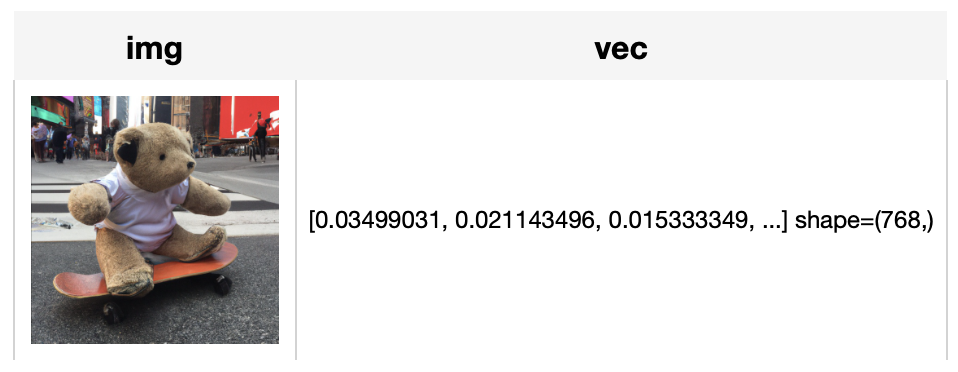

from towhee import pipe, ops, DataCollection

p = (

pipe.input('path')

.map('path', 'img', ops.image_decode())

.map('img', 'vec', ops.image_embedding.data2vec(model_name='facebook/data2vec-vision-base-ft1k'))

.output('img', 'vec')

)

DataCollection(p('towhee.jpeg')).show()

Factory Constructor

Create the operator via the following factory method

data2vec(model_name='facebook/data2vec-vision-base')

Parameters:

model_name: str

The model name in string. The default value is "facebook/data2vec-vision-base-ft1k".

Supported model name:

- facebook/data2vec-vision-base-ft1k

- facebook/data2vec-vision-large-ft1k

Interface

An image embedding operator takes a towhee image as input. It uses the pre-trained model specified by model name to generate an image embedding in ndarray.

Parameters:

img: towhee.types.Image (a sub-class of numpy.ndarray)

The decoded image data in towhee.types.Image (numpy.ndarray).

Returns: numpy.ndarray

The image embedding extracted by model.

More Resources+

+- What is a Transformer Model? An Engineer's Guide: A transformer model is a neural network architecture. It's proficient in converting a particular type of input into a distinct output. Its core strength lies in its ability to handle inputs and outputs of different sequence length. It does this through encoding the input into a matrix with predefined dimensions and then combining that with another attention matrix to decode. This transformation unfolds through a sequence of collaborative layers, which deconstruct words into their corresponding numerical representations. At its heart, a transformer model is a bridge between disparate linguistic structures, employing sophisticated neural network configurations to decode and manipulate human language input. An example of a transformer model is GPT-3, which ingests human language and generates text output.

- How to Get the Right Vector Embeddings - Zilliz blog: A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- Transforming Text: The Rise of Sentence Transformers in NLP - Zilliz blog: Everything you need to know about the Transformers model, exploring its architecture, implementation, and limitations

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- What is Detection Transformers (DETR)? - Zilliz blog: DETR (DEtection TRansformer) is a deep learning model for end-to-end object detection using transformers.

- Image Embeddings for Enhanced Image Search - Zilliz blog: Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- Enhancing Information Retrieval with Sparse Embeddings | Zilliz Learn - Zilliz blog: Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database

- An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog: In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.