# Image Embedding with ISC

*author: Jael Gu*

## Description

An image embedding operator generates a vector given an image.

This operator extracts image features using top-ranked models from the [Image Similarity Challenge 2021](https://github.com/facebookresearch/isc2021) Descriptor Track.

The default pretrained model weights are from [The 1st Place Solution of ISC21 (Descriptor Track)](https://github.com/lyakaap/ISC21-Descriptor-Track-1st).

## Code Example

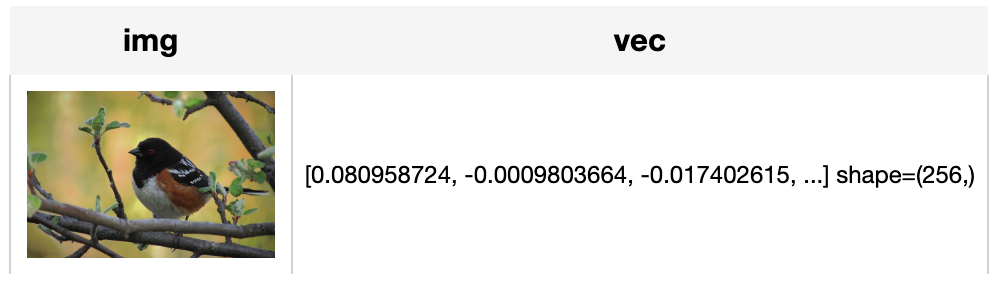

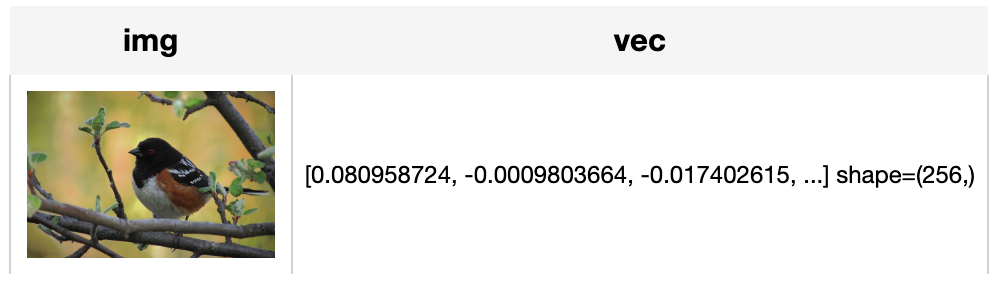

Load an image from the path './towhee.jpg' and use the pretrained ISC model to generate an image embedding.

*Write a pipeline with explicit input and output names:*

```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('path')
        # Decode the image file at 'path' into image data.
        .map('path', 'img', ops.image_decode())
        # Generate an embedding vector from the decoded image.
        .map('img', 'vec', ops.image_embedding.isc())
        .output('img', 'vec')
)

DataCollection(p('towhee.jpg')).show()
```
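Embeddings such as the `vec` output above are usually compared with a similarity measure. The following is a minimal sketch, assuming two embedding vectors have already been extracted; the helper name `cosine_similarity` and the toy vectors are hypothetical and not part of Towhee. It uses only the standard library and accepts any sequence of floats, including a 1-D `numpy.ndarray`.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (hypothetical helper)."""
    if len(a) != len(b):
        raise ValueError('vectors must have the same length')
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError('cosine similarity is undefined for zero vectors')
    return dot / (norm_a * norm_b)

# Toy vectors standing in for real embeddings (assumption for illustration).
vec_a = [0.6, 0.8, 0.0]
vec_b = [0.6, 0.8, 0.0]
vec_c = [0.0, 0.0, 1.0]

print(cosine_similarity(vec_a, vec_b))  # identical direction
print(cosine_similarity(vec_a, vec_c))  # orthogonal direction
```

Values close to 1 suggest near-duplicate images, while values near 0 suggest unrelated ones; the threshold to use depends on your data.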

## Factory Constructor

Create the operator via the following factory method:

***image_embedding.isc(skip_preprocess=False, device=None)***

**Parameters:**

***skip_preprocess:*** *bool*

Flag that controls whether to skip image preprocessing.

The default value is False.

If set to True, it will skip image preprocessing steps (transforms).

In this case, input image data must be prepared in advance in order to properly fit the model.

***device:*** *str*

The device on which to run this operator. Defaults to None.

When it is None, 'cuda' will be used if it is available, otherwise 'cpu' is used.
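The fallback rule described above can be sketched as follows. This is an illustration under assumptions, not the operator's actual source: the function name `resolve_device` is hypothetical, and the sketch falls back to 'cpu' when PyTorch is not installed so that it runs anywhere.

```python
def resolve_device(device=None):
    """Return the given device, or pick 'cuda' when available, else 'cpu'.

    Hypothetical helper that mirrors the documented default behaviour.
    """
    if device is not None:
        return device
    try:
        import torch
    except ImportError:
        # Assumption for this sketch: without PyTorch, only the CPU is usable.
        return 'cpu'
    return 'cuda' if torch.cuda.is_available() else 'cpu'

print(resolve_device())       # 'cuda' or 'cpu', depending on the machine
print(resolve_device('cpu'))  # an explicit choice is returned unchanged
```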

## Interface

An image embedding operator takes a towhee image as input.

It uses the pretrained ISC model to generate an image embedding as a numpy.ndarray.

**Parameters:**

***img:*** *towhee.types.Image (a sub-class of numpy.ndarray)*

The decoded image data in numpy.ndarray.

**Returns:** *numpy.ndarray*

The image embedding extracted by the model.

***save_model(format='pytorch', path='default')***

Save the model to a local file in the specified format.

**Parameters:**

***format***: *str*

The format of the saved model. Defaults to 'pytorch'.

***path***: *str*

The path where the model is saved. By default, the model is saved to the operator directory.

```python
from towhee import ops

op = ops.image_embedding.isc(device='cpu').get_op()
# Export the model in ONNX format to test.onnx.
op.save_model('onnx', 'test.onnx')
```

## Fine-tune

### Requirements

To fine-tune this operator, make sure your timm version is 0.4.12; higher versions may cause model collapse during training.

```python
! python -m pip install tqdm augly timm==0.4.12 pytorch-metric-learning==0.9.99
```

### Download dataset

ISC is trained using [contrastive learning](https://lilianweng.github.io/posts/2021-05-31-contrastive/), which is a type of self-supervised training. The training images do not require any labels. We only need to prepare a folder `./training_images`, under which a large number of diverse training images can be stored.
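To illustrate why no labels are needed, here is a minimal sketch of a generic InfoNCE-style contrastive loss for a single anchor. It is chosen for illustration only and is not taken from the ISC training script, whose exact loss may differ. The function names, the temperature value, and the toy 2-D vectors are all assumptions; the "positive" is simply an augmented view of the same image, and the "negatives" are other images.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))

def info_nce_loss(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor; lower means the positive ranks higher."""
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, neg) / temperature for neg in negatives]
    # Numerically stable log-sum-exp over all candidates.
    peak = max(logits)
    log_sum_exp = peak + math.log(sum(math.exp(l - peak) for l in logits))
    # Negative log-probability of picking the positive among all candidates.
    return log_sum_exp - logits[0]

# Toy 2-D "embeddings": the anchor and its augmented view point the same way.
anchor = [1.0, 0.0]
augmented_view = [0.9, 0.1]
other_images = [[0.0, 1.0], [-1.0, 0.2]]

good = info_nce_loss(anchor, augmented_view, other_images)
bad = info_nce_loss(anchor, other_images[0], [augmented_view, other_images[1]])
print(good, bad)
```

The loss is small when the augmented view is the closest candidate to the anchor and large when an unrelated image is treated as the positive, which is the signal that pulls views of the same image together during training.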

The original training of [ISC21-Descriptor-Track-1st](https://github.com/lyakaap/ISC21-Descriptor-Track-1st) uses a very large dataset that takes up more than 165 GB of disk space, together with a [multi-step training strategy](https://arxiv.org/abs/2104.00298).

To keep this fine-tuning example simple, we provide a small dataset to run it on; you can replace it with your own custom dataset.

```python
! curl -L https://github.com/towhee-io/examples/releases/download/data/isc_training_image_examples.zip -o ./training_images.zip
! unzip -q -o ./training_images.zip
```
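Before training, it can help to confirm that the folder actually contains images. The following is a minimal sketch using only the standard library; the helper name `list_training_images`, the set of accepted extensions, and the recursive search are assumptions for illustration and may not match how the training script reads files.

```python
from pathlib import Path

# Assumed set of image extensions; adjust to match your data.
IMAGE_EXTENSIONS = {'.jpg', '.jpeg', '.png', '.bmp', '.webp'}

def list_training_images(root):
    """Return a sorted list of image files found under root (hypothetical helper)."""
    root = Path(root)
    if not root.is_dir():
        return []
    return sorted(
        p for p in root.rglob('*')
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )

images = list_training_images('./training_images')
print(f'found {len(images)} training images')
```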

### Get started with fine-tuning

Call the `op.train()` method and pass in your arguments.

```python
import towhee

op = towhee.ops.image_embedding.isc().get_op()

# Fine-tune on the images in ./training_images using a single GPU.
op.train(training_args={
    'train_data_dir': './training_images',
    'distributed': False,
    'output_dir': './output',
    'gpu': 0,
    'epochs': 2,
    'batch_size': 8,
    'init_lr': 0.1
})
```

The arguments available in `training_args` are:

- output_dir
  - default: './output'
  - metadata_dict: {'help': 'output checkpoint saving dir.'}
- distributed
  - default: False
  - metadata_dict: {'help': 'If true, use all gpu in your machine, else use only one gpu.'}
- gpu
  - default: 0
  - metadata_dict: {'help': 'When distributed is False, use this gpu No. in your machine.'}
- start_epoch
  - default: 0
  - metadata_dict: {'help': 'Start epoch number.'}
- epochs
  - default: 6
  - metadata_dict: {'help': 'End epoch number.'}
- batch_size
  - default: 128
  - metadata_dict: {'help': 'Total batch size in all gpu.'}
- init_lr
  - default: 0.1
  - metadata_dict: {'help': 'init learning rate in SGD.'}
- train_data_dir
  - default: None
  - metadata_dict: {'help': 'The dir containing all training data image files.'}
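The `default` and `metadata_dict` entries above resemble dataclass fields with `help` metadata. The sketch below mirrors the listed defaults in a hypothetical `TrainingArguments` dataclass to show how a partial `training_args` dictionary could be merged with the defaults. The class and the `build_training_args` helper are assumptions for illustration, not the operator's actual implementation.

```python
from dataclasses import asdict, dataclass, field, fields
from typing import Optional

@dataclass
class TrainingArguments:  # hypothetical name, mirrors the list above
    output_dir: str = field(default='./output', metadata={'help': 'output checkpoint saving dir.'})
    distributed: bool = field(default=False, metadata={'help': 'If true, use all gpu in your machine, else use only one gpu.'})
    gpu: int = field(default=0, metadata={'help': 'When distributed is False, use this gpu No. in your machine.'})
    start_epoch: int = field(default=0, metadata={'help': 'Start epoch number.'})
    epochs: int = field(default=6, metadata={'help': 'End epoch number.'})
    batch_size: int = field(default=128, metadata={'help': 'Total batch size in all gpu.'})
    init_lr: float = field(default=0.1, metadata={'help': 'init learning rate in SGD.'})
    train_data_dir: Optional[str] = field(default=None, metadata={'help': 'The dir containing all training data image files.'})

def build_training_args(overrides):
    """Merge user overrides with the defaults; unknown keys raise TypeError."""
    return TrainingArguments(**overrides)

args = build_training_args({'train_data_dir': './training_images', 'epochs': 2, 'batch_size': 8})
print(asdict(args))

# Print the help text attached to each field.
for f in fields(TrainingArguments):
    print(f'{f.name}: {f.metadata["help"]}')
```

Any argument not passed explicitly keeps its listed default, so only the values you want to change need to appear in `training_args`.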

### Load trained model

```python
import towhee

new_op = towhee.ops.image_embedding.isc(checkpoint_path='./output/checkpoint_epoch0001.pth.tar').get_op()
```
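If several checkpoints accumulate in the output directory, a small helper can pick the most recent one to pass as `checkpoint_path`. This is a minimal sketch that assumes every checkpoint follows the `checkpoint_epochNNNN.pth.tar` naming seen in the example above; the helper name `latest_checkpoint` is hypothetical, and the naming pattern is inferred from that single file name.

```python
import re
from pathlib import Path

# Naming pattern inferred from 'checkpoint_epoch0001.pth.tar' (assumption).
CHECKPOINT_PATTERN = re.compile(r'^checkpoint_epoch(\d+)\.pth\.tar$')

def latest_checkpoint(output_dir):
    """Return the checkpoint Path with the highest epoch number, or None."""
    output_dir = Path(output_dir)
    if not output_dir.is_dir():
        return None
    best = None  # (epoch, path) of the newest checkpoint seen so far
    for path in output_dir.iterdir():
        match = CHECKPOINT_PATTERN.match(path.name)
        if match is None:
            continue
        epoch = int(match.group(1))
        if best is None or epoch > best[0]:
            best = (epoch, path)
    return None if best is None else best[1]

print(latest_checkpoint('./output'))
```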

### Your custom training

You can modify the [training script](https://towhee.io/image-embedding/isc/src/branch/main/train_isc.py) to suit your own needs.

You can also refer to the [original repo](https://github.com/lyakaap/ISC21-Descriptor-Track-1st) and [paper](https://arxiv.org/abs/2112.04323) to learn more about contrastive learning and image instance retrieval.

## More Resources

- [Powering Semantic Search in Computer Vision with Embeddings - Zilliz blog](https://zilliz.com/learn/embedding-generation): Discover how to extract useful information from unstructured data sources in a scalable manner using embeddings.

- [How to Get the Right Vector Embeddings - Zilliz blog](https://zilliz.com/blog/how-to-get-the-right-vector-embeddings): A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- [What Are Vector Embeddings?](https://zilliz.com/glossary/vector-embeddings): Learn the definition of vector embeddings, how to create vector embeddings, and more.

- [Image Embeddings for Enhanced Image Search - Zilliz blog](https://zilliz.com/learn/image-embeddings-for-enhanced-image-search): Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- [Enhancing Information Retrieval with Sparse Embeddings | Zilliz Learn - Zilliz blog](https://zilliz.com/learn/enhancing-information-retrieval-learned-sparse-embeddings): Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database.

- [An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog](https://zilliz.com/learn/everything-you-should-know-about-vector-embeddings): In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.