# Image Embedding with MPViT

*author: Chen Zhang*

## Description

This operator extracts features for images with [Multi-Path Vision Transformer (MPViT)](https://arxiv.org/abs/2112.11010) which can generate embeddings for images. MPViT embeds features of the same size~(i.e., sequence length) with patches of different scales simultaneously by using overlapping convolutional patch embedding. Tokens of different scales are then independently fed into the Transformer encoders via multiple paths and the resulting features are aggregated, enabling both fine and coarse feature representations at the same feature level.

## Code Example

Load an image from path 'towhee.jpeg'

and use the pre-trained 'mpvit_base' model to generate an image embedding.

*Write a same pipeline with explicit inputs/outputs name specifications:*

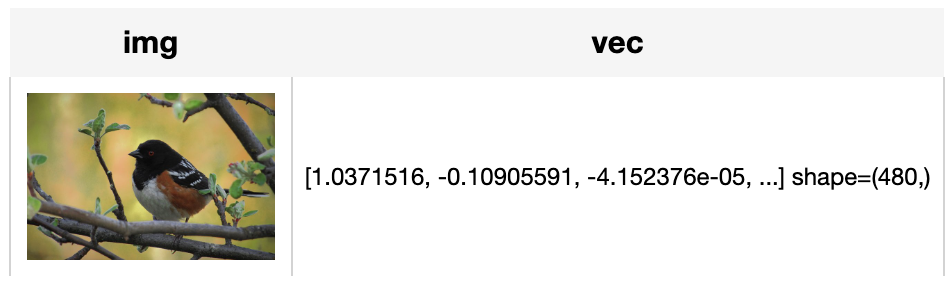

```python

from towhee import pipe, ops, DataCollection

p = (

pipe.input('path')

.map('path', 'img', ops.image_decode())

.map('img', 'vec', ops.image_embedding.mpvit(model_name='mpvit_base'))

.output('img', 'vec')

)

DataCollection(p('towhee.jpeg')).show()

```

## Factory Constructor

Create the operator via the following factory method:

***image_embedding.mpvit(model_name='mpvit_base', \*\*kwargs)***

**Parameters:**

***model_name:*** *str*

Pretrained model name include `mpvit_tiny`, `mpvit_xsmall`, `mpvit_small` or `mpvit_base`, all of which are pretrained on ImageNet-1K dataset, for more information, please refer the original [MPViT github page](https://github.com/youngwanLEE/MPViT).

***weights_path:*** *str*

Your local weights path, default is None, which means using the pretrained model weights.

***device:*** *str*

Model device, `cpu` or `cuda`.

***num_classes:*** *int*

The number of classes. The default value is 1000.

It is related to model and dataset. If you want to fine-tune this operator, you can change this value to adapt your datasets.

***skip_preprocess:*** *bool*

The flag to control whether to skip image pre-process.

The default value is False.

If set to True, it will skip image preprocessing steps (transforms).

In this case, input image data must be prepared in advance in order to properly fit the model.

## Interface

An image embedding operator takes a towhee image as input.

It uses the pre-trained model specified by model name to generate an image embedding in ndarray.

**Parameters:**

***data:*** *towhee.types.Image*

The decoded image data in towhee Image (a subset of numpy.ndarray).

**Returns:** *numpy.ndarray*

An image embedding generated by model, in shape of (feature_dim,).

For mpvit_tiny model, feature_dim = 216.

For mpvit_xsmall model, feature_dim = 256.

For mpvit_small model, feature_dim = 288.

For mpvit_base model, feature_dim = 480.

# More Resources

- [How to Get the Right Vector Embeddings - Zilliz blog](https://zilliz.com/blog/how-to-get-the-right-vector-embeddings): A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- [What are Vision Transformers (ViT)? - Zilliz blog](https://zilliz.com/learn/understanding-vision-transformers-vit): Vision Transformers (ViTs) are neural network models that use transformers to perform computer vision tasks like object detection and image classification.

- [What Are Vector Embeddings?](https://zilliz.com/glossary/vector-embeddings): Learn the definition of vector embeddings, how to create vector embeddings, and more.

- [Image Embeddings for Enhanced Image Search - Zilliz blog](https://zilliz.com/learn/image-embeddings-for-enhanced-image-search): Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- [Enhancing Information Retrieval with Sparse Embeddings | Zilliz Learn - Zilliz blog](https://zilliz.com/learn/enhancing-information-retrieval-learned-sparse-embeddings): Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database

- [An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog](https://zilliz.com/learn/everything-you-should-know-about-vector-embeddings): In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.