# Image Embedding with Timm

*author: [Jael Gu](https://github.com/jaelgu), Filip*

## Description

An image embedding operator generates a vector given an image.

This operator extracts features from an image using pre-trained models provided by [Timm](https://github.com/rwightman/pytorch-image-models).

Timm is a deep-learning library developed by [Ross Wightman](https://twitter.com/wightmanr), who maintains state-of-the-art deep-learning models and tools for computer vision.

## Code Example

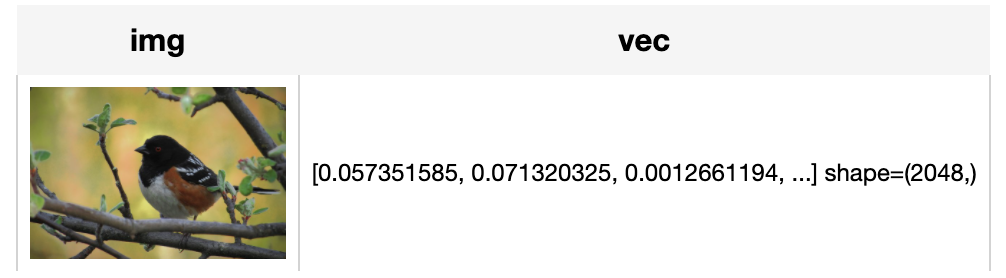

Load an image from the path './towhee.jpeg' and use the pre-trained ResNet50 model ('resnet50') to generate an image embedding.

*Write the pipeline in simplified style:*

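```python
import towhee

# Blank lines after a trailing backslash would break the continuation,
# so the chained calls are kept on consecutive lines.
towhee.glob('./towhee.jpeg') \
    .image_decode() \
    .image_embedding.timm(model_name='resnet50') \
    .show()
```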

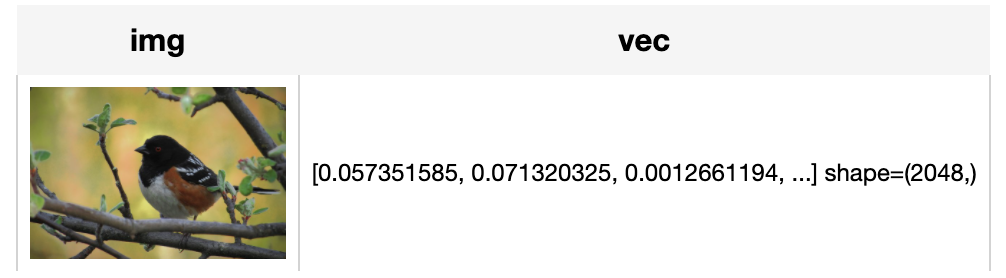

*Write the same pipeline with explicit input/output name specifications:*

```python
import towhee

# Each stage is annotated with [input_field, output_field].
towhee.glob['path']('./towhee.jpeg') \
    .image_decode['path', 'img']() \
    .image_embedding.timm['img', 'vec'](model_name='resnet50') \
    .select['img', 'vec']() \
    .show()
```
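In the explicit style, the bracketed names declare which field each stage reads and which field it writes: `image_decode['path', 'img']` reads `path` and writes `img`, and `select['img', 'vec']` keeps only those two fields. The sketch below models that data flow in plain Python, treating each row as a dict. It is an illustration under that assumption only: `apply`, `select`, `decode_stub`, and `embed_stub` are hypothetical stand-ins, not Towhee functions, and Towhee's real implementation differs.

```python
from typing import Any, Callable, Dict, List

Row = Dict[str, Any]


def apply(rows: List[Row], src: str, dst: str, fn: Callable[[Any], Any]) -> List[Row]:
    """Read field `src` from each row and write fn(value) into field `dst`."""
    return [{**row, dst: fn(row[src])} for row in rows]


def select(rows: List[Row], *fields: str) -> List[Row]:
    """Keep only the named fields, mirroring .select['img', 'vec']()."""
    return [{f: row[f] for f in fields} for row in rows]


# Hypothetical stand-ins for image_decode and image_embedding.timm.
def decode_stub(path: str) -> str:
    return f"<pixels of {path}>"


def embed_stub(img: str) -> List[float]:
    return [float(len(img)), 0.0, 1.0]  # fake fixed-size vector


rows = [{"path": "./towhee.jpeg"}]              # towhee.glob['path'](...)
rows = apply(rows, "path", "img", decode_stub)  # .image_decode['path', 'img']()
rows = apply(rows, "img", "vec", embed_stub)    # .image_embedding.timm['img', 'vec'](...)
rows = select(rows, "img", "vec")               # .select['img', 'vec']()
print(rows)
```

The point of the named fields is that intermediate values (here `path`) stay addressable until a `select` drops them.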


## Factory Constructor

Create the operator via the following factory method:

***image_embedding.timm(model_name='resnet34', num_classes=1000, skip_preprocess=False)***

**Parameters:**

***model_name:*** *str*

The model name as a string. The default value is "resnet34".

Refer to [Timm Docs](https://fastai.github.io/timmdocs/#List-Models-with-Pretrained-Weights) to get a full list of supported models.

***num_classes:*** *int*

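The number of classes in the model's classification head. The default value is 1000, the size of the ImageNet-1k label set. The appropriate value depends on the model and the dataset it was trained on.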
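In timm, `num_classes` sets the output width of a model's final classification layer. The NumPy sketch below shows that relationship with a hypothetical linear head: a pooled feature vector of length `feature_dim` is mapped to `num_classes` logits. The sizes (2048 features, as produced by ResNet-50) and the random weights are illustrative assumptions. The Interface section describes the returned embedding as shape (feature_dim,), which suggests it is taken before such a head, but treat that as an assumption too.

```python
import numpy as np

feature_dim = 2048   # assumed: ResNet-50 pooled feature width
num_classes = 1000   # the factory default, e.g. ImageNet-1k

rng = np.random.default_rng(0)
# Hypothetical classification head: weight (num_classes, feature_dim), bias (num_classes,)
weight = rng.standard_normal((num_classes, feature_dim)).astype(np.float32) * 0.01
bias = np.zeros(num_classes, dtype=np.float32)

features = rng.standard_normal(feature_dim).astype(np.float32)  # a pooled embedding
logits = weight @ features + bias  # one score per class

print(features.shape, logits.shape)  # (2048,) (1000,)
```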

***skip_preprocess:*** *bool*

The flag that controls whether to skip image preprocessing. The default value is False.

If set to True, the operator skips the image preprocessing steps (transforms). In this case, the input image data must be prepared in advance so that it fits the model.
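With `skip_preprocess=True`, the input must already look like what the model expects. For many ImageNet-trained timm models that means a fixed-size RGB crop (often 224×224), scaled to [0, 1], normalized with the ImageNet mean and standard deviation, and laid out channels-first. The NumPy sketch below assumes exactly those settings and uses a simple nearest-neighbour resize; the real values vary per model (timm reports them through `timm.data.resolve_data_config`), so check them for your chosen `model_name`. The helper names are hypothetical.

```python
import numpy as np

# Assumed ImageNet defaults; the real values depend on the chosen model.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def resize_short_side(img: np.ndarray, size: int) -> np.ndarray:
    """Nearest-neighbour resize so the shorter side equals `size` (HWC array)."""
    h, w = img.shape[:2]
    scale = size / min(h, w)
    new_h, new_w = max(size, round(h * scale)), max(size, round(w * scale))
    rows = (np.arange(new_h) * h / new_h).astype(int)
    cols = (np.arange(new_w) * w / new_w).astype(int)
    return img[rows][:, cols]


def center_crop(img: np.ndarray, size: int) -> np.ndarray:
    """Take the central size x size window of an HWC array."""
    h, w = img.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    return img[top:top + size, left:left + size]


def preprocess(img: np.ndarray, resize: int = 256, crop: int = 224) -> np.ndarray:
    """HWC uint8 RGB image -> normalized CHW float32 array."""
    x = center_crop(resize_short_side(img, resize), crop).astype(np.float32) / 255.0
    x = (x - MEAN) / STD          # per-channel normalization, broadcast over H and W
    return x.transpose(2, 0, 1)   # HWC -> CHW


image = np.random.default_rng(0).integers(0, 256, size=(480, 640, 3), dtype=np.uint8)
x = preprocess(image)
print(x.shape, x.dtype)  # (3, 224, 224) float32
```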

## Interface

An image embedding operator takes a towhee image as input. It uses the pre-trained model specified by `model_name` to generate an image embedding as a numpy.ndarray.

**Parameters:**

***data:*** *towhee.types.Image*

The decoded image data as a towhee Image (a subclass of numpy.ndarray).
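A subclass of `numpy.ndarray` behaves like a plain array while carrying extra metadata, such as the image's channel order. The sketch below shows the standard NumPy subclassing pattern with a hypothetical `ModeImage` class that holds a `mode` string; it is not Towhee's actual `towhee.types.Image`, whose attributes may differ.

```python
import numpy as np


class ModeImage(np.ndarray):
    """Hypothetical ndarray subclass that remembers its channel order."""

    def __new__(cls, data, mode: str = "RGB"):
        obj = np.asarray(data).view(cls)  # reuse the buffer, change the type
        obj.mode = mode
        return obj

    def __array_finalize__(self, obj):
        # Called for views and slices too; propagate the mode when available.
        if obj is None:
            return
        self.mode = getattr(obj, "mode", None)


img = ModeImage(np.zeros((4, 6, 3), dtype=np.uint8), mode="RGB")
crop = img[:2, :3]  # slicing keeps the subclass and its mode
print(type(crop).__name__, crop.mode, crop.shape, isinstance(img, np.ndarray))
```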

**Returns:** *numpy.ndarray*

An image embedding generated by the model, with shape (feature_dim,).
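For convolutional backbones such as ResNet, a common way to turn the last feature map of shape (C, H, W) into a vector of shape (feature_dim,) is global average pooling over the spatial axes, so feature_dim equals C (2048 for ResNet-50). The NumPy sketch below assumes that approach; this README does not state how the operator reduces features, and transformer models may use a class token instead. `global_avg_pool` is a hypothetical helper.

```python
import numpy as np


def global_avg_pool(feature_map: np.ndarray) -> np.ndarray:
    """Average a (C, H, W) feature map over H and W, giving a (C,) vector."""
    if feature_map.ndim != 3:
        raise ValueError(f"expected (C, H, W), got shape {feature_map.shape}")
    return feature_map.mean(axis=(1, 2))


# Assumed example: ResNet-50 at 224x224 input yields a 2048 x 7 x 7 feature map.
fmap = np.random.default_rng(0).standard_normal((2048, 7, 7)).astype(np.float32)
vec = global_avg_pool(fmap)
print(vec.shape)  # (2048,)
```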

## Towhee Serve

The following models are supported by towhee.serve.

**Model List**

models | models | models
--------- | ---------- | ------------
adv_inception_v3 | bat_resnext26ts | beit_base_patch16_224
beit_base_patch16_224_in22k | beit_base_patch16_384 | beit_large_patch16_224
beit_large_patch16_224_in22k | beit_large_patch16_384 | beit_large_patch16_512
botnet26t_256 | cait_m36_384 | cait_m48_448
cait_s24_224 | cait_s24_384 | cait_s36_384
cait_xs24_384 | cait_xxs24_224 | cait_xxs24_384
cait_xxs36_224 | cait_xxs36_384 | coat_lite_mini
coat_lite_small | coat_lite_tiny | convit_base
convit_small | convit_tiny | convmixer_768_32
convmixer_1024_20_ks9_p14 | convmixer_1536_20 | convnext_base
convnext_base_384_in22ft1k | convnext_base_in22ft1k | convnext_base_in22k
convnext_large | convnext_large_384_in22ft1k | convnext_large_in22ft1k
convnext_large_in22k | convnext_small | convnext_small_384_in22ft1k
convnext_small_in22ft1k | convnext_small_in22k | convnext_tiny
convnext_tiny_384_in22ft1k | convnext_tiny_hnf | convnext_tiny_in22ft1k
convnext_tiny_in22k | convnext_xlarge_384_in22ft1k | convnext_xlarge_in22ft1k
convnext_xlarge_in22k | cs3darknet_focus_l | cs3darknet_focus_m
cs3darknet_l | cs3darknet_m | cspdarknet53
cspresnet50 | cspresnext50 | darknet53
deit3_base_patch16_224 | deit3_base_patch16_224_in21ft1k | deit3_base_patch16_384
deit3_base_patch16_384_in21ft1k | deit3_huge_patch14_224 | deit3_huge_patch14_224_in21ft1k
deit3_large_patch16_224 | deit3_large_patch16_224_in21ft1k | deit3_large_patch16_384
deit3_large_patch16_384_in21ft1k | deit3_small_patch16_224 | deit3_small_patch16_224_in21ft1k
deit3_small_patch16_384 | deit3_small_patch16_384_in21ft1k | deit_base_distilled_patch16_224
deit_base_distilled_patch16_384 | deit_base_patch16_224 | deit_base_patch16_384
deit_small_distilled_patch16_224 | deit_small_patch16_224 | deit_tiny_distilled_patch16_224
deit_tiny_patch16_224 | densenet121 | densenet161
densenet169 | densenet201 | densenetblur121d
dla34 | dla46_c | dla46x_c
dla60 | dla60_res2net | dla60_res2next
dla60x | dla60x_c | dla102
dla102x | dla102x2 | dla169
dm_nfnet_f0 | dm_nfnet_f1 | dm_nfnet_f2
dm_nfnet_f3 | dm_nfnet_f4 | dm_nfnet_f5
dm_nfnet_f6 | dpn68 | dpn68b
dpn92 | dpn98 | dpn107
dpn131 | eca_botnext26ts_256 | eca_halonext26ts
eca_nfnet_l0 | eca_nfnet_l1 | eca_nfnet_l2
eca_resnet33ts | eca_resnext26ts | ecaresnet26t
ecaresnet50t | ecaresnet269d | edgenext_small
edgenext_small_rw | edgenext_x_small | edgenext_xx_small
efficientnet_b0 | efficientnet_b1 | efficientnet_b2
efficientnet_b3 | efficientnet_b4 | efficientnet_el
efficientnet_el_pruned | efficientnet_em | efficientnet_es
efficientnet_es_pruned | efficientnet_lite0 | efficientnetv2_rw_m
efficientnetv2_rw_s | efficientnetv2_rw_t | ens_adv_inception_resnet_v2
ese_vovnet19b_dw | ese_vovnet39b | fbnetc_100
fbnetv3_b | fbnetv3_d | fbnetv3_g
gc_efficientnetv2_rw_t | gcresnet33ts | gcresnet50t
gcresnext26ts | gcresnext50ts | gernet_l
gernet_m | gernet_s | ghostnet_100
gluon_inception_v3 | gluon_resnet18_v1b | gluon_resnet34_v1b
gluon_resnet50_v1b | gluon_resnet50_v1c | gluon_resnet50_v1d
gluon_resnet50_v1s | gluon_resnet101_v1b | gluon_resnet101_v1c
gluon_resnet101_v1d | gluon_resnet101_v1s | gluon_resnet152_v1b
gluon_resnet152_v1c | gluon_resnet152_v1d | gluon_resnet152_v1s
gluon_resnext50_32x4d | gluon_resnext101_32x4d | gluon_resnext101_64x4d
gluon_senet154 | gluon_seresnext50_32x4d | gluon_seresnext101_32x4d
gluon_seresnext101_64x4d | gluon_xception65 | gmlp_s16_224
halo2botnet50ts_256 | halonet26t | halonet50ts
haloregnetz_b | hardcorenas_a | hardcorenas_b
hardcorenas_c | hardcorenas_d | hardcorenas_e
hrnet_w18 | hrnet_w18_small | hrnet_w18_small_v2
hrnet_w30 | hrnet_w32 | hrnet_w40
hrnet_w44 | hrnet_w48 | hrnet_w64
ig_resnext101_32x8d | ig_resnext101_32x16d | ig_resnext101_32x32d
ig_resnext101_32x48d | inception_resnet_v2 | inception_v3
inception_v4 | jx_nest_base | jx_nest_small
jx_nest_tiny | lambda_resnet26rpt_256 | lambda_resnet26t
lambda_resnet50ts | lamhalobotnet50ts_256 | lcnet_050
lcnet_075 | lcnet_100 | legacy_seresnet18
legacy_seresnet34 | legacy_seresnet50 | legacy_seresnet101
legacy_seresnet152 | legacy_seresnext26_32x4d | levit_128
levit_128s | levit_192 | levit_256
levit_384 | mixer_b16_224 | mixer_b16_224_in21k
mixer_b16_224_miil | mixer_b16_224_miil_in21k | mixer_l16_224
mixer_l16_224_in21k | mixnet_l | mixnet_m
mixnet_s | mixnet_xl | mnasnet_100
mnasnet_small | mobilenetv2_050 | mobilenetv2_100
mobilenetv2_110d | mobilenetv2_120d | mobilenetv2_140
mobilenetv3_large_100 | mobilenetv3_large_100_miil | mobilenetv3_large_100_miil_in21k
mobilenetv3_rw | mobilenetv3_small_050 | mobilenetv3_small_075
mobilenetv3_small_100 | mobilevit_s | mobilevit_xs
mobilevit_xxs | mobilevitv2_050 | mobilevitv2_075
mobilevitv2_100 | mobilevitv2_125 | mobilevitv2_150
mobilevitv2_150_384_in22ft1k | mobilevitv2_150_in22ft1k | mobilevitv2_175
mobilevitv2_175_384_in22ft1k | mobilevitv2_175_in22ft1k | mobilevitv2_200
mobilevitv2_200_384_in22ft1k | mobilevitv2_200_in22ft1k | nf_regnet_b1
nf_resnet50 | nfnet_l0 | pit_b_224
pit_b_distilled_224 | pit_s_224 | pit_s_distilled_224
pit_ti_224 | pit_ti_distilled_224 | pit_xs_224
