diff --git a/README.md b/README.md

index c2eb97d..333ca49 100644

--- a/README.md

+++ b/README.md

@@ -1,4 +1,4 @@

-#Japanese Image-Text Retrieval Embdding with CLIP

+# Japanese Image-Text Retrieval Embdding with CLIP

*author: David Wang*

@@ -28,15 +28,15 @@ import towhee

towhee.glob('./teddy.jpg') \

.image_decode() \

- .image_text_embedding.japanese_clip(model_name='clip_vit_b32', modality='image') \

+ .image_text_embedding.japanese_clip(model_name='japanese-clip-vit-b-16', modality='image') \

.show()

towhee.dc(["スケートボードに乗っているテディベア。"]) \

- .image_text_embedding.japanese_clip(model_name='clip_vit_b32', modality='text') \

+ .image_text_embedding.japanese_clip(model_name='japanese-clip-vit-b-16', modality='text') \

.show()

```

- -

- +

+ +

+ *Write a same pipeline with explicit inputs/outputs name specifications:*

@@ -45,17 +45,17 @@ import towhee

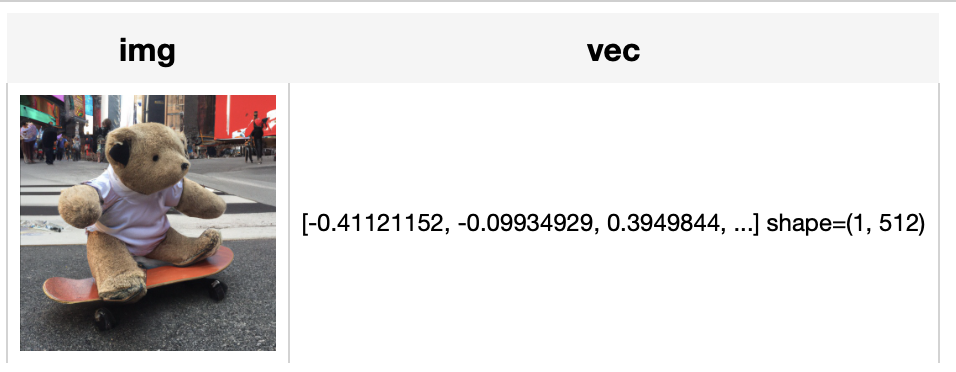

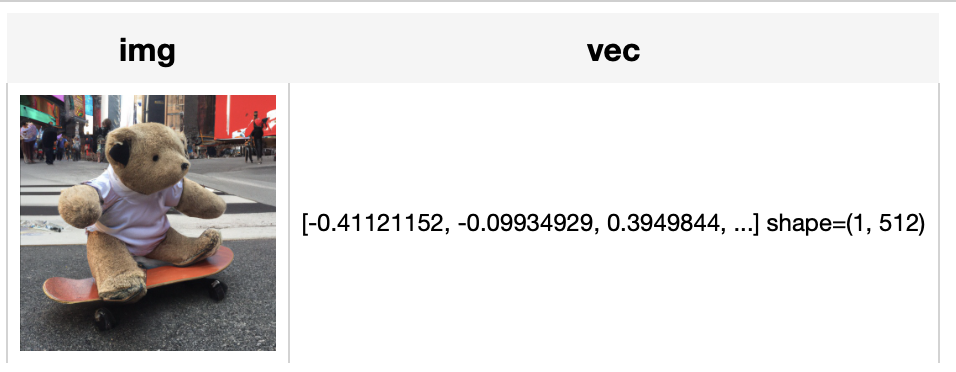

towhee.glob['path']('./teddy.jpg') \

.image_decode['path', 'img']() \

- .image_text_embedding.japanese_clip['img', 'vec'](model_name='clip_vit_b32', modality='image') \

+ .image_text_embedding.japanese_clip['img', 'vec'](model_name='japanese-clip-vit-b-16', modality='image') \

.select['img', 'vec']() \

.show()

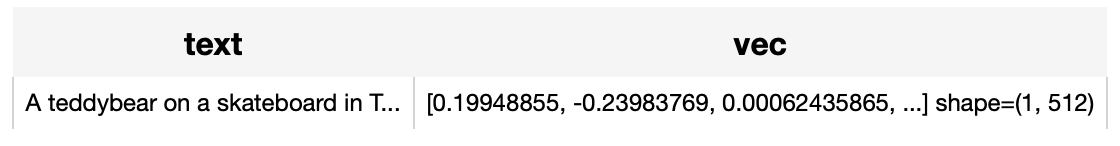

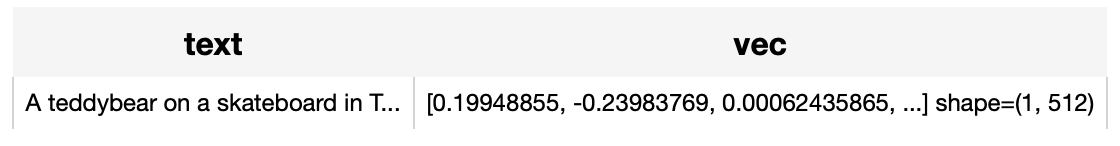

towhee.dc['text'](["スケートボードに乗っているテディベア。"]) \

- .image_text_embedding.japanese_clip['text','vec'](model_name='clip_vit_b32', modality='text') \

+ .image_text_embedding.japanese_clip['text','vec'](model_name='japanese-clip-vit-b-16', modality='text') \

.select['text', 'vec']() \

.show()

```

- -

- +

+ +

+

diff --git a/tabular1.png b/tabular1.png

new file mode 100644

index 0000000..acf1386

Binary files /dev/null and b/tabular1.png differ

diff --git a/tabular2.png b/tabular2.png

new file mode 100644

index 0000000..b85cef4

Binary files /dev/null and b/tabular2.png differ

diff --git a/vec1.png b/vec1.png

new file mode 100644

index 0000000..5dca0f9

Binary files /dev/null and b/vec1.png differ

diff --git a/vec2.png b/vec2.png

new file mode 100644

index 0000000..81d431b

Binary files /dev/null and b/vec2.png differ
