Image-Text Retrieval Embedding with SLIP

author: David Wang

Description

This operator extracts image or text features with SLIP, a multi-task learning framework that combines self-supervised learning with CLIP pre-training. It is adapted from facebookresearch/SLIP.
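To make the multi-task idea concrete, here is a minimal pure-Python sketch of a symmetric CLIP-style contrastive loss on a toy batch, plus a weighted sum with a self-supervised term. It is an illustration under stated assumptions, not the facebookresearch/SLIP implementation: the helper names (`clip_loss`, `combined_loss`), the temperature of 0.1 and the `ssl_weight` parameter are hypothetical, and the self-supervised loss is passed in as a precomputed number instead of being computed from augmented image views.

```python
import math
from typing import List

Vector = List[float]


def _normalize(v: Vector) -> Vector:
    """Scale a vector to unit L2 norm."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def _cross_entropy(logits: List[float], target: int) -> float:
    """Cross-entropy of one row of logits against the index of the correct entry."""
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    log_sum_exp = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_sum_exp - logits[target]


def clip_loss(image_embs: List[Vector], text_embs: List[Vector], temperature: float = 0.1) -> float:
    """Symmetric contrastive loss: image i should match text i, and text i should match image i."""
    imgs = [_normalize(v) for v in image_embs]
    txts = [_normalize(v) for v in text_embs]
    n = len(imgs)
    # logits[i][j] is the cosine similarity of image i and text j, divided by the temperature.
    logits = [[sum(a * b for a, b in zip(imgs[i], txts[j])) / temperature for j in range(n)]
              for i in range(n)]
    image_to_text = sum(_cross_entropy(logits[i], i) for i in range(n)) / n
    # Column j holds the scores of text j against every image.
    text_to_image = sum(_cross_entropy([logits[i][j] for i in range(n)], j) for j in range(n)) / n
    return (image_to_text + text_to_image) / 2


def combined_loss(clip: float, ssl: float, ssl_weight: float = 1.0) -> float:
    """Hypothetical multi-task objective: the CLIP loss plus a weighted self-supervised loss."""
    return clip + ssl_weight * ssl


# Toy batch of two image/text pairs with 2-d embeddings.
aligned = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[1.0, 0.0], [0.0, 1.0]])
swapped = clip_loss([[1.0, 0.0], [0.0, 1.0]], [[0.0, 1.0], [1.0, 0.0]])
print(aligned < swapped)  # True: matched pairs give a much lower loss
```

The weighted-sum form keeps the two objectives independent, so the balance between them is a single scalar; the default weight of 1.0 here is an assumption for illustration.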

Code Example

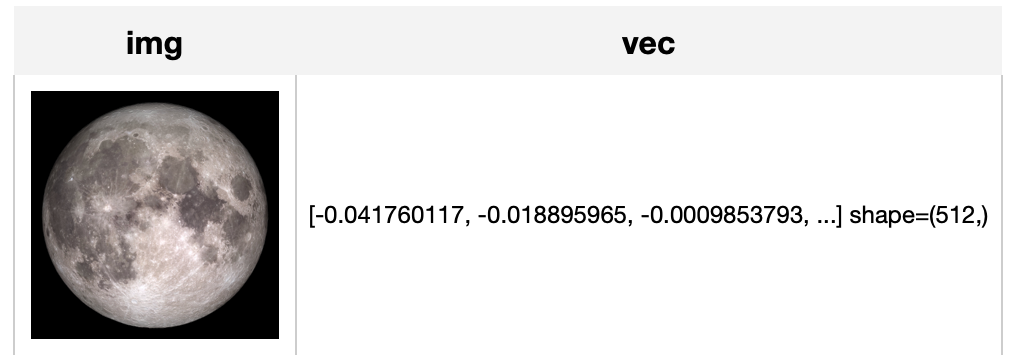

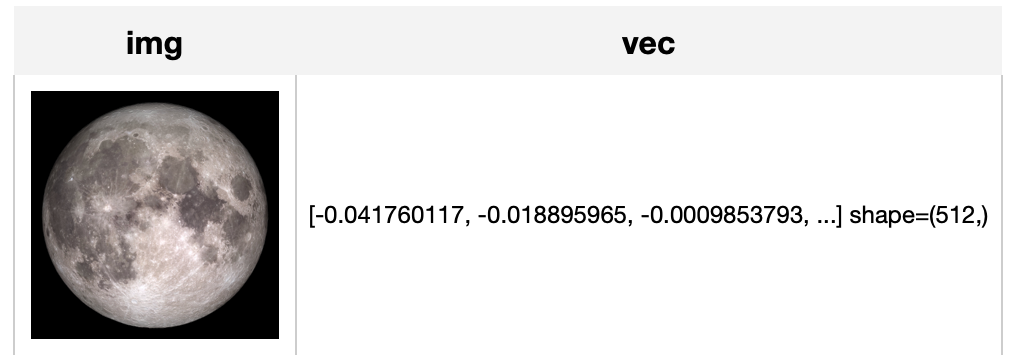

Load an image from path './moon.jpg' to generate an image embedding.

Read the text 'moon in the night.' to generate a text embedding.

Write a pipeline with explicit input/output name specifications:

```python
from towhee.dc2 import pipe, ops, DataCollection

# Image pipeline: decode the file at 'url' into an RGB image, then embed it with SLIP.
img_pipe = (
    pipe.input('url')
        .map('url', 'img', ops.image_decode.cv2_rgb())
        .map('img', 'vec', ops.image_text_embedding.slip(model_name='slip_vit_small', modality='image'))
        .output('img', 'vec')
)

# Text pipeline: embed the raw string with the same SLIP model.
text_pipe = (
    pipe.input('text')
        .map('text', 'vec', ops.image_text_embedding.slip(model_name='slip_vit_small', modality='text'))
        .output('text', 'vec')
)

# Display each pipeline's outputs as a table.
DataCollection(img_pipe('./moon.jpg')).show()
DataCollection(text_pipe('moon in the night.')).show()
```
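Because both pipelines embed into a shared space (assuming, as here, that both use the same `model_name`), text-to-image retrieval reduces to ranking candidates by similarity. The sketch below ranks caption embeddings against one image embedding with cosine similarity. The short vectors are made-up stand-ins for the `vec` outputs, and `rank_by_cosine` is a hypothetical helper, not part of Towhee.

```python
import math
from typing import Dict, List, Tuple


def cosine(a: List[float], b: List[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_by_cosine(query: List[float], candidates: Dict[str, List[float]]) -> List[Tuple[str, float]]:
    """Return (key, score) pairs sorted from most to least similar to the query."""
    scored = [(key, cosine(query, vec)) for key, vec in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up 3-d vectors standing in for real SLIP embeddings.
image_vec = [0.9, 0.1, 0.2]
text_vecs = {
    'moon in the night.': [0.8, 0.2, 0.1],
    'a dog on the beach.': [0.1, 0.9, 0.3],
}
for caption, score in rank_by_cosine(image_vec, text_vecs):
    print(f'{score:.3f}  {caption}')
```

With real outputs each `vec` is a `numpy.ndarray`; the same arithmetic applies, and `numpy.dot` with `numpy.linalg.norm` can replace the explicit loops.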

Factory Constructor

Create the operator via the following factory method:

slip(model_name, modality)

Parameters:

model_name: str

The model name of SLIP. Supported model names:

- slip_vit_small

- slip_vit_base

- slip_vit_large

modality: str

The modality used to generate the embedding: 'image' or 'text'.
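The parameter constraints above can be checked before the operator is constructed, so a typo fails fast with a clear message. The helper below is hypothetical (it is not part of Towhee or the SLIP operator); it only encodes the supported model names and the two modality values listed in this README.

```python
SUPPORTED_MODELS = ('slip_vit_small', 'slip_vit_base', 'slip_vit_large')
SUPPORTED_MODALITIES = ('image', 'text')


def check_slip_args(model_name: str, modality: str) -> None:
    """Raise ValueError if the arguments fall outside the values documented for slip()."""
    if model_name not in SUPPORTED_MODELS:
        raise ValueError(f'unsupported model_name {model_name!r}; expected one of {SUPPORTED_MODELS}')
    if modality not in SUPPORTED_MODALITIES:
        raise ValueError(f'unsupported modality {modality!r}; expected one of {SUPPORTED_MODALITIES}')


check_slip_args('slip_vit_small', 'image')  # valid arguments: returns None without raising
```

Calling `check_slip_args('slip_vit_small', 'audio')` raises a `ValueError` naming the accepted values.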

Interface

The image-text embedding operator takes a Towhee image or a string as input and generates an embedding as a numpy.ndarray.

Parameters:

data: towhee.types.Image (a subclass of numpy.ndarray) or str

The input data (an image or a text string, depending on the specified modality) from which to generate the embedding.

Returns: numpy.ndarray

The embedding extracted by the model.
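A common follow-up to obtaining the returned vector is L2 normalization, after which a plain inner product equals cosine similarity; this is convenient when vectors are stored in an index that scores by inner product. This README does not say whether the operator already normalizes its output, so the sketch below normalizes explicitly, using hypothetical `l2_normalize` and `inner_product` helpers on plain lists.

```python
import math
from typing import List


def l2_normalize(vec: List[float]) -> List[float]:
    """Return vec scaled to unit length; raise ValueError for an all-zero vector."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        raise ValueError('cannot normalize a zero vector')
    return [x / norm for x in vec]


def inner_product(a: List[float], b: List[float]) -> float:
    """Plain dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


a = l2_normalize([3.0, 4.0])  # [0.6, 0.8]
b = l2_normalize([4.0, 3.0])  # [0.8, 0.6]
# For unit vectors, the inner product is the cosine similarity.
print(inner_product(a, b))
```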