copied

Readme

Files and versions

4.0 KiB

Cartoonize with CartoonGAN

author: Shiyu

Description

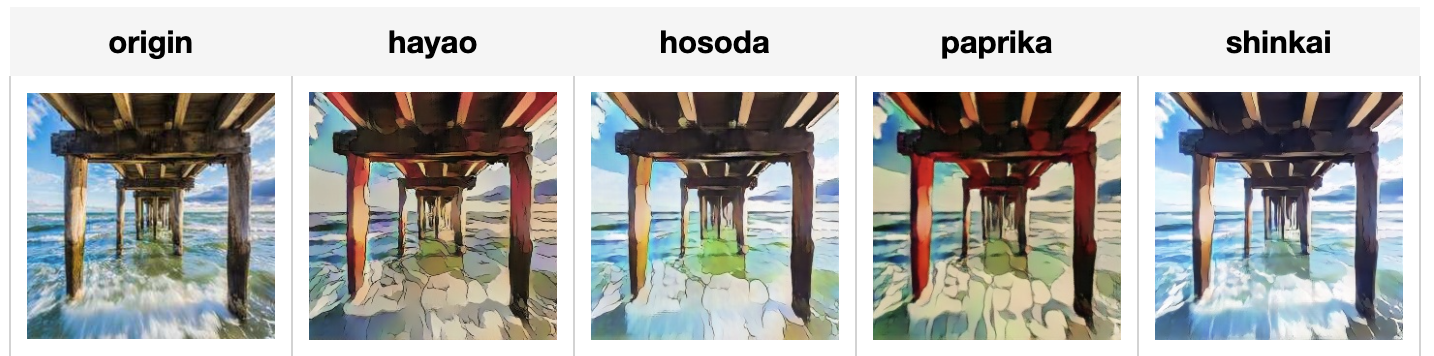

Convert an image into an cartoon image using CartoonGAN.

Code Example

Load an image from path './test.png'.

Write the pipeline in simplified style:

import towhee

towhee.glob('./test.png') \

.image_decode() \

.img2img_translation.cartoongan(model_name = 'Hayao') \

.show()

Write a pipeline with explicit inputs/outputs name specifications:

import towhee

towhee.glob['path']('./test.png') \

.image_decode['path', 'origin']() \

.img2img_translation.cartoongan['origin', 'hayao'](model_name = 'Hayao') \

.img2img_translation.cartoongan['origin', 'hosoda'](model_name = 'Hosoda') \

.img2img_translation.cartoongan['origin', 'paprika'](model_name = 'Paprika') \

.img2img_translation.cartoongan['origin', 'shinkai'](model_name = 'Shinkai') \

.select['origin', 'hayao', 'hosoda', 'paprika', 'shinkai']() \

.show()

Factory Constructor

Create the operator via the following factory method

img2img_translation.cartoongan(model_name = 'which anime model to use')

Model options:

- Hayao

- Hosoda

- Paprika

- Shinkai

Interface

Takes in a numpy rgb image in channels first. It transforms input into animated image in numpy form.

Parameters:

model_name: str

Which model to use for transfer.

framework: str

Which ML framework being used, for now only supports PyTorch.

device: str

Which device being used('cpu' or 'cuda'), defaults to 'cpu'.

Returns: towhee.types.Image (a sub-class of numpy.ndarray)

The new image.

# More Resources

- [The guide to clip-vit-base-patch32 | OpenAI](https://zilliz.com/ai-models/clip-vit-base-patch32): clip-vit-base-patch32: a CLIP multimodal model variant by OpenAI for image and text embedding.

- What is Llama 2?: Learn all about Llama 2, get how to create vector embeddings, and more.

- Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar: Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- Zilliz-Hugging Face partnership - Explore transformer data model repo: Use Hugging Faceâs community-driven repository of data models to convert unstructured data into embeddings to store in Zilliz Cloud, and access a code tutorial.

- What is a Generative Adversarial Network? An Easy Guide: Just like we classify animal fossils into domains, kingdoms, and phyla, we classify AI networks, too. At the highest level, we classify AI networks as "discriminative" and "generative." A generative neural network is an AI that creates something new. This differs from a discriminative network, which classifies something that already exists into particular buckets. Kind of like we're doing right now, by bucketing generative adversarial networks (GANs) into appropriate classifications. So, if you were in a situation where you wanted to use textual tags to create a new visual image, like with Midjourney, you'd use a generative network. However, if you had a giant pile of data that you needed to classify and tag, you'd use a discriminative model.

- Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar: Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- Zilliz partnership with PyTorch - View image search solution tutorial: Zilliz partnership with PyTorch

4.0 KiB

Cartoonize with CartoonGAN

author: Shiyu

Description

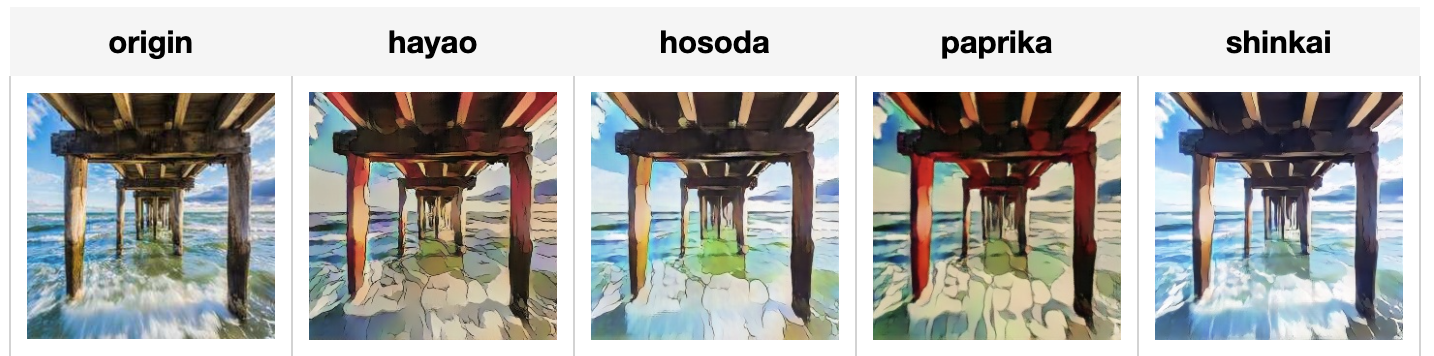

Convert an image into an cartoon image using CartoonGAN.

Code Example

Load an image from path './test.png'.

Write the pipeline in simplified style:

import towhee

towhee.glob('./test.png') \

.image_decode() \

.img2img_translation.cartoongan(model_name = 'Hayao') \

.show()

Write a pipeline with explicit inputs/outputs name specifications:

import towhee

towhee.glob['path']('./test.png') \

.image_decode['path', 'origin']() \

.img2img_translation.cartoongan['origin', 'hayao'](model_name = 'Hayao') \

.img2img_translation.cartoongan['origin', 'hosoda'](model_name = 'Hosoda') \

.img2img_translation.cartoongan['origin', 'paprika'](model_name = 'Paprika') \

.img2img_translation.cartoongan['origin', 'shinkai'](model_name = 'Shinkai') \

.select['origin', 'hayao', 'hosoda', 'paprika', 'shinkai']() \

.show()

Factory Constructor

Create the operator via the following factory method

img2img_translation.cartoongan(model_name = 'which anime model to use')

Model options:

- Hayao

- Hosoda

- Paprika

- Shinkai

Interface

Takes in a numpy rgb image in channels first. It transforms input into animated image in numpy form.

Parameters:

model_name: str

Which model to use for transfer.

framework: str

Which ML framework being used, for now only supports PyTorch.

device: str

Which device being used('cpu' or 'cuda'), defaults to 'cpu'.

Returns: towhee.types.Image (a sub-class of numpy.ndarray)

The new image.

# More Resources

- [The guide to clip-vit-base-patch32 | OpenAI](https://zilliz.com/ai-models/clip-vit-base-patch32): clip-vit-base-patch32: a CLIP multimodal model variant by OpenAI for image and text embedding.

- What is Llama 2?: Learn all about Llama 2, get how to create vector embeddings, and more.

- Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar: Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- Zilliz-Hugging Face partnership - Explore transformer data model repo: Use Hugging Faceâs community-driven repository of data models to convert unstructured data into embeddings to store in Zilliz Cloud, and access a code tutorial.

- What is a Generative Adversarial Network? An Easy Guide: Just like we classify animal fossils into domains, kingdoms, and phyla, we classify AI networks, too. At the highest level, we classify AI networks as "discriminative" and "generative." A generative neural network is an AI that creates something new. This differs from a discriminative network, which classifies something that already exists into particular buckets. Kind of like we're doing right now, by bucketing generative adversarial networks (GANs) into appropriate classifications. So, if you were in a situation where you wanted to use textual tags to create a new visual image, like with Midjourney, you'd use a generative network. However, if you had a giant pile of data that you needed to classify and tag, you'd use a discriminative model.

- Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar: Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- Zilliz partnership with PyTorch - View image search solution tutorial: Zilliz partnership with PyTorch