machine-translation

Machine Translation with Opus-MT

author: David Wang

Description

A machine translation operator translates a sentence, paragraph, or document from a source language to a target language. The models behind this operator are trained on OPUS data by Helsinki-NLP. More details can be found at Helsinki-NLP/Opus-MT.

Code Example

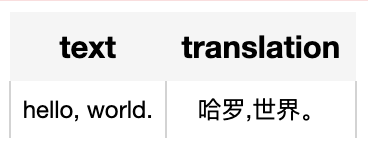

Use the pre-trained model 'opus-mt-en-zh' to generate the Chinese translation for the sentence "Hello, world.".

Write a pipeline with explicit input and output names:

    from towhee import pipe, ops, DataCollection

    p = (
        pipe.input('text')
            .map('text', 'translation', ops.machine_translation.opus_mt(model_name='opus-mt-en-zh'))
            .output('text', 'translation')
    )

    DataCollection(p('hello, world.')).show()

Factory Constructor

Create the operator via the following factory method:

machine_translation.opus_mt(model_name="opus-mt-en-zh")

Parameters:

model_name: str

The name of the pre-trained model, given as a string. The default is "opus-mt-en-zh".

Supported model names:

- opus-mt-en-zh

- opus-mt-zh-en

- opus-tatoeba-en-ja

- opus-tatoeba-ja-en

- opus-mt-ru-en

- opus-mt-en-ru
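Each supported name ends with a source and a target language code, so the name can be looked up from a language pair. The following is a minimal sketch of that lookup; `language_pair` and `pick_model` are hypothetical helpers written for illustration and are not part of the operator.

```python
# Model names copied from the supported list above.
SUPPORTED_MODELS = [
    "opus-mt-en-zh",
    "opus-mt-zh-en",
    "opus-tatoeba-en-ja",
    "opus-tatoeba-ja-en",
    "opus-mt-ru-en",
    "opus-mt-en-ru",
]


def language_pair(model_name):
    """Return (source, target) codes taken from the last two dash-separated parts."""
    parts = model_name.split("-")
    return parts[-2], parts[-1]


# Index the supported names by their (source, target) pair.
MODEL_BY_PAIR = {language_pair(name): name for name in SUPPORTED_MODELS}


def pick_model(source, target):
    """Hypothetical helper: return the supported model name for a language pair."""
    try:
        return MODEL_BY_PAIR[(source, target)]
    except KeyError:
        supported = ", ".join(f"{s}->{t}" for s, t in sorted(MODEL_BY_PAIR))
        raise ValueError(
            f"no supported model for {source}->{target}; choose from: {supported}"
        ) from None
```

The returned string can then be passed as `model_name` to the factory method, for example `pick_model("ja", "en")` for Japanese to English.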

Interface

The operator takes a piece of text as a string. It loads the tokenizer and the pre-trained model identified by the model name, and then returns the translated text as a string.

__call__(text)

Parameters:

text: str

The source-language text, as a string.

Returns:

str

The target language text.
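The load-then-translate flow described in this section can be approximated with the Hugging Face `transformers` Marian classes. This is a rough sketch and not the operator's actual source: the class name `OpusMTSketch`, the helper `hub_id`, and the assumption that each checkpoint is hosted under the `Helsinki-NLP` organization on the Hugging Face Hub are all illustrative.

```python
# Assumption: checkpoints live under this organization on the Hugging Face Hub.
HUB_ORG = "Helsinki-NLP"


def hub_id(model_name):
    """Hypothetical helper: build the Hub repository id for a model name."""
    return f"{HUB_ORG}/{model_name}"


class OpusMTSketch:
    """Illustrative stand-in for the operator; not the real implementation."""

    def __init__(self, model_name="opus-mt-en-zh"):
        # Imported here so the sketch can be read and imported without
        # transformers installed; constructing the class requires it.
        from transformers import MarianMTModel, MarianTokenizer

        repo = hub_id(model_name)
        # Load the tokenizer and the pre-trained model by name.
        self.tokenizer = MarianTokenizer.from_pretrained(repo)
        self.model = MarianMTModel.from_pretrained(repo)

    def __call__(self, text):
        # Tokenize the source text into PyTorch tensors.
        inputs = self.tokenizer(text, return_tensors="pt")
        # Generate target-language token ids.
        generated = self.model.generate(**inputs)
        # Decode the first (only) sequence back into a string.
        return self.tokenizer.decode(generated[0], skip_special_tokens=True)
```

Under these assumptions, `OpusMTSketch("opus-mt-en-zh")("Hello, world.")` would download the checkpoint on first use and return the Chinese translation as a string.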

