Sentence Embedding with Transformers

author: Jael Gu

Description

A sentence embedding operator generates one embedding vector, as an ndarray, for each input text. The embedding represents the semantic information of the whole input text as one vector. This operator is implemented with pre-trained models from Hugging Face Transformers.
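To make "the whole input text as one vector" concrete, here is a minimal sketch of mean pooling, one common way to collapse per-token vectors into a single sentence vector. Whether this operator pools this way is an assumption; `mean_pool` and the toy data are hypothetical and not part of Towhee.

```python
from typing import List, Sequence


def mean_pool(token_vectors: Sequence[Sequence[float]],
              attention_mask: Sequence[int]) -> List[float]:
    """Average the vectors of real tokens (mask == 1), ignoring padding."""
    if len(token_vectors) != len(attention_mask):
        raise ValueError("token_vectors and attention_mask must have equal length")
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention_mask must keep at least one token")
    dim = len(kept[0])
    return [sum(vec[i] for vec in kept) / len(kept) for i in range(dim)]


# Toy 2-dimensional token vectors; the last position is padding.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
sentence_vector = mean_pool(tokens, mask)
print(sentence_vector)  # [2.0, 3.0]
```

The padded position is excluded, so its large values do not distort the sentence vector.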

Code Example

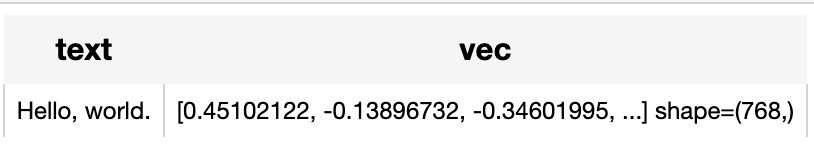

Use the pre-trained model 'sentence-transformers/paraphrase-albert-small-v2' to generate an embedding for the sentence "Hello, world.".

Write a pipeline that names its inputs and outputs explicitly:

from towhee import pipe, ops, DataCollection

p = (
    pipe.input('text')
        .map('text', 'vec',
             ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2'))
        .output('text', 'vec')
)

DataCollection(p('Hello, world.')).show()
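Once a sentence has been mapped to a vector, a typical next step is to compare two vectors. The sketch below computes cosine similarity with the standard library only. The vectors are made-up stand-ins, not real model output, and `cosine_similarity` is a hypothetical helper rather than a Towhee function.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same dimension")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Made-up 3-dimensional vectors standing in for real embeddings.
vec_a = [1.0, 0.0, 1.0]
vec_b = [1.0, 0.0, 1.0]
vec_c = [0.0, 1.0, 0.0]

print(cosine_similarity(vec_a, vec_b))  # close to 1: same direction
print(cosine_similarity(vec_a, vec_c))  # close to 0: orthogonal
```

With real embeddings, each argument would be one `vec` value converted to a sequence of floats.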

Factory Constructor

Create the operator via the following factory method:

sentence_embedding.transformers(model_name=None)

Parameters:

model_name: str

The model name as a string, defaults to None. If None, the operator is initialized without a specified model.

Supported model names: NLP transformers models listed in Huggingface Models.

Note that only the models listed by supported_model_names have been tested.

You can refer to Towhee Pipeline for model performance.

checkpoint_path: str

The path to local checkpoint, defaults to None.

- If None, the operator will download and load the pretrained model by `model_name` from Hugging Face Transformers.

- The checkpoint path could be a path to a directory containing model weights saved using `save_pretrained()` by Hugging Face Transformers.

- Or you can pass a path to a PyTorch `state_dict` save file.
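The three cases above can be sketched as a small decision function. This is only an illustration of the documented rules: `describe_checkpoint` is a hypothetical helper, it loads nothing, and the operator's real logic may differ.

```python
from pathlib import Path
from typing import Optional


def describe_checkpoint(model_name: Optional[str],
                        checkpoint_path: Optional[str]) -> str:
    """Return which of the three documented loading cases applies.

    Hypothetical helper for illustration; it does not load any model.
    """
    if checkpoint_path is None:
        if model_name is None:
            raise ValueError("need model_name when checkpoint_path is None")
        return f"download pretrained weights for {model_name}"
    path = Path(checkpoint_path)
    if path.is_dir():
        # Directory case: weights written by save_pretrained().
        return f"load save_pretrained() directory {path}"
    if path.is_file():
        # File case: a PyTorch state_dict save file.
        return f"load state_dict file {path}"
    raise FileNotFoundError(f"checkpoint_path does not exist: {path}")


print(describe_checkpoint("bert-base-uncased", None))
```

Checking the directory case before the file case keeps the two local options unambiguous.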

tokenizer: object

The method to tokenize input text, defaults to None.

If None, the operator uses the default tokenizer for model_name from Hugging Face Transformers.

return_usage: bool

Whether the call method returns token usage, defaults to False. If True, the call method returns a dictionary containing data (embedding).

Interface

The operator takes a piece of text as a string. It loads the tokenizer and pre-trained model by model name, and then returns a text embedding as a numpy.ndarray.

__call__(data)

Parameters:

data: Union[str, list]

The text in string or a list of texts.

Returns:

numpy.ndarray or list

The text embedding (or token embeddings) extracted by the model.

If data is a string, the operator returns one embedding as a numpy.ndarray with shape (dim,).

If data is a list, the operator returns a list of embeddings with the same length as the input list.
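The string-versus-list contract described above can be sketched as follows. `embed` and `toy_encoder` are hypothetical stand-ins, and plain Python lists replace `numpy.ndarray` so the example runs without any dependencies.

```python
from typing import Callable, List, Union

Vector = List[float]


def embed(data: Union[str, List[str]],
          encode_one: Callable[[str], Vector]) -> Union[Vector, List[Vector]]:
    """Mirror the documented contract: str in, one vector out; list in, list out."""
    if isinstance(data, str):
        return encode_one(data)
    if isinstance(data, list):
        return [encode_one(text) for text in data]
    raise TypeError("data must be a str or a list of str")


def toy_encoder(text: str) -> Vector:
    """Stand-in for the real model: a 2-dimensional vector of simple counts."""
    return [float(len(text)), float(text.count(" "))]


single = embed("Hello, world.", toy_encoder)
batch = embed(["Hello, world.", "Hi"], toy_encoder)
print(single)      # [13.0, 1.0]
print(len(batch))  # 2
```

Callers that always want a list can wrap a single string in a one-element list before calling.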

save_model(format='pytorch', path='default')

Save the model locally in the specified format.

Parameters:

format: str

The format to export model as, such as 'pytorch', 'torchscript', 'onnx', defaults to 'pytorch'.

path: str

The path where exported model is saved to.

By default, the model is saved to the saved directory under the operator cache.

from towhee import ops

op = ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2').get_op()
op.save_model('onnx', 'test.onnx')

PosixPath('/Home/.towhee/operators/sentence-embedding/transformers/main/test.onnx')

supported_model_names(format=None)

Get a list of all supported model names, or only those supported for a specified model format.

Parameters:

format: str

The model format such as 'pytorch', 'torchscript', 'onnx'.

from towhee import ops

op = ops.sentence_embedding.transformers().get_op()
full_list = op.supported_model_names()
onnx_list = op.supported_model_names(format='onnx')

Fine-tune

Requirement

To train this operator, you need to install the following packages in addition to the dependencies in requirements.txt.

! python -m pip install datasets evaluate scikit-learn

Get started

In short, you only need to construct an op instance and pass in the configuration for the task you want to train.

import towhee

bert_op = towhee.ops.sentence_embedding.transformers(model_name='bert-base-uncased').get_op()

data_args = {
    'dataset_name': 'wikitext',
    'dataset_config_name': 'wikitext-2-raw-v1',
}

training_args = {
    'num_train_epochs': 3,  # increase the number of epochs for better metrics
    'per_device_train_batch_size': 8,
    'per_device_eval_batch_size': 8,
    'do_train': True,
    'do_eval': True,
    'output_dir': './tmp/test-mlm',
    'overwrite_output_dir': True
}

bert_op.train(task='mlm', data_args=data_args, training_args=training_args)

For more information, refer to the examples.
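When tuning `num_train_epochs` and `per_device_train_batch_size`, it helps to estimate how many optimizer steps a run will take. The sketch below is simple arithmetic under stated assumptions: no gradient accumulation, and the last partial batch of each epoch is kept. `estimate_optimizer_steps` is a hypothetical helper, and the example count is made up.

```python
import math


def estimate_optimizer_steps(num_examples: int,
                             per_device_train_batch_size: int,
                             num_train_epochs: int,
                             num_devices: int = 1) -> int:
    """Estimate optimizer steps, assuming no gradient accumulation."""
    if min(num_examples, per_device_train_batch_size,
           num_train_epochs, num_devices) <= 0:
        raise ValueError("all arguments must be positive")
    examples_per_step = per_device_train_batch_size * num_devices
    # The last partial batch still counts as one step.
    steps_per_epoch = math.ceil(num_examples / examples_per_step)
    return steps_per_epoch * num_train_epochs


# 1,000 is a made-up example count, not the size of wikitext-2.
print(estimate_optimizer_steps(1000, per_device_train_batch_size=8,
                               num_train_epochs=3))  # 375
```

Doubling the batch size roughly halves the step count, which is worth keeping in mind when setting learning-rate schedules.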

Dive deep and customize your training

You can customize the training script to fit your needs, or refer to the original Hugging Face Transformers training examples.

# More Resources

- [All-Mpnet-Base-V2: Enhancing Sentence Embedding with AI - Zilliz blog](https://zilliz.com/learn/all-mpnet-base-v2-enhancing-sentence-embedding-with-ai): Delve into one of the deep learning models that has played a significant role in the development of sentence embedding: MPNet.

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- Transforming Text: The Rise of Sentence Transformers in NLP - Zilliz blog: Everything you need to know about the Transformers model, exploring its architecture, implementation, and limitations

- Training Your Own Text Embedding Model - Zilliz blog: Explore how to train your text embedding model using the `sentence-transformers` library and generate our training data by leveraging a pre-trained LLM.

- The guide to jina-embeddings-v2-base-en | Jina AI: jina-embeddings-v2-base-en: specialized embedding model for English text and long documents; support sequences of up to 8192 tokens

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- Evaluating Your Embedding Model - Zilliz blog: Review some practical examples to evaluate different text embedding models.

- Training Text Embeddings with Jina AI - Zilliz blog: In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information, along with guidance o

7.5 KiB

Sentence Embedding with Transformers

author: Jael Gu

Description

A sentence embedding operator generates one embedding vector in ndarray for each input text. The embedding represents the semantic information of the whole input text as one vector. This operator is implemented with pre-trained models from Huggingface Transformers.

Code Example

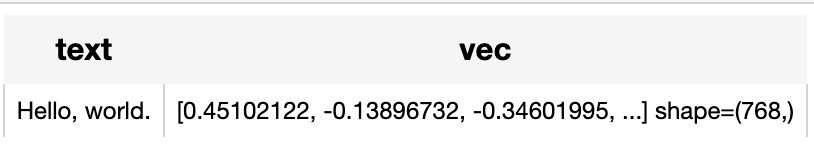

Use the pre-trained model 'sentence-transformers/paraphrase-albert-small-v2' to generate an embedding for the sentence "Hello, world.".

Write a pipeline with explicit inputs/outputs name specifications:

from towhee import pipe, ops, DataCollection

p = (

pipe.input('text')

.map('text', 'vec',

ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2'))

.output('text', 'vec')

)

DataCollection(p('Hello, world.')).show()

Factory Constructor

Create the operator via the following factory method:

sentence_embedding.transformers(model_name=None)

Parameters:

model_name: str

The model name in string, defaults to None. If None, the operator will be initialized without specified model.

Supported model names: NLP transformers models listed in Huggingface Models.

Please note that only models listed in supported_model_names are tested by us.

You can refer to Towhee Pipeline for model performance.

checkpoint_path: str

The path to local checkpoint, defaults to None.

- If None, the operator will download and load pretrained model by

model_namefrom Huggingface transformers. - The checkpoint path could be a path to a directory containing model weights saved using

save_pretrained()by HuggingFace Transformers. - Or you can pass a path to a PyTorch

state_dictsave file.

tokenizer: object

The method to tokenize input text, defaults to None.

If None, the operator will use default tokenizer by model_name from HuggingFace transformers.

return_usage: bool

The flag to return token usage with call method, defaults to False. If True, call method will return a dictionary containing data (embedding).

Interface

The operator takes a piece of text in string as input. It loads tokenizer and pre-trained model using model name, and then return a text emabedding in numpy.ndarray.

__call__(txt)

Parameters:

data: Union[str, list]

The text in string or a list of texts.

Returns:

numpy.ndarray or list

The text embedding (or token embeddings) extracted by model.

If data is string, the operator returns an embedding in numpy.ndarray with shape of (dim,).

If data is a list, the operator returns a list of embedding(s) with length of input list.

save_model(format='pytorch', path='default')

Save model to local with specified format.

Parameters:

format: str

The format to export model as, such as 'pytorch', 'torchscript', 'onnx', defaults to 'pytorch'.

path: str

The path where exported model is saved to.

By default, it will save model to saved directory under the operator cache.

from towhee import ops

op = ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2').get_op()

op.save_model('onnx', 'test.onnx')

PosixPath('/Home/.towhee/operators/sentence-embedding/transformers/main/test.onnx')

supported_model_names(format=None)

Get a list of all supported model names or supported model names for specified model format.

Parameters:

format: str

The model format such as 'pytorch', 'torchscript', 'onnx'.

from towhee import ops

op = ops.sentence_embedding.transformers().get_op()

full_list = op.supported_model_names()

onnx_list = op.supported_model_names(format='onnx')

Fine-tune

Requirement

If you want to train this operator, besides dependency in requirements.txt, you need install these dependencies.

! python -m pip install datasets evaluate scikit-learn

Get started

Simply speaking, you only need to construct an op instance and pass in some configurations to train the specified task.

import towhee

bert_op = towhee.ops.sentence_embedding.transformers(model_name='bert-base-uncased').get_op()

data_args = {

'dataset_name': 'wikitext',

'dataset_config_name': 'wikitext-2-raw-v1',

}

training_args = {

'num_train_epochs': 3, # you can add epoch number to get a better metric.

'per_device_train_batch_size': 8,

'per_device_eval_batch_size': 8,

'do_train': True,

'do_eval': True,

'output_dir': './tmp/test-mlm',

'overwrite_output_dir': True

}

bert_op.train(task='mlm', data_args=data_args, training_args=training_args)

For more infos, refer to the examples.

Dive deep and customize your training

You can change the training script in your customer way. Or your can refer to the original hugging face transformers training examples.

# More Resources

- [All-Mpnet-Base-V2: Enhancing Sentence Embedding with AI - Zilliz blog](https://zilliz.com/learn/all-mpnet-base-v2-enhancing-sentence-embedding-with-ai): Delve into one of the deep learning models that has played a significant role in the development of sentence embedding: MPNet.

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- Transforming Text: The Rise of Sentence Transformers in NLP - Zilliz blog: Everything you need to know about the Transformers model, exploring its architecture, implementation, and limitations

- Training Your Own Text Embedding Model - Zilliz blog: Explore how to train your text embedding model using the

sentence-transformerslibrary and generate our training data by leveraging a pre-trained LLM. - The guide to jina-embeddings-v2-base-en | Jina AI: jina-embeddings-v2-base-en: specialized embedding model for English text and long documents; support sequences of up to 8192 tokens

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- Evaluating Your Embedding Model - Zilliz blog: Review some practical examples to evaluate different text embedding models.

- Training Text Embeddings with Jina AI - Zilliz blog: In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information, along with guidance o