Text Embedding with data2vec

author: David Wang

Description

This operator extracts features for text with data2vec. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture.
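The self-distillation idea described above can be illustrated with a toy NumPy sketch. This is not the data2vec implementation: the `encode` function is a hypothetical stand-in for a Transformer, masking is done by zeroing inputs rather than with a learned mask token, and all names and sizes are assumptions chosen for illustration. It only shows the shape of the setup: a teacher encodes the full input to produce latent targets, a student predicts those targets from a masked view, and the teacher's weights follow the student as an exponential moving average.

```python
import numpy as np

rng = np.random.default_rng(0)

def encode(weights, tokens):
    """Toy encoder (hypothetical): mix in the sequence mean as context, then a linear map and tanh."""
    context = tokens.mean(axis=0, keepdims=True)
    return np.tanh((tokens + context) @ weights)

seq_len, dim = 6, 4                             # illustrative sizes, not the real model's
tokens = rng.normal(size=(seq_len, dim))        # stand-in for token embeddings
student_w = rng.normal(size=(dim, dim))
teacher_w = student_w.copy()                    # teacher starts as a copy of the student

# The student sees a masked view; the teacher sees the full input.
mask = np.array([False, True, False, True, True, False])
masked_tokens = np.where(mask[:, None], 0.0, tokens)

targets = encode(teacher_w, tokens)             # latent targets from the full input
preds = encode(student_w, masked_tokens)        # predictions from the masked view

# Regression loss computed on the masked positions only.
loss = float(np.mean((preds[mask] - targets[mask]) ** 2))

def ema_update(teacher, student, decay=0.999):
    """Move the teacher weights toward the student as an exponential moving average."""
    return decay * teacher + (1.0 - decay) * student

# After a (hypothetical) student update, the teacher follows slowly.
student_w = student_w + 0.1
teacher_w = ema_update(teacher_w, student_w)
print(f"masked-position loss: {loss:.4f}")
```

In a real training loop the student would be updated by gradient descent on this loss; the fixed `+ 0.1` step here only exists to show the teacher update.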

Code Example

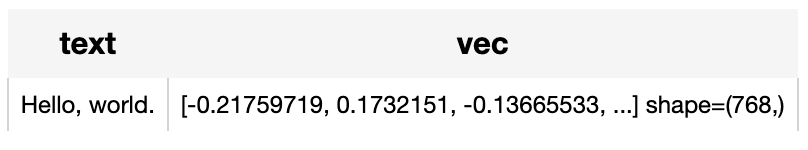

Use the pre-trained model to generate a text embedding for the sentence "Hello, world.".

Write a pipeline with explicit input and output names:

    from towhee import pipe, ops, DataCollection

    p = (
        pipe.input('text')
            .map('text', 'vec', ops.text_embedding.data2vec(model_name='facebook/data2vec-text-base'))
            .output('text', 'vec')
    )

    DataCollection(p('Hello, world.')).show()

Factory Constructor

Create the operator via the following factory method:

data2vec(model_name='facebook/data2vec-text-base')

Parameters:

model_name: str

The name of the model, as a string. The default value is "facebook/data2vec-text-base".

Supported model names:

- facebook/data2vec-text-base

Interface

Parameters:

text: str

The input text, as a string.

Returns: numpy.ndarray

The text embedding extracted by the model.
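Since the operator returns a `numpy.ndarray`, a common next step is comparing two embeddings. The sketch below is one way to do that with cosine similarity. The exact output shape is not stated here, so the helper `pool` (a hypothetical name) averages over any leading axes to handle both a single vector and a token-level array. The random arrays and the width of 768 are placeholders standing in for real operator outputs.

```python
import numpy as np

def pool(embedding: np.ndarray) -> np.ndarray:
    """Collapse an embedding to one vector by averaging over all leading axes."""
    embedding = np.asarray(embedding, dtype=np.float64)
    return embedding.reshape(-1, embedding.shape[-1]).mean(axis=0)

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two (pooled) embeddings."""
    a, b = pool(a), pool(b)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

# Placeholder arrays; in practice these would be the 'vec' outputs of the pipeline.
rng = np.random.default_rng(0)
vec_a = rng.normal(size=(5, 768))   # assumed shape: (tokens, hidden size)
vec_b = rng.normal(size=(7, 768))

print(cosine_similarity(vec_a, vec_a))
print(cosine_similarity(vec_a, vec_b))
```

Mean pooling is only one choice; if the operator already returns a single vector per text, `pool` leaves it unchanged.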

# More Resources

- [What is a Transformer Model? An Engineer's Guide](https://zilliz.com/glossary/transformer-models): A transformer model is a neural network architecture that converts one type of input into a distinct output. Its core strength is handling inputs and outputs of different sequence lengths. It does this by encoding the input into a matrix with predefined dimensions and then combining that with another attention matrix to decode. This transformation unfolds through a sequence of layers, which map words to their corresponding numerical representations. An example of a transformer model is GPT-3, which ingests human language and generates text output.

- The guide to text-embedding-ada-002 model | OpenAI: text-embedding-ada-002: OpenAI's legacy text embedding model; average price/performance compared to text-embedding-3-large and text-embedding-3-small.

- Sentence Transformers for Long-Form Text - Zilliz blog: A deep dive into modern transformer-based embeddings for long-form text.

- Massive Text Embedding Benchmark (MTEB): A standardized way to evaluate text embedding models across a range of tasks and languages, leading to better text embedding models for your app.

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar with a presentation and tutorial on text embedding models.

- The guide to text-embedding-3-small | OpenAI: text-embedding-3-small: OpenAI's small text embedding model optimized for accuracy and efficiency with a lower cost.

- Evaluating Your Embedding Model - Zilliz blog: Review some practical examples to evaluate different text embedding models.

3.8 KiB

Text Embedding with data2vec

author: David Wang

Description

This operator extracts features for text with data2vec. The core idea is to predict latent representations of the full input data based on a masked view of the input in a self-distillation setup using a standard Transformer architecture.

Code Example

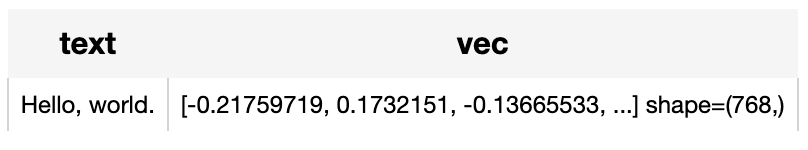

Use the pre-trained model to generate a text embedding for the sentence "Hello, world.".

*Write a pipeline with explicit inputs/outputs name specifications:

from towhee import pipe, ops, DataCollection

p = (

pipe.input('text')

.map('text', 'vec', ops.text_embedding.data2vec(model_name='facebook/data2vec-text-base'))

.output('text', 'vec')

)

DataCollection(p('Hello, world.')).show()

Factory Constructor

Create the operator via the following factory method

data2vec(model_name='facebook/data2vec-text-base')

Parameters:

model_name: str

The model name in string. The default value is "facebook/data2vec-text-base".

Supported model name:

- facebook/data2vec-text-base

Interface

Parameters:

text: str

The text in string.

Returns: numpy.ndarray

The text embedding extracted by model.

# More Resources

- [What is a Transformer Model? An Engineer's Guide](https://zilliz.com/glossary/transformer-models): A transformer model is a neural network architecture. It's proficient in converting a particular type of input into a distinct output. Its core strength lies in its ability to handle inputs and outputs of different sequence length. It does this through encoding the input into a matrix with predefined dimensions and then combining that with another attention matrix to decode. This transformation unfolds through a sequence of collaborative layers, which deconstruct words into their corresponding numerical representations.

At its heart, a transformer model is a bridge between disparate linguistic structures, employing sophisticated neural network configurations to decode and manipulate human language input. An example of a transformer model is GPT-3, which ingests human language and generates text output.

- The guide to text-embedding-ada-002 model | OpenAI: text-embedding-ada-002: OpenAI's legacy text embedding model; average price/performance compared to text-embedding-3-large and text-embedding-3-small.

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- Massive Text Embedding Benchmark (MTEB): A standardized way to evaluate text embedding models across a range of tasks and languages, leading to better text embedding models for your app

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar diving into text embedding models in a presentation and tutorial

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar diving into text embedding models in a presentation and tutorial

- The guide to text-embedding-3-small | OpenAI: text-embedding-3-small: OpenAIâs small text embedding model optimized for accuracy and efficiency with a lower cost.

- Evaluating Your Embedding Model - Zilliz blog: Review some practical examples to evaluate different text embedding models.