# Text Embedding with Transformers

*author: [Jael Gu](https://github.com/jaelgu)*

## Description

A text embedding operator takes a sentence, paragraph, or document in string as an input

and output an embedding vector in ndarray which captures the input's core semantic elements.

This operator is implemented with pre-trained models from [Huggingface Transformers](https://huggingface.co/docs/transformers).

## Code Example

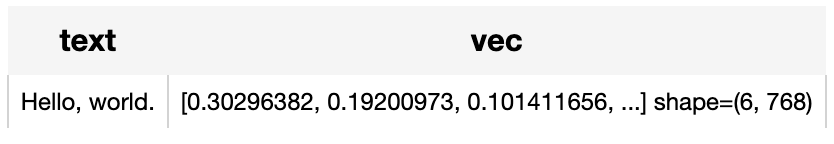

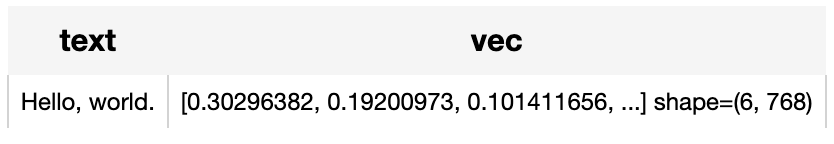

Use the pre-trained model 'distilbert-base-cased'

to generate a text embedding for the sentence "Hello, world.".

*Write a same pipeline with explicit inputs/outputs name specifications:*

- **option 1 (towhee>=0.9.0):**

```python

from towhee.dc2 import pipe, ops, DataCollection

p = (

pipe.input('text')

.map('text', 'vec', ops.text_embedding.transformers(model_name='distilbert-base-cased'))

.output('text', 'vec')

)

DataCollection(p('Hello, world.')).show()

```

- **option 2:**

```python

import towhee

(

towhee.dc['text'](["Hello, world."])

.text_embedding.transformers['text', 'vec'](model_name="distilbert-base-cased")

.show()

)

```

- **option 2:**

```python

import towhee

(

towhee.dc['text'](["Hello, world."])

.text_embedding.transformers['text', 'vec'](model_name="distilbert-base-cased")

.show()

)

```

## Factory Constructor

Create the operator via the following factory method:

***text_embedding.transformers(model_name=None)***

**Parameters:**

***model_name***: *str*

The model name in string, defaults to None.

If None, the operator will be initialized without specified model.

Supported model names:

Albert

- albert-base-v1

- albert-large-v1

- albert-xlarge-v1

- albert-xxlarge-v1

- albert-base-v2

- albert-large-v2

- albert-xlarge-v2

- albert-xxlarge-v2

Bart

- facebook/bart-large

Bert

- bert-base-cased

- bert-base-uncased

- bert-large-cased

- bert-large-uncased

- bert-base-multilingual-uncased

- bert-base-multilingual-cased

- bert-base-chinese

- bert-base-german-cased

- bert-large-uncased-whole-word-masking

- bert-large-cased-whole-word-masking

- bert-large-uncased-whole-word-masking-finetuned-squad

- bert-large-cased-whole-word-masking-finetuned-squad

- bert-base-cased-finetuned-mrpc

- bert-base-german-dbmdz-cased

- bert-base-german-dbmdz-uncased

- cl-tohoku/bert-base-japanese-whole-word-masking

- cl-tohoku/bert-base-japanese-char

- cl-tohoku/bert-base-japanese-char-whole-word-masking

- TurkuNLP/bert-base-finnish-cased-v1

- TurkuNLP/bert-base-finnish-uncased-v1

- wietsedv/bert-base-dutch-cased

BertGeneration

- google/bert_for_seq_generation_L-24_bbc_encoder

BigBird

- google/bigbird-roberta-base

- google/bigbird-roberta-large

- google/bigbird-base-trivia-itc

BigBirdPegasus

- google/bigbird-pegasus-large-arxiv

- google/bigbird-pegasus-large-pubmed

- google/bigbird-pegasus-large-bigpatent

CamemBert

- camembert-base

- Musixmatch/umberto-commoncrawl-cased-v1

- Musixmatch/umberto-wikipedia-uncased-v1

Canine

- google/canine-s

- google/canine-c

Convbert

- YituTech/conv-bert-base

- YituTech/conv-bert-medium-small

- YituTech/conv-bert-small

CTRL

- ctrl

DeBERTa

- microsoft/deberta-base

- microsoft/deberta-large

- microsoft/deberta-xlarge

- microsoft/deberta-base-mnli

- microsoft/deberta-large-mnli

- microsoft/deberta-xlarge-mnli

- microsoft/deberta-v2-xlarge

- microsoft/deberta-v2-xxlarge

- microsoft/deberta-v2-xlarge-mnli

- microsoft/deberta-v2-xxlarge-mnli

DistilBert

- distilbert-base-uncased

- distilbert-base-uncased-distilled-squad

- distilbert-base-cased

- distilbert-base-cased-distilled-squad

- distilbert-base-german-cased

- distilbert-base-multilingual-cased

- distilbert-base-uncased-finetuned-sst-2-english

Electral

- google/electra-small-generator

- google/electra-base-generator

- google/electra-large-generator

- google/electra-small-discriminator

- google/electra-base-discriminator

- google/electra-large-discriminator

Flaubert

- flaubert/flaubert_small_cased

- flaubert/flaubert_base_uncased

- flaubert/flaubert_base_cased

- flaubert/flaubert_large_cased

FNet

- google/fnet-base

- google/fnet-large

FSMT

- facebook/wmt19-ru-en

Funnel

- funnel-transformer/small

- funnel-transformer/small-base

- funnel-transformer/medium

- funnel-transformer/medium-base

- funnel-transformer/intermediate

- funnel-transformer/intermediate-base

- funnel-transformer/large

- funnel-transformer/large-base

- funnel-transformer/xlarge-base

- funnel-transformer/xlarge

GPT

- openai-gpt

- gpt2

- gpt2-medium

- gpt2-large

- gpt2-xl

- distilgpt2

- EleutherAI/gpt-neo-1.3B

- EleutherAI/gpt-j-6B

I-Bert

- kssteven/ibert-roberta-base

LED

- allenai/led-base-16384

MobileBert

- google/mobilebert-uncased

MPNet

- microsoft/mpnet-base

Nystromformer

- uw-madison/nystromformer-512

Reformer

- google/reformer-crime-and-punishment

Splinter

- tau/splinter-base

- tau/splinter-base-qass

- tau/splinter-large

- tau/splinter-large-qass

SqueezeBert

- squeezebert/squeezebert-uncased

- squeezebert/squeezebert-mnli

- squeezebert/squeezebert-mnli-headless

TransfoXL

- transfo-xl-wt103

XLM

- xlm-mlm-en-2048

- xlm-mlm-ende-1024

- xlm-mlm-enfr-1024

- xlm-mlm-enro-1024

- xlm-mlm-tlm-xnli15-1024

- xlm-mlm-xnli15-1024

- xlm-clm-enfr-1024

- xlm-clm-ende-1024

- xlm-mlm-17-1280

- xlm-mlm-100-1280

XLMRoberta

- xlm-roberta-base

- xlm-roberta-large

- xlm-roberta-large-finetuned-conll02-dutch

- xlm-roberta-large-finetuned-conll02-spanish

- xlm-roberta-large-finetuned-conll03-english

- xlm-roberta-large-finetuned-conll03-german

XLNet

- xlnet-base-cased

- xlnet-large-cased

Yoso

- uw-madison/yoso-4096

***checkpoint_path***: *str*

The path to local checkpoint, defaults to None.

If None, the operator will download and load pretrained model by `model_name` from Huggingface transformers.

***tokenizer***: *object*

The method to tokenize input text, defaults to None.

If None, the operator will use default tokenizer by `model_name` from Huggingface transformers.

## Interface

The operator takes a piece of text in string as input.

It loads tokenizer and pre-trained model using model name.

and then return text embedding in ndarray.

***\_\_call\_\_(txt)***

**Parameters:**

***txt***: *str*

The text in string.

**Returns**:

*numpy.ndarray*

The text embedding extracted by model.

***save_model(format='pytorch', path='default')***

Save model to local with specified format.

**Parameters:**

***format***: *str*

The format of saved model, defaults to 'pytorch'.

***path***: *str*

The path where model is saved to. By default, it will save model to the operator directory.

```python

from towhee import ops

op = ops.text_embedding.transformers(model_name='distilbert-base-cased').get_op()

op.save_model('onnx', 'test.onnx')

```

PosixPath('/Home/.towhee/operators/text-embedding/transformers/main/test.onnx')

***supported_model_names(format=None)***

Get a list of all supported model names or supported model names for specified model format.

**Parameters:**

***format***: *str*

The model format such as 'pytorch', 'torchscript'.

```python

from towhee import ops

op = ops.text_embedding.transformers().get_op()

full_list = op.supported_model_names()

onnx_list = op.supported_model_names(format='onnx')

print(f'Onnx-support/Total Models: {len(onnx_list)}/{len(full_list)}')

```

2022-12-13 16:25:15,916 - 140704500614336 - auto_transformers.py-auto_transformers:68 - WARNING: The operator is initialized without specified model.

Onnx-support/Total Models: 111/126

## Fine-tune

### Requirement

If you want to train this operator, besides dependency in requirements.txt, you need install these dependencies.

```python

! python -m pip install datasets evaluate scikit-learn

```

### Get start

We have prepared some most typical use of [finetune examples](https://github.com/towhee-io/examples/tree/main/fine_tune/6_train_language_modeling_tasks).

Simply speaking, you only need to construct an op instance and pass in some configurations to train the specified task.

```python

import towhee

bert_op = towhee.ops.text_embedding.transformers(model_name='bert-base-uncased').get_op()

data_args = {

'dataset_name': 'wikitext',

'dataset_config_name': 'wikitext-2-raw-v1',

}

training_args = {

'num_train_epochs': 3, # you can add epoch number to get a better metric.

'per_device_train_batch_size': 8,

'per_device_eval_batch_size': 8,

'do_train': True,

'do_eval': True,

'output_dir': './tmp/test-mlm',

'overwrite_output_dir': True

}

bert_op.train(task='mlm', data_args=data_args, training_args=training_args)

```

For more infos, refer to the [examples](https://github.com/towhee-io/examples/tree/main/fine_tune/6_train_language_modeling_tasks).

### Dive deep and customize your training

You can change the [training script](https://towhee.io/text-embedding/transformers/src/branch/main/train_clm_with_hf_trainer.py) in your customer way.

Or your can refer to the original [hugging face transformers training examples](https://github.com/huggingface/transformers/blob/main/examples/pytorch/language-modeling). - **option 2:**

```python

import towhee

(

towhee.dc['text'](["Hello, world."])

.text_embedding.transformers['text', 'vec'](model_name="distilbert-base-cased")

.show()

)

```

- **option 2:**

```python

import towhee

(

towhee.dc['text'](["Hello, world."])

.text_embedding.transformers['text', 'vec'](model_name="distilbert-base-cased")

.show()

)

```