# Video deduplication with Distill-and-Select

*author: Chen Zhang*

## Description

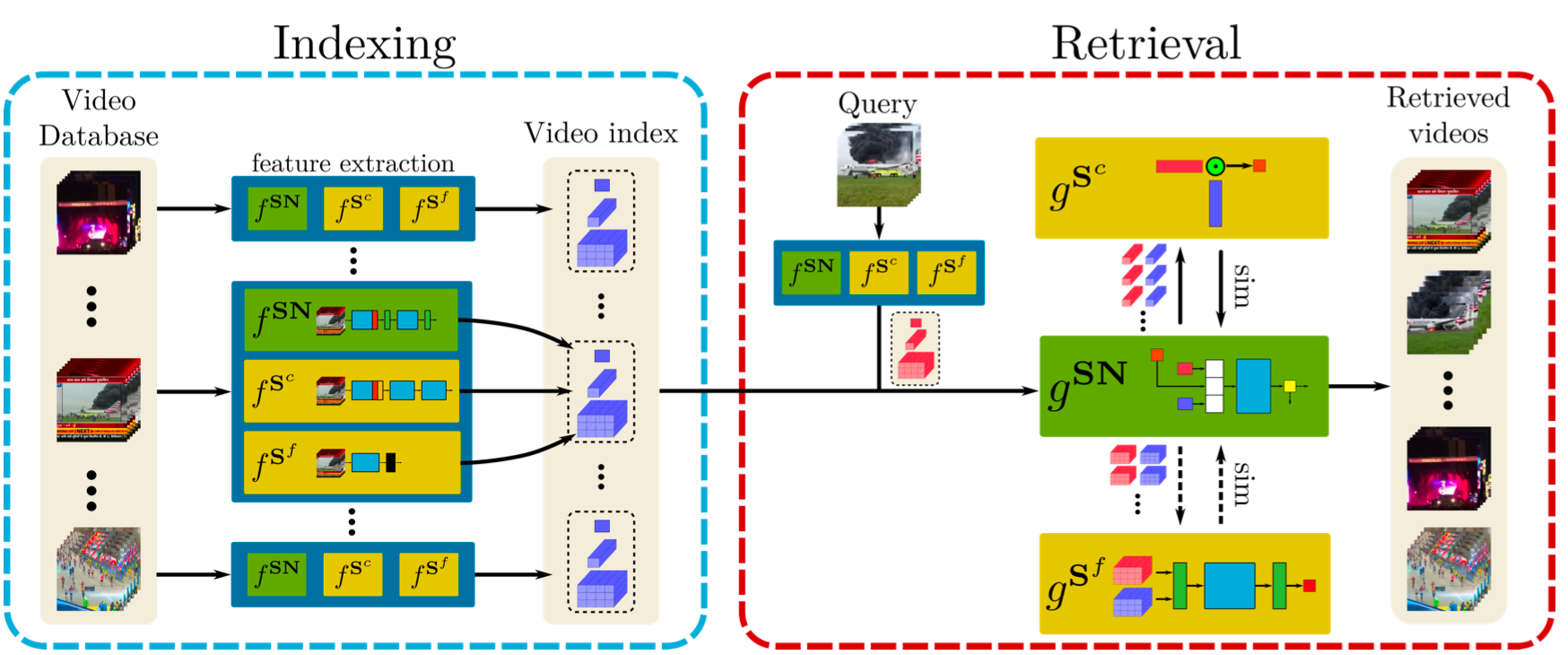

This operator performs video deduplication based on [DnS: Distill-and-Select for Efficient and Accurate Video Indexing and Retrieval](https://arxiv.org/abs/2106.13266).

Trained with knowledge distillation on large, unlabelled datasets, DnS learns: a) Student Networks at different trade-offs between retrieval performance and computational efficiency, and b) a Selection Network that, at test time, rapidly directs samples to the appropriate student to maintain both high retrieval performance and high computational efficiency.
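The routing idea can be illustrated with a simplified NumPy sketch. It assumes you already have coarse-grained similarities between one query and N database videos, plus one selector score per query-target pair. `select_and_rerank`, `fine_sim_fn` and `fraction` are hypothetical names chosen for illustration; they are not part of this operator's API, and the paper's exact selection criterion may differ in detail.

```python
import numpy as np

def select_and_rerank(coarse_sims, selector_scores, fine_sim_fn, fraction=0.1):
    """Send only the pairs the selector ranks highest to the (slower) fine-grained student.

    coarse_sims:     (N,) similarities from the coarse-grained student
    selector_scores: (N,) selector score per query-target pair
    fine_sim_fn:     hypothetical callable, database index -> fine-grained similarity
    fraction:        hypothetical share of the database re-scored by the fine-grained student
    """
    sims = np.asarray(coarse_sims, dtype=float).copy()
    scores = np.asarray(selector_scores, dtype=float)
    k = max(1, int(round(fraction * len(sims))))
    # indices of the k pairs with the highest selector scores
    chosen = np.argsort(-scores)[:k]
    for i in chosen:
        # replace the cheap coarse similarity with the expensive fine-grained one
        sims[i] = fine_sim_fn(int(i))
    # final ranking: database indices sorted by the (partly refined) similarity
    ranking = np.argsort(-sims)
    return sims, ranking
```

With `fraction=0.5` on four candidates, only the two pairs with the highest selector scores are re-scored; the remaining pairs keep their coarse-grained similarity. This is how the selector trades a small amount of accuracy for a large saving in fine-grained computation.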

## Code Example

Load a video from the path './demo_video.flv' and decode it with the ffmpeg operator.

Then use the distill_and_select operator to get the output of the specified model.

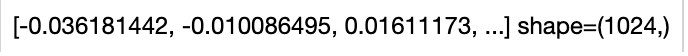

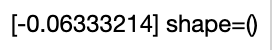

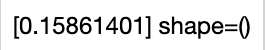

The fine-grained student models return a 3D output that preserves the temporal dimension. The coarse-grained student model returns a 1D output representing the whole video. The selector models return a scalar output.
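Two fine-grained outputs can be compared with chamfer similarity, the aggregation used by ViSiL-style fine-grained models. The minimal sketch below assumes each output has the layout `(frames, regions, dims)`; that layout, and the plain two-level chamfer aggregation without any learned layers, are assumptions. It is a simplification, not the similarity the fine-grained students compute internally.

```python
import numpy as np

def l2_normalize(x, axis=-1, eps=1e-12):
    # scale vectors to unit length so dot products become cosine similarities
    return x / np.maximum(np.linalg.norm(x, axis=axis, keepdims=True), eps)

def chamfer_video_similarity(a, b):
    """Two-level chamfer similarity between two videos.

    a: (Ta, R, D), b: (Tb, R, D); assumed (frames, regions, dims) layout.
    """
    a = l2_normalize(np.asarray(a, dtype=float))
    b = l2_normalize(np.asarray(b, dtype=float))
    # cosine similarity of every region of every frame in a vs b: (Ta, Tb, R, R)
    region_sims = np.einsum('ird,jsd->ijrs', a, b)
    # frame-to-frame: best-matching region in b for each region in a, averaged -> (Ta, Tb)
    frame_sims = region_sims.max(axis=3).mean(axis=2)
    # video-to-video: best-matching frame in b for each frame in a, averaged -> scalar
    return float(frame_sims.max(axis=1).mean())
```

Chamfer similarity is not symmetric: `chamfer_video_similarity(a, b)` asks how well every frame of `a` is covered by `b`, which suits near-duplicate detection where one video may be a clip of the other.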

*For feature_extractor model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='feature_extractor') \
    .show()
```

*For fg_att_student model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='fg_att_student') \
    .show()
```

*For fg_bin_student model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='fg_bin_student') \
    .show()
```

*For cg_student model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='cg_student') \
    .show()
```

*For selector_att model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='selector_att') \
    .show()
```

*For selector_bin model*:

```python
import towhee

# Decode the video, gather the decoded frames into a list, and run the selected model
towhee.dc(['./demo_video.flv']) \
    .video_decode.ffmpeg(start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op(func=lambda x: [y for y in x]) \
    .distill_and_select(model_name='selector_bin') \
    .show()
```

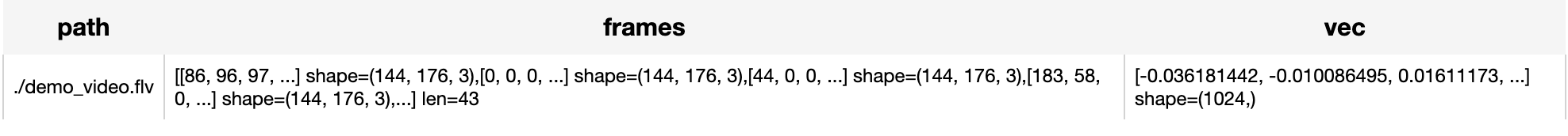

*Write the same pipeline with explicit input/output name specifications, taking the cg_student model as an example:*

```python
import towhee

# Name each stage's input and output columns explicitly: path -> frames -> vec
towhee.dc['path'](['./demo_video.flv']) \
    .video_decode.ffmpeg['path', 'frames'](start_time=0.0, end_time=1000.0, sample_type='time_step_sample', args={'time_step': 1}) \
    .runas_op['frames', 'frames'](func=lambda x: [y for y in x]) \
    .distill_and_select['frames', 'vec'](model_name='cg_student') \
    .show()
```
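Once the `vec` outputs of cg_student have been collected for a set of videos (one 1D vector per video), near-duplicates can be flagged by pairwise cosine similarity. This is a minimal brute-force sketch: `find_duplicate_pairs` and the `0.9` threshold are hypothetical choices, not part of this operator. For large collections, a vector database or an approximate nearest neighbor index would replace the O(N²) comparison.

```python
import numpy as np

def find_duplicate_pairs(embeddings, threshold=0.9):
    """Return (i, j, similarity) for every video pair with cosine similarity >= threshold.

    embeddings: (N, D) array, assumed to be one coarse-grained vector per video.
    threshold:  hypothetical cut-off; tune it on your own data.
    """
    x = np.asarray(embeddings, dtype=float)
    # L2-normalize rows so that the dot product equals cosine similarity
    x = x / np.linalg.norm(x, axis=1, keepdims=True)
    sims = x @ x.T
    pairs = []
    # upper triangle only: each unordered pair once, no self-matches
    rows, cols = np.triu_indices(len(x), k=1)
    for i, j in zip(rows, cols):
        if sims[i, j] >= threshold:
            pairs.append((int(i), int(j), float(sims[i, j])))
    return pairs
```

For example, with the vectors `[1, 0]`, `[0.99, 0.01]` and `[0, 1]`, only the first two are reported as a duplicate pair.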

## Factory Constructor

Create the operator via the following factory method:

***distill_and_select(model_name, \*\*kwargs)***

**Parameters:**

***model_name:*** *str*

Can be one of the following:

- `feature_extractor`: Feature Extractor only
- `fg_att_student`: Fine-Grained Student with attention
- `fg_bin_student`: Fine-Grained Student with binarization
- `cg_student`: Coarse-Grained Student
- `selector_att`: Selector Network with attention
- `selector_bin`: Selector Network with binarization

***model_weight_path:*** *str*

Path to the model weights. Default is `None`, in which case the original pretrained weights are downloaded and used.

***feature_extractor:*** *Union[str, nn.Module]*

`None`, `'default'`, or a PyTorch `nn.Module` instance.

`None` means the operator does not extract features from video data; instead, it takes embedding features as input.

`'default'` means the operator uses the original pretrained feature extractor weights and can take video data as input.

Alternatively, you can pass in an `nn.Module` instance to use as a custom feature extractor.

Default is `'default'`.

***device:*** *str*

The device on which to run the model, `cpu` or `cuda`.

## Interface

Get the output from your specified model.

**Parameters:**

***data:*** *List[towhee.types.VideoFrame]* or *Any*

The input type is `List[VideoFrame]` when using the default feature_extractor; otherwise, it is whatever input type your custom feature_extractor expects.

**Returns:** *numpy.ndarray*

The output of the specified model.

## More Resources

- [Vector Database Use Cases: Video Similarity Search - Zilliz](https://zilliz.com/vector-database-use-cases/video-similarity-search): Experience a 10x performance boost and unparalleled precision when your video similarity search system is powered by Zilliz Cloud.

- [How BIGO Optimizes Its Video Deduplication System with Milvus](https://zilliz.com/customers/bigo): How Milvus Transformed BIGO's Video Deduplication System for Optimal Throughput and User Experience

- [Building a Smart Video Deduplication System With Milvus - Zilliz blog](https://zilliz.com/learn/video-deduplication-system): Learn how to use the Milvus vector database to build an automated solution for identifying and filtering out duplicate video content from archive storage.

- [4 Steps to Building a Video Search System - Zilliz blog](https://zilliz.com/blog/building-video-search-system-with-milvus): Searching for videos by image with Milvus

- [Everything You Need to Know About Zero Shot Learning - Zilliz blog](https://zilliz.com/learn/what-is-zero-shot-learning): A comprehensive guide to Zero-Shot Learning, covering its methodologies, its relations with similarity search, and popular Zero-Shot Classification Models.

- [What is approximate nearest neighbor search (ANNS)?](https://zilliz.com/glossary/anns): Learn how to use Approximate nearest neighbor search (ANNS) for efficient nearest-neighbor search in large datasets.