towhee

/

text-splitter

copied

You can not select more than 25 topics

Topics must start with a letter or number, can include dashes ('-') and can be up to 35 characters long.

Readme

Files and versions

Updated 1 year ago

towhee

Text Splitter

author: shiyu22

Description

Text splitter is used to split text into chunk lists.

Refer to Text Splitters for the operation of splitting text.

Code Example

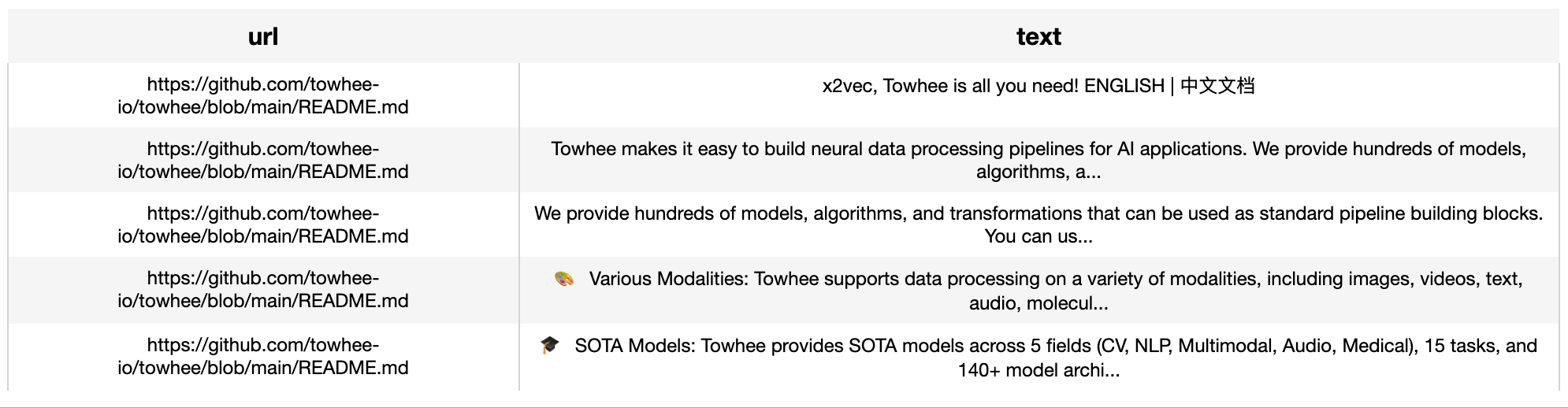

from towhee import pipe, ops, DataCollection

p = (

pipe.input('url')

.map('url', 'text', ops.text_loader())

.flat_map('text', 'text', ops.text_splitter())

.output('url', 'text')

)

res = p('https://github.com/towhee-io/towhee/blob/main/README.md')

DataCollection(res).show()

Factory Constructor

Create the operator via the following factory method

towhee.text_loader(type='RecursiveCharacter', chunk_size=300, **kwargs)

Parameters:

type: str

The type of splitter, defaults to 'RecursiveCharacter'. You can set this parameter in ['RecursiveCharacter', 'Markdown', 'PythonCode', 'Character', 'NLTK', 'Spacy', 'Tiktoken', 'HuggingFace'].

chunk_size: int

The maximum size of chunk, defaults to 300.

Interface

The operator split incoming the text and return chunks.

Parameters:

data: str

The text data.

Return: List[Document]

A list of the chunked document.

More Resources

- Experiment with 5 Chunking Strategies via LangChain for LLM - Zilliz blog: Explore the complexities of text chunking in retrieval augmented generation applications and learn how different chunking strategies impact the same piece of data.

- A Guide to Chunking Strategies for Retrieval Augmented Generation (RAG) - Zilliz blog: We explored various facets of chunking strategies within Retrieval-Augmented Generation (RAG) systems in this guide.

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- Key Strategies for Smart Retrieval Augmented Generation (RAG) - Zilliz blog: Three key strategies to get the most out of RAG: smart text chunking, iterating on different embedding models, and experimenting with different LLMs

- The guide to jina-embeddings-v2-small-en | Jina AI: jina-embeddings-v2-small-en: specialized text embedding model for long English documents; up to 8192 tokens.

- Massive Text Embedding Benchmark (MTEB): A standardized way to evaluate text embedding models across a range of tasks and languages, leading to better text embedding models for your app

- OpenAI text-embedding-3-large | Zilliz: Building GenAI applications with text-embedding-3-large model and Zilliz Cloud / Milvus

- The guide to jina-embeddings-v2-base-en | Jina AI: jina-embeddings-v2-base-en: specialized embedding model for English text and long documents; support sequences of up to 8192 tokens

- Text as Data, From Anywhere to Anywhere - Zilliz blog: Whether you prefer a no-code or minimal-code approach, Airbyte and PyAirbyte offer robust solutions for integrating both structured and unstructured data. AJ Steers' painted a good picture of the potential of these tools in revolutionizing data workflows.

|

| 14 Commits | ||

|---|---|---|---|

.gitattributes

.gitattributes

|

1.1 KiB

|

3 years ago | |

README.md

README.md

|

4.5 KiB

|

1 year ago | |

__init__.py

__init__.py

|

114 B

|

3 years ago | |

requirements.txt

requirements.txt

|

46 B

|

2 years ago | |

result.png

result.png

|

149 KiB

|

3 years ago | |

splitter.py

splitter.py

|

2.0 KiB

|

3 years ago | |