Video Alignment with Temporal Network

author: David Wang

Description

This operator compares two ordered sequences of features and detects the ranges in which features from the two sequences are similar to each other in the same temporal order.
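To make the idea concrete, here is a simplified sketch of temporal-network alignment. This is not the operator's implementation. It assumes cosine similarity between frame features and keeps the top_k most similar destination frames for each source frame as graph nodes. It then links nodes that advance by 1 to max_step frames in both sequences and uses dynamic programming to find the in-order path with the largest summed similarity. The helper names, the min_node_sim cut-off (added so unrelated frames do not stretch the path), and the [src_start, dst_start, src_end, dst_end] output layout are all hypothetical. top_k and max_step only loosely mirror tn_top_k and tn_max_step.

```python
import numpy as np


def cosine_similarity_matrix(src, dst):
    """Entry [i, j] is the cosine similarity between src[i] and dst[j]."""
    src = src / np.linalg.norm(src, axis=1, keepdims=True)
    dst = dst / np.linalg.norm(dst, axis=1, keepdims=True)
    return src @ dst.T


def best_aligned_path(sim, top_k, max_step, min_node_sim):
    """Simplified temporal-network search (illustration only, hypothetical helper).

    Nodes are (i, j) pairs where j is among the top_k most similar dst frames
    for src frame i and sim[i, j] >= min_node_sim. An edge joins (pi, pj) to
    (i, j) when both indices advance by 1..max_step frames. Dynamic programming
    finds the path with the largest summed similarity.
    Returns ([src_start, dst_start, src_end, dst_end], mean similarity) or None.
    """
    k = min(top_k, sim.shape[1])
    # Top-k dst candidates per src frame, most similar first.
    candidates = np.argsort(-sim, axis=1)[:, :k]
    nodes = [(i, int(j)) for i in range(sim.shape[0]) for j in candidates[i]
             if sim[i, j] >= min_node_sim]
    if not nodes:
        return None
    best, prev = {}, {}
    # Nodes are ordered by i, so every predecessor is scored before its successors.
    for i, j in nodes:
        best[(i, j)], prev[(i, j)] = sim[i, j], None
        for pi, pj in nodes:
            if pi >= i:
                break
            if i - pi <= max_step and 0 < j - pj <= max_step:
                score = best[(pi, pj)] + sim[i, j]
                if score > best[(i, j)]:
                    best[(i, j)], prev[(i, j)] = score, (pi, pj)
    # Walk back from the best-scoring end node to recover the path.
    node = max(best, key=best.get)
    path = [node]
    while prev[node] is not None:
        node = prev[node]
        path.append(node)
    path.reverse()
    (s0, d0), (s1, d1) = path[0], path[-1]
    mean_sim = float(np.mean([sim[i, j] for i, j in path]))
    return [s0, d0, s1, d1], mean_sim
```

Summing similarities along the path favours long in-order runs of matches, which is the intuition behind "similar in order". A real implementation also handles multiple segments and overlap filtering, which this sketch omits.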

Code Example

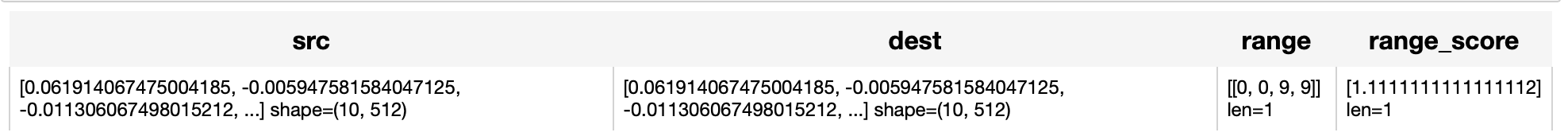

```python
from towhee.dc2 import pipe, ops, DataCollection
import numpy as np

# Simulate a video feature by 10 frames of 512-d vectors, L2-normalized per frame.
videos_embeddings = np.random.randn(10, 512)
videos_embeddings = videos_embeddings / np.linalg.norm(videos_embeddings, axis=1).reshape(10, -1)

p = (
    pipe.input('src', 'dest')
        .map(('src', 'dest'), ('range', 'range_score'), ops.video_copy_detection.temporal_network())
        .output('src', 'dest', 'range', 'range_score')
)

# Compare the sequence with itself.
DataCollection(p(videos_embeddings, videos_embeddings)).show()
```
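The example compares a sequence with itself, so every frame matches. To try a less trivial case, a hypothetical helper such as make_copy_pair below can build a destination sequence that contains a lightly perturbed copy of part of the source. The helper name, sizes, offsets and noise level are arbitrary choices for illustration only.

```python
import numpy as np


def make_copy_pair(n_src=30, n_dst=20, dim=512, src_start=10, dst_start=4,
                   length=8, noise=0.05, seed=0):
    """Hypothetical helper: return L2-normalized (src, dst) frame features where
    dst[dst_start:dst_start + length] is a noisy copy of src[src_start:src_start + length]."""
    rng = np.random.default_rng(seed)
    src = rng.standard_normal((n_src, dim))
    dst = rng.standard_normal((n_dst, dim))
    # Plant the "copied" segment with a little noise.
    dst[dst_start:dst_start + length] = (
        src[src_start:src_start + length] + noise * rng.standard_normal((length, dim))
    )
    # Same per-frame L2 normalization as in the code example.
    src /= np.linalg.norm(src, axis=1, keepdims=True)
    dst /= np.linalg.norm(dst, axis=1, keepdims=True)
    return src, dst


src, dst = make_copy_pair()
```

The two arrays can then be passed to the pipeline in place of videos_embeddings, e.g. p(src, dst). If the copy is detected, one would expect a range covering roughly frames 10 to 17 of src and 4 to 11 of dest. The actual output depends on the operator's parameters and is not verified here.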

Factory Constructor

Create the operator via the following factory method:

temporal_network(tn_max_step, tn_top_k, max_path, min_sim, min_length, max_iou)

Parameters:

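tn_max_step: int

Maximum step range in the temporal network (TN), i.e. how far apart two consecutively matched frames may be.

tn_top_k: int

Number of most similar frames (top k) selected per frame when building the TN.

max_path: int

Maximum number of loops (paths) run when detecting multiple segments.

min_sim: float

Minimum average similarity score for each aligned segment.

min_length: int

Minimum segment length.

max_iou: float

Maximum IoU allowed between overlapping segments, which are compared as bounding boxes; segments that overlap more than this are filtered out.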
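The three post-processing parameters (min_length, min_sim and max_iou) can be illustrated with a small filter. This sketch is an assumption about how they interact, not the operator's code. It treats each segment as a box [x1, y1, x2, y2] of inclusive source and destination frame indices and measures length on the shorter side. It then drops any segment whose IoU with an already kept, higher-scoring segment exceeds max_iou, a greedy, NMS-style filter. The box layout and the helper names box_iou and filter_segments are hypothetical.

```python
def box_iou(a, b):
    """IoU of two segments treated as boxes [x1, y1, x2, y2] (inclusive frame indices)."""
    ix = min(a[2], b[2]) - max(a[0], b[0]) + 1
    iy = min(a[3], b[3]) - max(a[1], b[1]) + 1
    if ix <= 0 or iy <= 0:
        return 0.0
    inter = ix * iy
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def filter_segments(segments, scores, min_length, min_sim, max_iou):
    """Drop short or weak segments, then greedily drop overlaps with better ones."""
    kept = []
    # Visit segments from highest to lowest score so the best of an overlapping group survives.
    for n in sorted(range(len(segments)), key=lambda n: scores[n], reverse=True):
        x1, y1, x2, y2 = segments[n]
        length = min(x2 - x1, y2 - y1) + 1  # shorter side, in frames (an assumption)
        if length < min_length or scores[n] < min_sim:
            continue
        if all(box_iou(segments[n], segments[m]) <= max_iou for m in kept):
            kept.append(n)
    return [segments[n] for n in kept], [scores[n] for n in kept]
```

For example, with min_length=5, min_sim=0.2 and max_iou=0.3, the segments [0, 0, 9, 9] (score 0.9) and [1, 1, 10, 10] (score 0.8) overlap with an IoU of about 0.68, so only the first is kept.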

Interface

The Temporal Network operator takes two numpy.ndarray inputs of shape (N, D), where N is the number of features (frames) and D is the feature dimension, and returns the duplicated ranges and their scores.

Parameters:

src_video_vec: numpy.ndarray

Source video feature vectors.

dst_video_vec: numpy.ndarray

Destination video feature vectors.
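The interface implies a simple input contract. As a hedged sketch, a hypothetical prepare_features helper could check it before the arrays reach the operator. It assumes the two sequences may differ in length N but must share the feature dimension D. The per-frame L2 normalization mirrors the code example; whether the operator itself requires normalized inputs is not stated here.

```python
import numpy as np


def prepare_features(src_video_vec, dst_video_vec):
    """Hypothetical helper: check the (N, D) contract and L2-normalize each frame.

    The two sequences may have different N (assumption), but they must share
    the feature dimension D so that frames can be compared.
    """
    src = np.asarray(src_video_vec, dtype=np.float32)
    dst = np.asarray(dst_video_vec, dtype=np.float32)
    if src.ndim != 2 or dst.ndim != 2:
        raise ValueError(f"expected 2-D arrays of shape (N, D), got {src.shape} and {dst.shape}")
    if src.shape[1] != dst.shape[1]:
        raise ValueError(f"feature dimensions differ: {src.shape[1]} vs {dst.shape[1]}")
    eps = 1e-12  # avoid division by zero for all-zero frames
    src = src / np.maximum(np.linalg.norm(src, axis=1, keepdims=True), eps)
    dst = dst / np.maximum(np.linalg.norm(dst, axis=1, keepdims=True), eps)
    return src, dst
```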

Returns:

aligned_ranges: List[List[int]]

The aligned (duplicated) ranges, one list per detected segment.

aligned_scores: List[float]

The similarity score of each aligned range (same length as aligned_ranges).
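Because aligned_scores has one entry per aligned range, the two return values can be zipped together. The hypothetical rank_alignments helper below keeps ranges whose score meets a threshold and sorts them best first. The layout of each inner range list is not specified in this README. The four-integer ranges in the usage lines are invented placeholders, not real operator output.

```python
def rank_alignments(aligned_ranges, aligned_scores, threshold):
    """Hypothetical helper: pair each range with its score, keep scores >= threshold, best first."""
    if len(aligned_ranges) != len(aligned_scores):
        raise ValueError("aligned_ranges and aligned_scores must have the same length")
    pairs = [(r, s) for r, s in zip(aligned_ranges, aligned_scores) if s >= threshold]
    return sorted(pairs, key=lambda pair: pair[1], reverse=True)


# Made-up values for illustration only.
ranges = [[0, 0, 9, 9], [12, 3, 20, 11]]
scores = [0.62, 0.87]
print(rank_alignments(ranges, scores, threshold=0.7))  # [([12, 3, 20, 11], 0.87)]
```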

2.1 KiB

Video Alignment with Temporal Network

author: David Wang

Description

This operator can compare two ordered sequences, then detect the range which features from each sequence are computationally similar in order.

Code Example

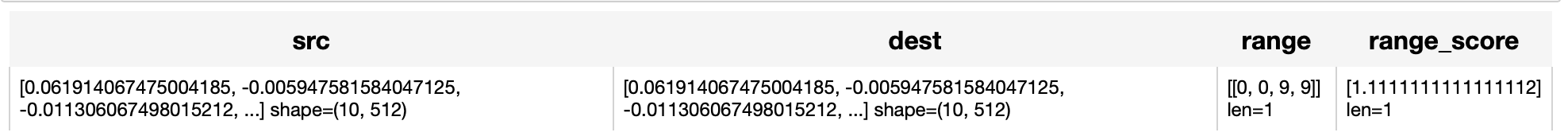

from towhee.dc2 import pipe, ops, DataCollection

import numpy as np

# simulate a video feature by 10 frames of 512d vectors.

videos_embeddings = np.random.randn(10,512)

videos_embeddings = videos_embeddings / np.linalg.norm(videos_embeddings,axis=1).reshape(10,-1)

p = (

pipe.input('src', 'dest') \

.map(('src', 'dest'), ('range', 'range_score'), ops.video_copy_detection.temporal_network()) \

.output('src', 'dest', 'range', 'range_score')

)

DataCollection(p(videos_embeddings, videos_embeddings)).show()

Factory Constructor

Create the operator via the following factory method

clip(model_name, modality) temporal_network(tn_max_step, tn_top_k, max_path, min_sim, min_length, max_iou)

Parameters:

tn_max_step: str

Max step range in TN.

tn_top_k: str

Top k frame similarity selection in TN.

max_path: str

Max loop for multiply segments detection.

min_sim: str

Min average similarity score for each aligned segment.

min_length: str

Min segment length.

max_iout: str

Max iou for filtering overlap segments (bbox).

Interface

A Temporal Network operator takes two numpy.ndarray(shape(N,D) N: number of features. D: dimension of features) and get the duplicated ranges and scores.

Parameters:

src_video_vec numpy.ndarray

Source video feature vectors.

dst_video_vec: numpy.ndarray

Destination video feature vectors.

Returns:

aligned_ranges: List[List[Int]]

The returned aligned range.

aligned_scores: List[float]

The returned similarity scores(length same as aligned_ranges).