
Video-Text Retrieval Embedding with CLIP4Clip

author: Chen Zhang

Description

This operator extracts features from video or text with CLIP4Clip, which generates embeddings for both modalities by jointly training a video encoder and a text encoder to maximize the cosine similarity between matching video-text pairs.
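To make the cosine-similarity objective concrete, here is a minimal sketch of how embeddings from the two modalities could be compared at retrieval time. The vectors are small made-up arrays rather than real CLIP4Clip outputs, and `cosine_similarity` and `rank_videos` are hypothetical helpers that are not part of this operator.

```python
import numpy as np


def cosine_similarity(query: np.ndarray, candidates: np.ndarray) -> np.ndarray:
    """Cosine similarity between one query vector and each row of `candidates`."""
    query = query / np.linalg.norm(query)
    candidates = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    return candidates @ query


def rank_videos(text_vec: np.ndarray, video_vecs: np.ndarray):
    """Return video indices sorted from most to least similar, plus the raw scores."""
    scores = cosine_similarity(text_vec, video_vecs)
    order = np.argsort(-scores)
    return order, scores


# Toy 3-dimensional embeddings (illustrative values only).
text_vec = np.array([1.0, 0.0, 1.0])
video_vecs = np.array([
    [0.0, 1.0, 0.0],  # orthogonal to the text vector
    [2.0, 0.0, 2.0],  # same direction as the text vector
    [1.0, 1.0, 0.0],  # partially aligned
])

order, scores = rank_videos(text_vec, video_vecs)
print(order)
print(scores)
```

In a real retrieval setup the `vec` outputs of the text and video pipelines would take the place of the toy arrays.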

Code Example

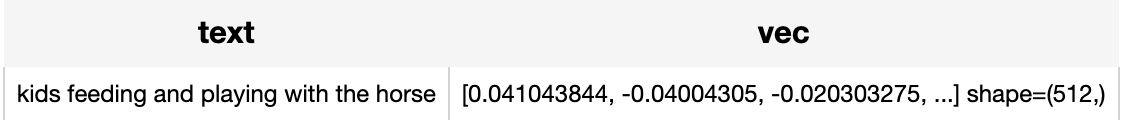

Read the text 'kids feeding and playing with the horse' to generate a text embedding.

    from towhee import pipe, ops, DataCollection

    p = (
        pipe.input('text')
        .map('text', 'vec', ops.video_text_embedding.clip4clip(model_name='clip_vit_b32', modality='text', device='cuda:1'))
        .output('text', 'vec')
    )

    DataCollection(p('kids feeding and playing with the horse')).show()

Load a video from the path './demo_video.mp4' to generate a video embedding.

    from towhee import pipe, ops, DataCollection

    p = (
        pipe.input('video_path')
        .map('video_path', 'flame_gen', ops.video_decode.ffmpeg(sample_type='uniform_temporal_subsample', args={'num_samples': 12}))
        .map('flame_gen', 'flame_list', lambda x: [y for y in x])
        .map('flame_list', 'vec', ops.video_text_embedding.clip4clip(model_name='clip_vit_b32', modality='video', device='cuda:2'))
        .output('video_path', 'flame_list', 'vec')
    )

    DataCollection(p('./demo_video.mp4')).show()
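The video pipeline above asks the decoder for 12 uniformly subsampled frames. As a rough illustration of what uniform temporal subsampling means, the sketch below picks evenly spaced frame indices from a clip. `uniform_sample_indices` is a hypothetical helper, and the exact index rule used by the `video_decode.ffmpeg` operator may differ (this is an assumption, not its documented behavior).

```python
import numpy as np


def uniform_sample_indices(total_frames: int, num_samples: int) -> list:
    """Pick `num_samples` frame indices spread evenly over [0, total_frames - 1]."""
    if total_frames <= 0 or num_samples <= 0:
        raise ValueError("total_frames and num_samples must be positive")
    # Evenly spaced positions including the first and last frame,
    # truncated to integer frame indices.
    positions = np.linspace(0, total_frames - 1, num_samples)
    return [int(p) for p in positions]


# A hypothetical 120-frame clip sampled down to 12 frames.
indices = uniform_sample_indices(total_frames=120, num_samples=12)
print(indices)

# A clip shorter than num_samples still yields 12 indices; some frames repeat.
short_clip = uniform_sample_indices(total_frames=5, num_samples=12)
print(short_clip)
```

Sampling a fixed number of frames keeps the input size to the video encoder constant regardless of clip length.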

Factory Constructor

Create the operator via the following factory method:

clip4clip(model_name, modality, weight_path)

Parameters:

model_name: str

The model name of CLIP. Supported model names:

- clip_vit_b32

modality: str

The modality (video or text) used to generate the embedding.

weight_path: str

Path to the pretrained model weights.

Interface

A video-text embedding operator takes a list of Towhee images or a string as input and generates an embedding as an ndarray.

Parameters:

data: List[towhee.types.Image] or str

The input to embed: a list of images (uniformly subsampled from a video) or a text string, depending on the specified modality.

Returns: numpy.ndarray

The embedding extracted by the model.
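For the video modality, a list of frames has to be reduced to a single vector. The CLIP4Clip paper describes several aggregation strategies, the simplest being parameter-free mean pooling over per-frame features. The sketch below illustrates that idea with random stand-in features; whether this operator uses mean pooling internally is an assumption, `mean_pool_frames` is a hypothetical helper, and the 512-dimensional size is chosen only to match the usual CLIP ViT-B/32 embedding width.

```python
import numpy as np


def mean_pool_frames(frame_embeddings: np.ndarray) -> np.ndarray:
    """Collapse (num_frames, dim) frame features into one unit-length video vector."""
    if frame_embeddings.ndim != 2:
        raise ValueError("expected an array of shape (num_frames, dim)")
    # Normalize each frame so no single frame dominates the average.
    norms = np.linalg.norm(frame_embeddings, axis=1, keepdims=True)
    normalized = frame_embeddings / norms
    # Average over the frame axis, then re-normalize the pooled vector.
    pooled = normalized.mean(axis=0)
    return pooled / np.linalg.norm(pooled)


# Stand-in features for 12 sampled frames (random values, not model outputs).
rng = np.random.default_rng(0)
frames = rng.normal(size=(12, 512))

video_vec = mean_pool_frames(frames)
print(video_vec.shape)  # (512,)
```

Because the pooled vector has unit length, its dot product with a unit-length text embedding is the cosine similarity directly.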

More Resources

- Vector Database Use Cases: Video Similarity Search - Zilliz: Experience a 10x performance boost and unparalleled precision when your video similarity search system is powered by Zilliz Cloud.

- CLIP Object Detection: Merging AI Vision with Language Understanding - Zilliz blog: CLIP Object Detection combines CLIP's text-image understanding with object detection tasks, allowing CLIP to locate and identify objects in images using texts.

- Supercharged Semantic Similarity Search in Production - Zilliz blog: Building a Blazing Fast, Highly Scalable Text-to-Image Search with CLIP embeddings and Milvus, the most advanced open-source vector database.

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar diving into text embedding models in a presentation and tutorial

- 4 Steps to Building a Video Search System - Zilliz blog: Searching for videos by image with Milvus

- From Text to Image: Fundamentals of CLIP - Zilliz blog: Search algorithms rely on semantic similarity to retrieve the most relevant results. With the CLIP model, the semantics of texts and images can be connected in a high-dimensional vector space. Read this simple introduction to see how CLIP can help you build a powerful text-to-image service.
