# Video-Text Retrieval Embedding with MDMMT

*author: Chen Zhang*

## Description

This operator extracts features for video or text with [MDMMT: Multidomain Multimodal Transformer for Video Retrieval](https://arxiv.org/pdf/2103.10699.pdf). MDMMT generates embeddings for text and video by jointly training a video encoder and a text encoder to maximize the cosine similarity between matching video-text pairs.
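For reference, the cosine similarity between a text embedding $\mathbf{t}$ and a video embedding $\mathbf{v}$ is the dot product of the two vectors after L2 normalization:

```math
\cos(\mathbf{t}, \mathbf{v}) = \frac{\mathbf{t}^\top \mathbf{v}}{\lVert \mathbf{t} \rVert_2 \, \lVert \mathbf{v} \rVert_2}
```

A higher score means the text and the video lie closer together in the shared embedding space.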

## Code Example

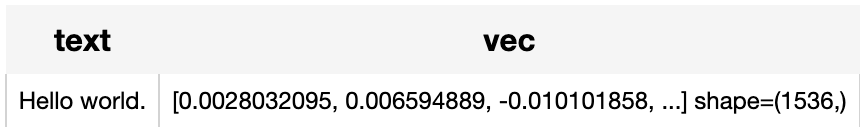

Read the text to generate a text embedding.

```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('text')
        .map('text', 'vec', ops.video_text_embedding.mdmmt(modality='text', device='cuda:0'))
        .output('text', 'vec')
)

DataCollection(p('Hello world.')).show()
```

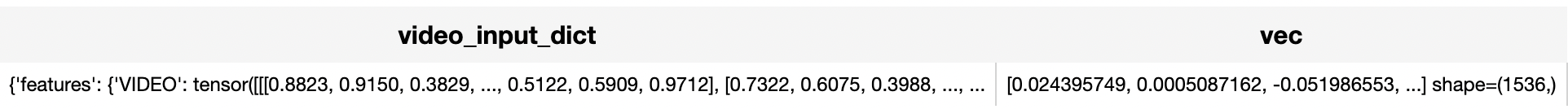

Load video embeddings extracted by different upstream expert networks (such as video, RGB, and audio experts) to generate a video embedding.

```python
import torch

from towhee import pipe, ops, DataCollection

torch.manual_seed(42)

# features are embeddings extracted from the upstream expert models:
# one (timesteps, dim) tensor per expert.
features = {
    "VIDEO": torch.rand(30, 2048),
    "CLIP": torch.rand(30, 512),
    "tf_vggish": torch.rand(30, 128),
}

# features_t is the time series of the features, usually uniformly sampled.
features_t = {
    "VIDEO": torch.linspace(1, 30, steps=30),
    "CLIP": torch.linspace(1, 30, steps=30),
    "tf_vggish": torch.linspace(1, 30, steps=30),
}

# features_ind is the mask of the features: 1 marks a valid timestep,
# 0 marks padding (here the first 25 of 30 timesteps are valid).
features_ind = {
    "VIDEO": torch.as_tensor([1] * 25 + [0] * 5),
    "CLIP": torch.as_tensor([1] * 25 + [0] * 5),
    "tf_vggish": torch.as_tensor([1] * 25 + [0] * 5),
}

video_input_dict = {"features": features, "features_t": features_t, "features_ind": features_ind}

p = (
    pipe.input('video_input_dict')
        .map('video_input_dict', 'vec', ops.video_text_embedding.mdmmt(modality='video', device='cuda:0'))
        .output('video_input_dict', 'vec')
)

DataCollection(p(video_input_dict)).show()
```
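In practice, different experts often yield different numbers of timesteps, so each expert's features need padding to a common length with a matching mask. The sketch below builds a dict with the same three keys as `video_input_dict` above. `build_video_input` is a hypothetical helper, not part of Towhee; it assumes uniformly spaced timestamps `1..max_len` (as in the example) and truncation of overly long inputs, and it returns NumPy arrays. The example above passes torch tensors, so convert each array with `torch.as_tensor` before calling the pipeline.

```python
import numpy as np


def build_video_input(expert_features, max_len):
    """Pad each expert's (T_i, D_i) features to max_len timesteps.

    Hypothetical helper (not part of the operator). Returns a dict with the
    keys "features", "features_t" and "features_ind", holding NumPy arrays.
    """
    features, features_t, features_ind = {}, {}, {}
    for name, feats in expert_features.items():
        # Assumption: inputs longer than max_len are simply truncated.
        feats = np.asarray(feats, dtype=np.float32)[:max_len]
        n, dim = feats.shape
        padded = np.zeros((max_len, dim), dtype=np.float32)
        padded[:n] = feats
        features[name] = padded
        # Uniformly spaced timestamps 1..max_len, as in the example above.
        features_t[name] = np.linspace(1, max_len, num=max_len, dtype=np.float32)
        # 1 marks a real timestep, 0 marks padding.
        features_ind[name] = np.array([1] * n + [0] * (max_len - n), dtype=np.int64)
    return {"features": features, "features_t": features_t, "features_ind": features_ind}


# Usage: 25 real timesteps per expert, padded to 30.
rng = np.random.default_rng(42)
video_input_np = build_video_input(
    {
        "VIDEO": rng.random((25, 2048)),
        "CLIP": rng.random((25, 512)),
        "tf_vggish": rng.random((25, 128)),
    },
    max_len=30,
)
```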

## Factory Constructor

Create the operator via the following factory method:

***mdmmt(modality: str)***

**Parameters:**

***modality:*** *str*

Which modality (*video* or *text*) to generate the embedding for.

***weight_path:*** *Optional[str]*

Path to the pretrained model weights.

***device:*** *Optional[str]*

Device to run the model on, such as `cpu` or `cuda:0`.

***mmtvid_params:*** *Optional[dict]*

Parameters of the video-side MMT model (mmtvid), for loading a custom model.

***mmttxt_params:*** *Optional[dict]*

Parameters of the text-side MMT model (mmttxt), for loading a custom model.

## Interface

With the *video* modality, the operator takes embeddings extracted by different upstream expert networks (such as video, RGB, and audio) and generates a video embedding.

With the *text* modality, the operator reads the input text and generates a text embedding.

**Parameters:**

***data:*** *dict* or *str*

Depending on the specified modality, either a dict of embeddings extracted by different upstream expert networks (*video*) or a text string (*text*).
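A minimal sketch of a pre-flight check that mirrors this input contract. `check_mdmmt_input` is hypothetical and not part of the operator; requiring all three sub-dicts to list the same experts is an assumption drawn from the example above, not a documented rule.

```python
from typing import Any


def check_mdmmt_input(modality: str, data: Any) -> None:
    """Hypothetical check of the documented input contract.

    Raises TypeError or ValueError on a mismatch; returns None otherwise.
    """
    if modality == "text":
        if not isinstance(data, str):
            raise TypeError("text modality expects a str")
        return
    if modality != "video":
        raise ValueError(f"unknown modality: {modality!r}")
    if not isinstance(data, dict):
        raise TypeError("video modality expects a dict")
    required = {"features", "features_t", "features_ind"}
    missing = required - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    # Assumption: every sub-dict describes the same set of expert networks.
    experts = set(data["features"])
    for key in ("features_t", "features_ind"):
        if set(data[key]) != experts:
            raise ValueError(f"{key} experts differ from features experts")


# Usage: a text query passes silently.
check_mdmmt_input("text", "Hello world.")
```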

**Returns:** *numpy.ndarray*

The embedding generated by the model.
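Because both encoders are trained toward high cosine similarity for matching pairs, text-to-video retrieval can rank stored video embeddings by their cosine similarity to a text embedding. A minimal NumPy sketch; `rank_videos` is a hypothetical helper, and it assumes the text and video embeddings share the same dimension (arrays of shape `(1, D)` are flattened first).

```python
import numpy as np


def rank_videos(text_vec, video_vecs):
    """Rank video embeddings by cosine similarity to one text embedding.

    Hypothetical helper: returns (indices, scores), best match first.
    """
    # Flatten so that (D,) and (1, D) embeddings are treated the same way.
    t = np.ravel(np.asarray(text_vec, dtype=np.float64))
    v = np.stack([np.ravel(np.asarray(x, dtype=np.float64)) for x in video_vecs])
    # L2-normalize; the dot product of unit vectors is their cosine similarity.
    t = t / np.linalg.norm(t)
    v = v / np.linalg.norm(v, axis=1, keepdims=True)
    scores = v @ t
    order = np.argsort(-scores)
    return order, scores[order]


# Usage with toy 2-D vectors (real embeddings are higher-dimensional).
order, scores = rank_videos([1.0, 0.0], [[0.0, 1.0], [1.0, 0.0], [1.0, 1.0]])
print(order)  # [1 2 0]
```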

## More Resources

- [Build Better Multimodal RAG Pipelines with FiftyOne, LlamaIndex, and Milvus - Zilliz blog](https://zilliz.com/blog/build-better-multimodal-rag-pipelines-with-fiftyone-llamaindex-and-milvus): Enhance the capabilities of multimodal systems by efficiently leveraging text and visual data for improved data retrieval and context-rich responses.

- [Vector Database Use Cases: Video Similarity Search - Zilliz](https://zilliz.com/vector-database-use-cases/video-similarity-search): Experience a 10x performance boost and unparalleled precision when your video similarity search system is powered by Zilliz Cloud.

- [Supercharged Semantic Similarity Search in Production - Zilliz blog](https://zilliz.com/learn/supercharged-semantic-similarity-search-in-production): Building a Blazing Fast, Highly Scalable Text-to-Image Search with CLIP embeddings and Milvus, the most advanced open-source vector database.

- [Tutorial: Diving into Text Embedding Models | Zilliz Webinar](https://zilliz.com/event/tutorial-text-embedding-models/success): Register for a free webinar diving into text embedding models in a presentation and tutorial

- [4 Steps to Building a Video Search System - Zilliz blog](https://zilliz.com/blog/building-video-search-system-with-milvus): Searching for videos by image with Milvus

- [Build a Multimodal Search System with Milvus - Zilliz blog](https://zilliz.com/blog/how-vector-dbs-are-revolutionizing-unstructured-data-search-ai-applications): Implementing a Multimodal Similarity Search System Using Milvus, Radient, ImageBind, and Meta-Chameleon-7b

- [Sparse and Dense Embeddings: A Guide for Effective Information Retrieval with Milvus | Zilliz Webinar](https://zilliz.com/event/sparse-and-dense-embeddings-webinar): Zilliz webinar covering what sparse and dense embeddings are and when you'd want to use one over the other.

- [Training Text Embeddings with Jina AI - Zilliz blog](https://zilliz.com/blog/training-text-embeddings-with-jina-ai): In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information.