Code & Text Embedding with CodeBert

author: Jael Gu

Description

The code search operator takes a string of source code or natural language as input and returns an embedding vector, as a numpy.ndarray, that captures the input's core semantics. The operator is implemented with pre-trained CodeBERT or GraphCodeBERT models from Hugging Face Transformers.

Code Example

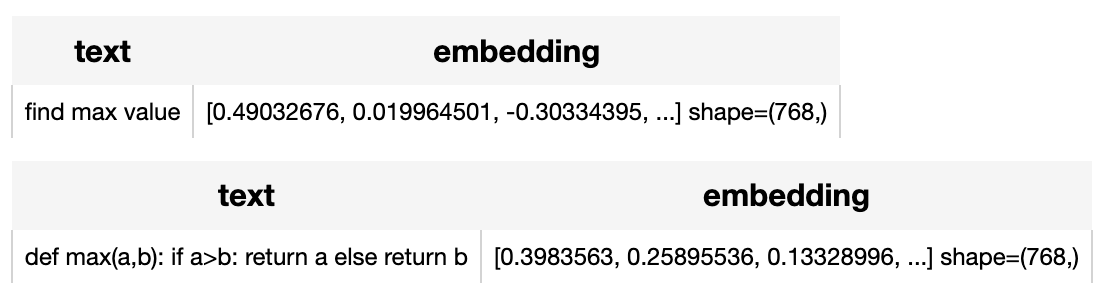

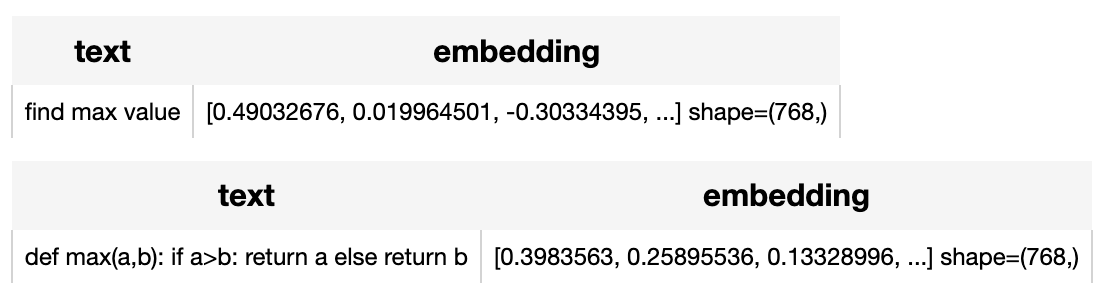

Use the pre-trained model "huggingface/CodeBERTa-small-v1" to generate embeddings for the text description "find max value" and the code snippet "def max(a,b): if a>b: return a else return b".

Write a pipeline with explicitly named inputs and outputs:

from towhee import pipe, ops, DataCollection

p = (
    pipe.input('text')
        .map('text', 'embedding', ops.code_search.codebert(model_name='huggingface/CodeBERTa-small-v1'))
        .output('text', 'embedding')
)

DataCollection(p('find max value')).show()
DataCollection(p('def max(a,b): if a>b: return a else return b')).show()

Factory Constructor

Create the operator via the following factory method:

code_search.codebert(model_name="huggingface/CodeBERTa-small-v1")

Parameters:

model_name: str

The model name as a string. The default is "huggingface/CodeBERTa-small-v1".

device: str

The device on which to run model inference. The default value is None, which uses the GPU if CUDA is available.
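The default behavior of device can be pictured roughly as follows. This is a minimal sketch rather than the operator's actual code: `resolve_device` is a hypothetical helper, and the fallback to "cpu" when CUDA or PyTorch is unavailable is an assumption.

```python
def resolve_device(device=None):
    """Return the device string to use for inference (hypothetical helper)."""
    # An explicit choice such as "cpu" or "cuda:1" is passed through unchanged.
    if device is not None:
        return device
    try:
        import torch  # imported lazily so the sketch also runs without PyTorch
    except ImportError:
        return "cpu"  # assumption: fall back to CPU when PyTorch is missing
    # Assumption: prefer the GPU only when CUDA is actually usable.
    return "cuda" if torch.cuda.is_available() else "cpu"


print(resolve_device())       # "cuda" or "cpu", depending on the machine
print(resolve_device("cpu"))  # an explicit value always wins
```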

Supported model names:

- huggingface/CodeBERTa-small-v1

- microsoft/codebert-base

- microsoft/codebert-base-mlm

- mrm8488/codebert-base-finetuned-stackoverflow-ner

- microsoft/graphcodebert-base
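Since only the names listed above are supported, it can help to validate a name before constructing the operator. The sketch below is illustrative: `SUPPORTED_MODEL_NAMES` and `check_model_name` are hypothetical names, and the tuple is simply copied from the list above. The operator's own supported_model_names() method remains the authoritative source.

```python
# Copied from the list of supported model names above.
SUPPORTED_MODEL_NAMES = (
    "huggingface/CodeBERTa-small-v1",
    "microsoft/codebert-base",
    "microsoft/codebert-base-mlm",
    "mrm8488/codebert-base-finetuned-stackoverflow-ner",
    "microsoft/graphcodebert-base",
)


def check_model_name(model_name):
    """Return model_name if it is in the supported list, else raise ValueError."""
    if model_name not in SUPPORTED_MODEL_NAMES:
        raise ValueError(
            f"Unsupported model name {model_name!r}; "
            f"expected one of: {', '.join(SUPPORTED_MODEL_NAMES)}"
        )
    return model_name


name = check_model_name("microsoft/codebert-base")
```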

Interface

The operator takes a piece of text as a string. It loads the tokenizer and the pre-trained model by model name, then returns an embedding as an ndarray.

__call__(txt)

Parameters:

txt: str

The input string, either source code or natural language.

Returns:

numpy.ndarray

The text embedding generated by the model, with shape (dim,).
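For code search, embeddings of shape (dim,) are typically compared with a similarity measure. The sketch below ranks candidates by cosine similarity using only the standard library. The vectors are made-up three-dimensional stand-ins, not real model output, and `cosine_similarity` and `rank_by_similarity` are hypothetical helpers that accept any sequence of floats, including a 1-D ndarray.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length 1-D vectors."""
    a = [float(x) for x in a]
    b = [float(x) for x in b]
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0  # convention chosen here for zero vectors
    return dot / (norm_a * norm_b)


def rank_by_similarity(query_vec, candidates):
    """Return candidate labels ordered from most to least similar to the query."""
    scored = [(cosine_similarity(query_vec, vec), label)
              for label, vec in candidates.items()]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [label for _, label in scored]


# Stand-in vectors; in practice these would come from the operator.
query = [0.9, 0.1, 0.0]
snippets = {"max_fn": [0.8, 0.2, 0.1], "sort_fn": [0.1, 0.9, 0.3]}
ranking = rank_by_similarity(query, snippets)
print(ranking)
```

With real embeddings, the query vector would be the output for a text description and the candidates the outputs for code snippets.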

save_model(format="pytorch", path="default")

Save the model locally in the specified format.

Parameters:

format: str

The format of the saved model. Defaults to "pytorch".

path: str

The path where the model is saved. By default, the model is saved to the operator directory.

supported_model_names(format=None)

Get a list of all supported model names, or only the model names supported for a specified model format.

Parameters:

format: str

The model format, such as "pytorch" or "torchscript". The default value is None, which returns all supported model names.

More Resources

- The guide to text-embedding-ada-002 model | OpenAI: text-embedding-ada-002: OpenAI's legacy text embedding model; average price/performance compared to text-embedding-3-large and text-embedding-3-small.

- The guide to voyage-code-2 | Voyage AI: voyage-code-2: Voyage AI's text embedding model optimized for code retrieval (17% better than alternatives).

- What Is Semantic Search?: Semantic search is a search technique that uses natural language processing (NLP) and machine learning (ML) to understand the context and meaning behind a user's search query.

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar diving into text embedding models in a presentation and tutorial

- Using Voyage AI's embedding models in Zilliz Cloud Pipelines - Zilliz blog: Assess the effectiveness of a RAG system implemented with various embedding models for code-related tasks.
