
# Fine-grained Image Captioning with CLIP Reward

author: David Wang

## Description

This operator uses CLIPReward to generate a caption that describes the content of a given image. CLIPReward uses CLIP as a reward function. It also uses a simple fine-tuning strategy for the CLIP text encoder that improves grammar without requiring extra text annotation. Together, these produce more descriptive and distinctive captions. This operator is adapted from j-min/CLIP-Caption-Reward.
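The idea of using CLIP as a reward can be sketched in plain Python. This is a minimal illustration, not code from j-min/CLIP-Caption-Reward. It makes several assumptions. Image and caption embeddings are taken to be already produced by CLIP's image and text encoders; toy 2-D vectors stand in for them here. The reward is CLIPScore-style, `2.5 * max(cos, 0)`. Each sampled caption is compared against a baseline caption, as in self-critical sequence training; the original training code's exact baseline may differ. All function names are hypothetical.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two embedding vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def clip_reward(image_emb: Sequence[float], caption_emb: Sequence[float],
                weight: float = 2.5) -> float:
    """CLIPScore-style reward: weight * max(cos(image, caption), 0).

    The 2.5 weight is an assumption borrowed from CLIPScore, not read from this operator.
    """
    return weight * max(cosine_similarity(image_emb, caption_emb), 0.0)


def self_critical_advantages(image_emb: Sequence[float],
                             sampled_caption_embs: List[Sequence[float]],
                             baseline_caption_emb: Sequence[float]) -> List[float]:
    """Reward of each sampled caption minus the reward of a baseline caption.

    In REINFORCE-style training, each sample's log-probability would be scaled
    by its advantage, so captions that CLIP rates above the baseline are reinforced.
    """
    baseline = clip_reward(image_emb, baseline_caption_emb)
    return [clip_reward(image_emb, emb) - baseline for emb in sampled_caption_embs]


# Toy stand-ins for CLIP embeddings (hypothetical values).
image_emb = [1.0, 0.0]
sampled = [[1.0, 0.0], [0.0, 1.0]]  # one well-aligned caption, one unrelated caption
greedy = [1.0, 1.0]                 # baseline caption, e.g. from greedy decoding
print(self_critical_advantages(image_emb, sampled, greedy))
```

The first sample, which is aligned with the image embedding, receives a positive advantage. The unrelated sample receives a negative one.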

## Code Example

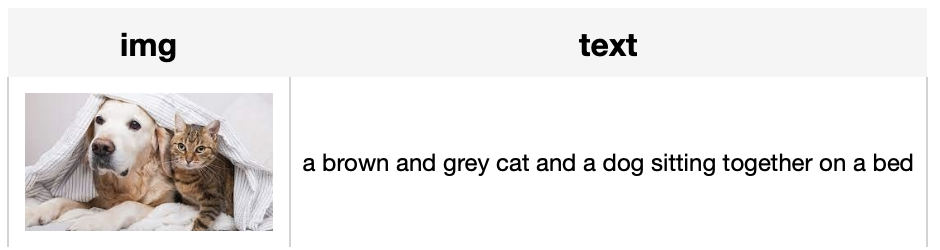

Load an image from path './animals.jpg' to generate the caption.

Write a pipeline with explicit input/output name specifications:

```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('url')
        # Decode the image file at 'url' into an RGB image.
        .map('url', 'img', ops.image_decode.cv2_rgb())
        # Generate a caption for the decoded image with CLIPReward.
        .map('img', 'text', ops.image_captioning.clip_caption_reward(model_name='clipRN50_clips_grammar'))
        .output('img', 'text')
)

DataCollection(p('./animals.jpg')).show()
```

## Factory Constructor

Create the operator via the following factory method:

`clip_caption_reward(model_name)`

Parameters:

`model_name`: `str`

The model name of CLIPReward. Supported model names:

- clipRN50_clips_grammar
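Only one `model_name` is currently listed, so code that wraps the factory might validate the name before any expensive weight loading happens. This is a minimal sketch. `SUPPORTED_MODELS` and `check_model_name` are hypothetical helpers, not part of the operator's code, and the operator's own handling of unsupported names may differ.

```python
from typing import Tuple

# Hypothetical: mirrors the supported names listed above.
SUPPORTED_MODELS: Tuple[str, ...] = ("clipRN50_clips_grammar",)


def check_model_name(model_name: str) -> str:
    """Return model_name unchanged if it is supported, otherwise raise ValueError."""
    if model_name not in SUPPORTED_MODELS:
        supported = ", ".join(SUPPORTED_MODELS)
        raise ValueError(
            f"Unsupported model_name {model_name!r}; expected one of: {supported}"
        )
    return model_name


# Usage: validate first, then pass the name on to the factory.
name = check_model_name("clipRN50_clips_grammar")
```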

## Interface

An image captioning operator takes a Towhee image as input and generates the corresponding caption.

Parameters:

`img`: `towhee.types.Image` (a subclass of `numpy.ndarray`)

The image to generate a caption for.

Returns: `str`

The caption generated by the model.
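The interface above (one image in, one `str` caption out) can be expressed as a structural type. This is a minimal sketch that assumes Python 3.8+ for `typing.Protocol`. `ImageCaptioner`, `ShapeCaptioner`, and `caption_all` are hypothetical names. `ShapeCaptioner` is a dummy stand-in that only reports image dimensions; it is not the CLIPReward model.

```python
from typing import Any, List, Protocol


class ImageCaptioner(Protocol):
    """Structural type for an image-captioning operator: one image in, one caption out."""

    def __call__(self, img: Any) -> str:
        ...


class ShapeCaptioner:
    """Hypothetical stand-in that 'captions' an image by reporting its height and width.

    Works for anything indexable as img[row][col], including a
    numpy.ndarray of shape (H, W, 3).
    """

    def __call__(self, img: Any) -> str:
        height = len(img)
        width = len(img[0]) if height else 0
        return f"an image of size {height}x{width}"


def caption_all(op: ImageCaptioner, images: List[Any]) -> List[str]:
    """Apply a captioning operator to each image and collect the captions."""
    return [op(img) for img in images]


img = [[(0, 0, 0)] * 4 for _ in range(2)]  # 2 rows x 4 RGB pixels
print(caption_all(ShapeCaptioner(), [img]))  # prints ['an image of size 2x4']
```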

# More Resources

- [CLIP Object Detection: Merging AI Vision with Language Understanding - Zilliz blog](https://zilliz.com/learn/CLIP-object-detection-merge-AI-vision-with-language-understanding): CLIP Object Detection combines CLIP's text-image understanding with object detection tasks, allowing CLIP to locate and identify objects in images using texts.

- Supercharged Semantic Similarity Search in Production - Zilliz blog: Building a Blazing Fast, Highly Scalable Text-to-Image Search with CLIP embeddings and Milvus, the most advanced open-source vector database.

- The guide to clip-vit-base-patch32 | OpenAI: clip-vit-base-patch32: a CLIP multimodal model variant by OpenAI for image and text embedding.

- Exploring OpenAI CLIP: The Future of Multi-Modal AI Learning - Zilliz blog: Multimodal AI learning can get input and understand information from various modalities like text, images, and audio together, leading to a deeper understanding of the world. Learn more about OpenAI's CLIP (Contrastive Language-Image Pre-training), a popular multimodal model for text and image data.

- An LLM Powered Text to Image Prompt Generation with Milvus - Zilliz blog: An interesting LLM project powered by the Milvus vector database for generating more efficient text-to-image prompts.

- From Text to Image: Fundamentals of CLIP - Zilliz blog: Search algorithms rely on semantic similarity to retrieve the most relevant results. With the CLIP model, the semantics of texts and images can be connected in a high-dimensional vector space. Read this simple introduction to see how CLIP can help you build a powerful text-to-image service.
