Image Embedding with Timm

author: Jael Gu, Filip

Description

An image embedding operator generates a vector given an image. This operator extracts features from an image using pre-trained models provided by Timm. Timm is a deep-learning library developed by Ross Wightman that maintains state-of-the-art (SOTA) deep-learning models and tools for computer vision.

Code Example

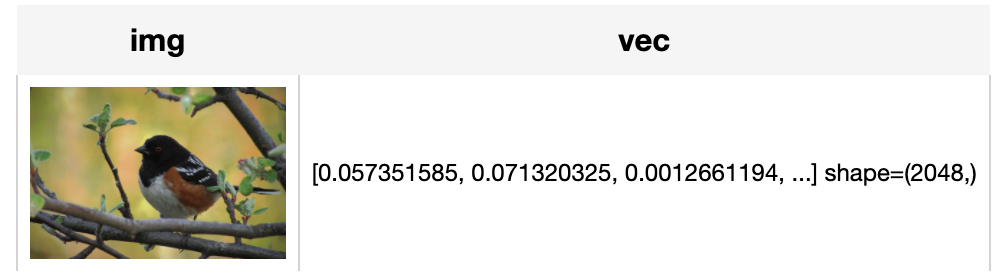

Load an image from the path './towhee.jpeg' and use the pre-trained ResNet50 model ('resnet50') to generate an image embedding.

Write a pipeline with explicitly named inputs and outputs:

from towhee import pipe, ops, DataCollection

p = (
    pipe.input('path')
        .map('path', 'img', ops.image_decode())
        .map('img', 'vec', ops.image_embedding.timm(model_name='resnet50'))
        .output('img', 'vec')
)

DataCollection(p('towhee.jpeg')).show()

Factory Constructor

Create the operator via the following factory method:

image_embedding.timm(model_name='resnet34', num_classes=1000, skip_preprocess=False)

Parameters:

model_name: str

The name of the model, as a string. The default value is "resnet34". Refer to the Timm docs for a full list of supported models.

num_classes: int

The number of classes. The default value is 1000. The appropriate value depends on the model and the dataset.

skip_preprocess: bool

The flag that controls whether to skip image preprocessing. The default value is False. If set to True, the operator skips the image preprocessing steps (transforms). In this case, the input image data must be prepared in advance so that it properly fits the model.
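As a rough illustration of what "prepared in advance" can involve, the sketch below applies the per-channel normalization commonly used for ImageNet-trained models. It is not the operator's own transform: the function name `normalize_hwc_to_chw` is hypothetical, the mean and standard deviation are the usual ImageNet defaults and can differ per model, and resizing or cropping is left out. The exact input type the operator expects when `skip_preprocess=True` is not described here, so treat this only as a picture of the kind of work the skipped step normally does.

```python
# Assumption: the common ImageNet channel statistics; individual models may use others.
IMAGENET_MEAN = (0.485, 0.456, 0.406)
IMAGENET_STD = (0.229, 0.224, 0.225)


def normalize_hwc_to_chw(image, mean=IMAGENET_MEAN, std=IMAGENET_STD):
    """Convert an HxWx3 image of 0-255 values into a 3xHxW nested list of normalized floats.

    Hypothetical helper for illustration only; it does not resize or crop.
    """
    height, width = len(image), len(image[0])
    planes = []
    for c in range(3):
        # Scale to [0, 1], then subtract the channel mean and divide by the channel std.
        plane = [
            [(image[y][x][c] / 255.0 - mean[c]) / std[c] for x in range(width)]
            for y in range(height)
        ]
        planes.append(plane)
    return planes


# A tiny 1x2 RGB image, just to exercise the function.
tiny_image = [[(255, 0, 128), (0, 255, 128)]]
normalized = normalize_hwc_to_chw(tiny_image)
```

In practice the same arithmetic would usually be done on arrays or tensors rather than nested lists; the list version is used here only to keep the sketch dependency-free.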

Interface

An image embedding operator takes a towhee image as input. It uses the pre-trained model specified by the model name to generate an image embedding as an ndarray.

Parameters:

data: towhee.types.Image

The decoded image data as a towhee Image (a subclass of numpy.ndarray).

Returns: numpy.ndarray

An image embedding generated by the model, with shape (feature_dim,).
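Because each embedding is a flat vector of shape (feature_dim,), two images can be compared by comparing their vectors. The sketch below computes cosine similarity in plain Python; the function name `cosine_similarity` and the short sample vectors are illustrative assumptions, not part of the operator, and real embeddings would be much longer.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine similarity of two equal-length vectors (lists or 1-D arrays)."""
    if len(a) != len(b):
        raise ValueError('vectors must have the same length')
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        # A zero vector has no direction; report no similarity.
        return 0.0
    return dot / (norm_a * norm_b)


# Stand-ins for embeddings; these are made-up values, not model output.
vec_a = [1.0, 2.0, 3.0]
vec_b = [2.0, 4.0, 6.0]   # same direction as vec_a
vec_c = [-3.0, 0.0, 1.0]  # orthogonal to vec_a

same_direction = cosine_similarity(vec_a, vec_b)
orthogonal = cosine_similarity(vec_a, vec_c)
```

Values close to 1 indicate vectors pointing in nearly the same direction, and values near 0 indicate unrelated directions. The function iterates element by element, so it should also accept one-dimensional numpy arrays.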

save_model(format='pytorch', path='default')

Save the model locally in the specified format.

Parameters:

format: str

The format of the saved model, defaults to 'pytorch'.

path: str

The path where the model is saved. By default, the model is saved to the operator directory.

from towhee import ops

op = ops.image_embedding.timm(model_name='resnet50').get_op()
op.save_model('onnx', 'test.onnx')

supported_model_names(format=None)

Get a list of all supported model names, or the supported model names for a specified model format.

Parameters:

format: str

The model format, such as 'pytorch' or 'torchscript'.

from towhee import ops

op = ops.image_embedding.timm().get_op()
full_list = op.supported_model_names()
onnx_list = op.supported_model_names(format='onnx')
print(f'Onnx-support/Total Models: {len(onnx_list)}/{len(full_list)}')

2022-12-19 16:32:37,933 - 140704422594752 - timm_image.py-timm_image:88 - WARNING: The operator is initialized without specified model.

Onnx-support/Total Models: 715/759
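With several hundred names returned, it can help to narrow the list to one model family. The sketch below assumes the result is a plain list of strings, as the `len()` calls above suggest, and filters it with a shell-style pattern. The helper `filter_model_names` is hypothetical, and the sample names are a handful taken from the Model List in this README rather than real output of `supported_model_names`.

```python
from fnmatch import fnmatchcase


def filter_model_names(names, pattern):
    """Return the names that match a shell-style pattern, sorted alphabetically."""
    return sorted(name for name in names if fnmatchcase(name, pattern))


# A few names from the Model List; in practice this would be the operator's full list.
sample_names = [
    'resnet50',
    'densenet121',
    'resnet18',
    'regnetx_002',
    'resnest50d',
    'resnet34',
]

resnets = filter_model_names(sample_names, 'resnet*')
print(resnets)
```

Note that 'resnest50d' is not matched by 'resnet*', since ResNeSt is a separate family with a different prefix.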

Fine-tune

To fine-tune this operator, please refer to this example guide.

Towhee Serve

The following models are supported by towhee.serve.

Model List

| models | models | models |
|---|---|---|
| adv_inception_v3 | bat_resnext26ts | beit_base_patch16_224 |
| beit_base_patch16_224_in22k | beit_base_patch16_384 | beit_large_patch16_224 |
| beit_large_patch16_224_in22k | beit_large_patch16_384 | beit_large_patch16_512 |
| botnet26t_256 | cait_m36_384 | cait_m48_448 |
| cait_s24_224 | cait_s24_384 | cait_s36_384 |
| cait_xs24_384 | cait_xxs24_224 | cait_xxs24_384 |
| cait_xxs36_224 | cait_xxs36_384 | coat_lite_mini |
| coat_lite_small | coat_lite_tiny | convit_base |
| convit_small | convit_tiny | convmixer_768_32 |
| convmixer_1024_20_ks9_p14 | convmixer_1536_20 | convnext_base |
| convnext_base_384_in22ft1k | convnext_base_in22ft1k | convnext_base_in22k |
| convnext_large | convnext_large_384_in22ft1k | convnext_large_in22ft1k |
| convnext_large_in22k | convnext_small | convnext_small_384_in22ft1k |
| convnext_small_in22ft1k | convnext_small_in22k | convnext_tiny |
| convnext_tiny_384_in22ft1k | convnext_tiny_hnf | convnext_tiny_in22ft1k |
| convnext_tiny_in22k | convnext_xlarge_384_in22ft1k | convnext_xlarge_in22ft1k |
| convnext_xlarge_in22k | cs3darknet_focus_l | cs3darknet_focus_m |
| cs3darknet_l | cs3darknet_m | cspdarknet53 |
| cspresnet50 | cspresnext50 | darknet53 |
| deit3_base_patch16_224 | deit3_base_patch16_224_in21ft1k | deit3_base_patch16_384 |
| deit3_base_patch16_384_in21ft1k | deit3_huge_patch14_224 | deit3_huge_patch14_224_in21ft1k |
| deit3_large_patch16_224 | deit3_large_patch16_224_in21ft1k | deit3_large_patch16_384 |
| deit3_large_patch16_384_in21ft1k | deit3_small_patch16_224 | deit3_small_patch16_224_in21ft1k |
| deit3_small_patch16_384 | deit3_small_patch16_384_in21ft1k | deit_base_distilled_patch16_224 |
| deit_base_distilled_patch16_384 | deit_base_patch16_224 | deit_base_patch16_384 |
| deit_small_distilled_patch16_224 | deit_small_patch16_224 | deit_tiny_distilled_patch16_224 |
| deit_tiny_patch16_224 | densenet121 | densenet161 |
| densenet169 | densenet201 | densenetblur121d |
| dla34 | dla46_c | dla46x_c |
| dla60 | dla60_res2net | dla60_res2next |
| dla60x | dla60x_c | dla102 |
| dla102x | dla102x2 | dla169 |
| dm_nfnet_f0 | dm_nfnet_f1 | dm_nfnet_f2 |
| dm_nfnet_f3 | dm_nfnet_f4 | dm_nfnet_f5 |
| dm_nfnet_f6 | dpn68 | dpn68b |
| dpn92 | dpn98 | dpn107 |
| dpn131 | eca_botnext26ts_256 | eca_halonext26ts |
| eca_nfnet_l0 | eca_nfnet_l1 | eca_nfnet_l2 |
| eca_resnet33ts | eca_resnext26ts | ecaresnet26t |
| ecaresnet50t | ecaresnet269d | edgenext_small |
| edgenext_small_rw | edgenext_x_small | edgenext_xx_small |
| efficientnet_b0 | efficientnet_b1 | efficientnet_b2 |
| efficientnet_b3 | efficientnet_b4 | efficientnet_el |
| efficientnet_el_pruned | efficientnet_em | efficientnet_es |
| efficientnet_es_pruned | efficientnet_lite0 | efficientnetv2_rw_m |
| efficientnetv2_rw_s | efficientnetv2_rw_t | ens_adv_inception_resnet_v2 |
| ese_vovnet19b_dw | ese_vovnet39b | fbnetc_100 |
| fbnetv3_b | fbnetv3_d | fbnetv3_g |
| gc_efficientnetv2_rw_t | gcresnet33ts | gcresnet50t |
| gcresnext26ts | gcresnext50ts | gernet_l |
| gernet_m | gernet_s | ghostnet_100 |
| gluon_inception_v3 | gluon_resnet18_v1b | gluon_resnet34_v1b |
| gluon_resnet50_v1b | gluon_resnet50_v1c | gluon_resnet50_v1d |
| gluon_resnet50_v1s | gluon_resnet101_v1b | gluon_resnet101_v1c |
| gluon_resnet101_v1d | gluon_resnet101_v1s | gluon_resnet152_v1b |
| gluon_resnet152_v1c | gluon_resnet152_v1d | gluon_resnet152_v1s |
| gluon_resnext50_32x4d | gluon_resnext101_32x4d | gluon_resnext101_64x4d |
| gluon_senet154 | gluon_seresnext50_32x4d | gluon_seresnext101_32x4d |
| gluon_seresnext101_64x4d | gluon_xception65 | gmlp_s16_224 |
| halo2botnet50ts_256 | halonet26t | halonet50ts |
| haloregnetz_b | hardcorenas_a | hardcorenas_b |
| hardcorenas_c | hardcorenas_d | hardcorenas_e |
| hrnet_w18 | hrnet_w18_small | hrnet_w18_small_v2 |
| hrnet_w30 | hrnet_w32 | hrnet_w40 |
| hrnet_w44 | hrnet_w48 | hrnet_w64 |
| ig_resnext101_32x8d | ig_resnext101_32x16d | ig_resnext101_32x32d |
| ig_resnext101_32x48d | inception_resnet_v2 | inception_v3 |
| inception_v4 | jx_nest_base | jx_nest_small |
| jx_nest_tiny | lambda_resnet26rpt_256 | lambda_resnet26t |
| lambda_resnet50ts | lamhalobotnet50ts_256 | lcnet_050 |
| lcnet_075 | lcnet_100 | legacy_seresnet18 |
| legacy_seresnet34 | legacy_seresnet50 | legacy_seresnet101 |
| legacy_seresnet152 | legacy_seresnext26_32x4d | levit_128 |
| levit_128s | levit_192 | levit_256 |
| levit_384 | mixer_b16_224 | mixer_b16_224_in21k |
| mixer_b16_224_miil | mixer_b16_224_miil_in21k | mixer_l16_224 |
| mixer_l16_224_in21k | mixnet_l | mixnet_m |
| mixnet_s | mixnet_xl | mnasnet_100 |
| mnasnet_small | mobilenetv2_050 | mobilenetv2_100 |
| mobilenetv2_110d | mobilenetv2_120d | mobilenetv2_140 |
| mobilenetv3_large_100 | mobilenetv3_large_100_miil | mobilenetv3_large_100_miil_in21k |
| mobilenetv3_rw | mobilenetv3_small_050 | mobilenetv3_small_075 |
| mobilenetv3_small_100 | mobilevit_s | mobilevit_xs |
| mobilevit_xxs | mobilevitv2_050 | mobilevitv2_075 |
| mobilevitv2_100 | mobilevitv2_125 | mobilevitv2_150 |
| mobilevitv2_150_384_in22ft1k | mobilevitv2_150_in22ft1k | mobilevitv2_175 |
| mobilevitv2_175_384_in22ft1k | mobilevitv2_175_in22ft1k | mobilevitv2_200 |
| mobilevitv2_200_384_in22ft1k | mobilevitv2_200_in22ft1k | nf_regnet_b1 |
| nf_resnet50 | nfnet_l0 | pit_b_224 |
| pit_b_distilled_224 | pit_s_224 | pit_s_distilled_224 |
| pit_ti_224 | pit_ti_distilled_224 | pit_xs_224 |
| pit_xs_distilled_224 | pnasnet5large | poolformer_m36 |
| poolformer_m48 | poolformer_s12 | poolformer_s24 |
| poolformer_s36 | regnetv_040 | regnetv_064 |
| regnetx_002 | regnetx_004 | regnetx_006 |
| regnetx_008 | regnetx_016 | regnetx_032 |
| regnetx_040 | regnetx_064 | regnetx_080 |
| regnetx_120 | regnetx_160 | regnetx_320 |
| regnety_002 | regnety_004 | regnety_006 |
| regnety_008 | regnety_016 | regnety_032 |
| regnety_040 | regnety_064 | regnety_080 |
| regnety_120 | regnety_160 | regnety_320 |
| regnetz_040 | regnetz_040h | regnetz_b16 |
| regnetz_c16 | regnetz_c16_evos | regnetz_d8 |
| regnetz_d8_evos | regnetz_d32 | regnetz_e8 |
| repvgg_a2 | repvgg_b0 | repvgg_b1 |
| repvgg_b1g4 | repvgg_b2 | repvgg_b2g4 |
| repvgg_b3 | repvgg_b3g4 | res2net50_14w_8s |
| res2net50_26w_4s | res2net50_26w_6s | res2net50_26w_8s |
| res2net50_48w_2s | res2net101_26w_4s | res2next50 |
| resmlp_12_224 | resmlp_12_224_dino | resmlp_12_distilled_224 |
| resmlp_24_224 | resmlp_24_224_dino | resmlp_24_distilled_224 |
| resmlp_36_224 | resmlp_36_distilled_224 | resmlp_big_24_224 |
| resmlp_big_24_224_in22ft1k | resnest14d | resnest26d |
| resnest50d | resnest50d_1s4x24d | resnest50d_4s2x40d |
| resnest101e | resnest200e | resnet10t |
| resnet14t | resnet18 | resnet18d |
| resnet26 | resnet26d | resnet26t |
| resnet32ts | resnet33ts | resnet34 |
| resnet34d | resnet50 | resnet50_gn |
| resnet50d | resnet51q | resnet61q |
| resnet101 | resnet101d | resnet152 |
| resnet152d | resnet200d | resnetaa50 |
| resnetblur50 | resnetrs50 | |

More Resources

- How to Get the Right Vector Embeddings - Zilliz blog: A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- Embedding Inference at Scale for RAG Applications with Ray Data and Milvus - Zilliz blog: This blog shows how to use Ray Data and the Milvus Bulk Import feature to significantly speed up vector generation and efficiently batch-load the vectors into a vector database.

- Image Embeddings for Enhanced Image Search - Zilliz blog: Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- Enhancing Information Retrieval with Sparse Embeddings | Zilliz Learn - Zilliz blog: Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database.

- An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog: In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.
