
Updated 1 year ago

sentence-embedding

Sentence Embedding with Sentence Transformers

author: Jael Gu

Description

This operator takes a sentence or a list of sentences (strings) as input. It generates an embedding vector as a numpy.ndarray for each sentence, capturing the sentence's core semantic content. The operator is implemented with pre-trained models from Sentence Transformers.

Code Example

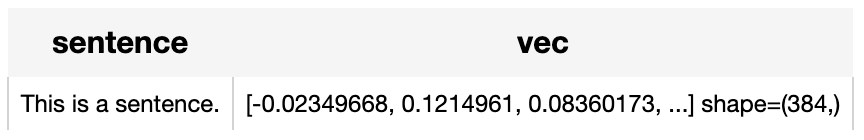

Use the pre-trained model "all-MiniLM-L12-v2" to generate a text embedding for the sentence "This is a sentence.".

Write a pipeline with explicitly named inputs and outputs:

```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('sentence')
        .map('sentence', 'vec', ops.sentence_embedding.sbert(model_name='all-MiniLM-L12-v2'))
        .output('sentence', 'vec')
)

DataCollection(p('This is a sentence.')).show()
```
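The vectors returned by the pipeline are commonly compared with cosine similarity. The sketch below assumes two embeddings given as 1-D NumPy arrays of the same shape `(dim,)`, as the operator returns; the helper name `cosine_similarity` is our own and is not part of Towhee.

```python
import numpy as np


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Return the cosine of the angle between two 1-D vectors of equal shape."""
    a = np.asarray(a, dtype=np.float64)
    b = np.asarray(b, dtype=np.float64)
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return float(np.dot(a, b) / denom)


# Toy 3-dim vectors standing in for real embeddings of shape (dim,).
v1 = np.array([1.0, 0.0, 1.0])
v2 = np.array([2.0, 0.0, 2.0])   # same direction as v1
v3 = np.array([0.0, 1.0, 0.0])   # orthogonal to v1
print(cosine_similarity(v1, v3))  # 0.0
```

Values close to 1 indicate sentences with similar meaning; values near 0 indicate unrelated content.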

Factory Constructor

Create the operator via the following factory method:

```python
sentence_embedding.sbert(model_name='all-MiniLM-L12-v2')
```

Parameters:

model_name: str

The model name as a string. For the supported model names, refer to the SBert documentation.

Note that only the models listed by `supported_model_names` are tested.

You can refer to the Towhee Pipeline for model performance.

device: str

The device on which to run the model; defaults to None. If None, 'cuda' is used automatically when CUDA is available.
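The default device rule can be sketched as a small pure function. `resolve_device` is a hypothetical helper, not part of the operator, and falling back to `'cpu'` when CUDA is unavailable is an assumption, since the description above only covers the CUDA case. In real code, `cuda_available` would typically come from `torch.cuda.is_available()`.

```python
from typing import Optional


def resolve_device(device: Optional[str], cuda_available: bool) -> str:
    """Pick a device following the documented default rule.

    An explicitly passed device is returned unchanged. When device is None,
    'cuda' is chosen if CUDA is available; the 'cpu' fallback is an assumption.
    """
    if device is not None:
        return device
    return "cuda" if cuda_available else "cpu"


print(resolve_device(None, cuda_available=True))      # cuda
print(resolve_device(None, cuda_available=False))     # cpu
print(resolve_device("cuda:1", cuda_available=True))  # cuda:1
```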

Interface

The operator takes a sentence or a list of sentences (strings) as input. It loads the tokenizer and pre-trained model specified by the model name, and then returns the text embedding as a numpy.ndarray.

`__call__(txt)`

Parameters:

txt: Union[List[str], str]

A sentence, or a list of sentences, as strings.

Returns:

Union[List[numpy.ndarray], numpy.ndarray]

If the input is a single sentence, it returns an embedding vector of shape (dim,) as a numpy.ndarray. If the input is a list of sentences, it returns a list of embedding vectors, each a numpy.ndarray of shape (dim,).
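The documented return contract (one vector for a string, a list of vectors for a list) can be illustrated with a small dispatch function. `embed` and `fake_encode` are hypothetical names, and `fake_encode` is a toy stand-in for the model that returns an `(n, dim)` array; the real operator's internals may differ.

```python
from typing import Callable, List, Union

import numpy as np


def embed(
    txt: Union[List[str], str],
    encode_batch: Callable[[List[str]], np.ndarray],
) -> Union[List[np.ndarray], np.ndarray]:
    """Mirror the documented return contract of the operator's __call__.

    encode_batch stands in for the model: it maps n sentences to an (n, dim) array.
    """
    if isinstance(txt, str):
        return encode_batch([txt])[0]     # single vector of shape (dim,)
    return list(encode_batch(list(txt)))  # list of (dim,) vectors


def fake_encode(sentences: List[str]) -> np.ndarray:
    # Hypothetical 4-dim "model": each row is filled with the sentence length.
    return np.array([[float(len(s))] * 4 for s in sentences])


single = embed("This is a sentence.", fake_encode)
batch = embed(["a", "bb"], fake_encode)
print(single.shape)              # (4,)
print([v.shape for v in batch])  # [(4,), (4,)]
```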

supported_model_names(format=None)

Get a list of all supported model names, or only the model names supported for a specified model format.

Parameters:

format: str

The model format, such as 'pytorch'; defaults to None. If None, the full list of supported model names is returned.

```python
from towhee import ops

op = ops.sentence_embedding.sbert().get_op()
full_list = op.supported_model_names()
```
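The documented filtering behaviour of `supported_model_names(format=None)` can be sketched as below. This is not the operator's code: the registry `MODEL_FORMATS`, its placeholder model names, the `'onnx'` key, and returning an empty list for an unknown format are all assumptions made for illustration.

```python
from typing import Dict, List, Optional

# Hypothetical registry mapping a model format to model names (placeholders).
MODEL_FORMATS: Dict[str, List[str]] = {
    "pytorch": ["model-a", "model-b", "model-c"],
    "onnx": ["model-a"],
}


def supported_model_names(format: Optional[str] = None) -> List[str]:
    """Return every known model name, or only those for one format."""
    if format is None:
        # Union over all formats, de-duplicated in first-seen order.
        names: List[str] = []
        for models in MODEL_FORMATS.values():
            for name in models:
                if name not in names:
                    names.append(name)
        return names
    # Unknown formats yield an empty list (an assumption of this sketch).
    return list(MODEL_FORMATS.get(format, []))


print(supported_model_names())        # ['model-a', 'model-b', 'model-c']
print(supported_model_names("onnx"))  # ['model-a']
```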

Fine-tune

Get started

In this example, we fine-tune the operator on the Semantic Textual Similarity (STS) task, which assigns a score to the similarity of two texts. We use the STSbenchmark dataset as training data.
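STSbenchmark gold scores range from 0 to 5. Assuming the training script follows the original SBERT STSbenchmark example (scores divided by 5, and a cosine-similarity loss, i.e. the mean squared error between the cosine similarity of the two sentence embeddings and the normalized score), the objective can be sketched in NumPy as below. The function names are our own, and this only computes a loss value; it does not train anything.

```python
import numpy as np


def normalize_sts_score(score: float, max_score: float = 5.0) -> float:
    """Map an STSbenchmark gold score from [0, 5] to [0, 1]."""
    return score / max_score


def cosine_similarity_loss(emb1: np.ndarray, emb2: np.ndarray, labels: np.ndarray) -> float:
    """Mean squared error between row-wise cosine similarities and labels.

    emb1, emb2: (batch, dim) embeddings for the two sentences of each pair.
    labels: (batch,) gold scores already scaled to [0, 1].
    """
    dots = np.sum(emb1 * emb2, axis=1)
    norms = np.linalg.norm(emb1, axis=1) * np.linalg.norm(emb2, axis=1)
    cos = dots / norms
    return float(np.mean((cos - labels) ** 2))


# Pair 1 is identical (gold 5.0), pair 2 is orthogonal (gold 0.0).
emb1 = np.array([[1.0, 0.0], [1.0, 0.0]])
emb2 = np.array([[1.0, 0.0], [0.0, 1.0]])
labels = np.array([normalize_sts_score(5.0), normalize_sts_score(0.0)])
print(cosine_similarity_loss(emb1, emb2, labels))  # 0.0
```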

We only need to construct an op instance and pass in a training configuration to train on the specified task.

```python
import os

import towhee
from sentence_transformers import util

op = towhee.ops.sentence_embedding.sbert(model_name='nli-distilroberta-base-v2').get_op()

# Download the STSbenchmark data once.
sts_dataset_path = 'datasets/stsbenchmark.tsv.gz'
if not os.path.exists(sts_dataset_path):
    util.http_get('https://sbert.net/datasets/stsbenchmark.tsv.gz', sts_dataset_path)

model_save_path = './output'
training_config = {
    'sts_dataset_path': sts_dataset_path,
    'train_batch_size': 16,
    'num_epochs': 4,
    'model_save_path': model_save_path
}
op.train(training_config)

# Load trained weights
# You just need to init a new operator with the trained folder under `model_save_path`.
model_path = os.path.join(model_save_path, os.listdir(model_save_path)[-1])
new_op = towhee.ops.sentence_embedding.sbert(model_name=model_path).get_op()
```
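`os.listdir()` returns entries in arbitrary order, so picking the last entry is not guaranteed to select the newest run. One alternative, sketched below under the assumption that each training run writes its own sub-folder under `model_save_path`, is to pick the most recently modified sub-folder. `latest_subdir` is a hypothetical helper, not part of Towhee.

```python
import os
import tempfile


def latest_subdir(root: str) -> str:
    """Return the path of the most recently modified sub-directory of root."""
    subdirs = [
        os.path.join(root, name)
        for name in os.listdir(root)
        if os.path.isdir(os.path.join(root, name))
    ]
    if not subdirs:
        raise FileNotFoundError(f"no trained model folders under {root!r}")
    return max(subdirs, key=os.path.getmtime)


# Demo with a throwaway directory standing in for model_save_path.
with tempfile.TemporaryDirectory() as root:
    for name, mtime in [("run-a", 1_000_000), ("run-b", 2_000_000)]:
        path = os.path.join(root, name)
        os.mkdir(path)
        os.utime(path, (mtime, mtime))  # pin modification times for the demo
    newest = os.path.basename(latest_subdir(root))
print(newest)  # run-b
```

With this helper, `model_path = latest_subdir(model_save_path)` would replace the `os.listdir(...)[-1]` lookup.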

Dive deep and customize your training

You can modify the training script to suit your needs, or refer to the original SBERT training guide and code examples for more information.
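If you write your own training script, you will need to read the STSbenchmark file yourself. The sketch below assumes the layout used by the original SBERT example: a gzip-compressed, tab-separated file whose header row includes `split`, `score`, `sentence1` and `sentence2` columns, read without quote handling. The function name `read_sts_split` is our own, and the sample rows in the demo are made up.

```python
import csv
import gzip
import os
import tempfile
from typing import List, Tuple


def read_sts_split(path: str, split: str) -> List[Tuple[str, str, float]]:
    """Return (sentence1, sentence2, score / 5.0) tuples for one split."""
    rows = []
    with gzip.open(path, "rt", encoding="utf8") as f:
        reader = csv.DictReader(f, delimiter="\t", quoting=csv.QUOTE_NONE)
        for row in reader:
            if row["split"] == split:
                rows.append((row["sentence1"], row["sentence2"], float(row["score"]) / 5.0))
    return rows


# Demo on a tiny synthetic file with the assumed column layout.
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "sample.tsv.gz")
    with gzip.open(path, "wt", encoding="utf8") as f:
        f.write("split\tscore\tsentence1\tsentence2\n")
        f.write("train\t5.0\tA man is playing.\tA man plays.\n")
        f.write("test\t0.0\tA cat.\tA car.\n")
    train_rows = read_sts_split(path, "train")
print(train_rows)  # [('A man is playing.', 'A man plays.', 1.0)]
```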

More Resources

- All-Mpnet-Base-V2: Enhancing Sentence Embedding with AI - Zilliz blog: Delve into one of the deep learning models that has played a significant role in the development of sentence embedding: MPNet.

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- Transforming Text: The Rise of Sentence Transformers in NLP - Zilliz blog: Everything you need to know about the Transformers model, exploring its architecture, implementation, and limitations

- Training Your Own Text Embedding Model - Zilliz blog: Explore how to train your text embedding model using the `sentence-transformers` library and generate our training data by leveraging a pre-trained LLM.

- What Are Vector Embeddings?: Learn the definition of vector embeddings, how to create vector embeddings, and more.

- Evaluating Your Embedding Model - Zilliz blog: Review some practical examples to evaluate different text embedding models.

- Neural Networks and Embeddings for Language Models - Zilliz blog: Exploring neural network language models, specifically recurrent neural networks, and taking a sneak peek at how embeddings are generated.

- Training Text Embeddings with Jina AI - Zilliz blog: In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information, along with guidance o
