Updated 3 years ago

sentence-embedding

Sentence Embedding with Transformers

author: Jael Gu

Description

A sentence embedding operator generates one embedding vector, as a NumPy ndarray, for each input text. The embedding represents the semantic information of the whole input text as a single vector. This operator is implemented with pre-trained models from Hugging Face Transformers.
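The description does not say how the token-level outputs of a Transformer are reduced to one sentence vector. Many sentence-transformers checkpoints use mean pooling: average the token embeddings while ignoring padding positions. Below is a minimal, dependency-free sketch of that technique. It assumes the token embeddings and attention mask are already available. `mean_pool` is a hypothetical helper, not part of the operator's API, and this operator may pool differently.

```python
from typing import List


def mean_pool(token_embeddings: List[List[float]], attention_mask: List[int]) -> List[float]:
    """Average the token vectors whose attention-mask entry is 1 (hypothetical helper).

    token_embeddings: one vector per token, all of the same dimension.
    attention_mask: 1 for real tokens, 0 for padding.
    """
    if not token_embeddings:
        raise ValueError("token_embeddings must not be empty")
    if len(token_embeddings) != len(attention_mask):
        raise ValueError("token_embeddings and attention_mask must have the same length")
    dim = len(token_embeddings[0])
    summed = [0.0] * dim
    count = 0
    for vec, keep in zip(token_embeddings, attention_mask):
        if keep:
            # Accumulate only real (non-padding) tokens.
            for i, value in enumerate(vec):
                summed[i] += value
            count += 1
    if count == 0:
        raise ValueError("attention_mask marks no real tokens")
    return [value / count for value in summed]


# Three tokens; the last one is padding and is ignored.
tokens = [[1.0, 2.0], [3.0, 4.0], [100.0, 100.0]]
mask = [1, 1, 0]
print(mean_pool(tokens, mask))  # [2.0, 3.0]
```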

Code Example

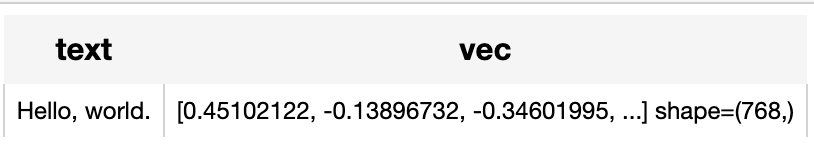

Use the pre-trained model 'sentence-transformers/paraphrase-albert-small-v2' to generate an embedding for the sentence "Hello, world.".

Write the same pipeline with explicit input/output name specifications:

- option 1 (towhee>=0.9.0):

```python
from towhee.dc2 import pipe, ops, DataCollection

p = (
    pipe.input('text')
        .map('text', 'vec',
             ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2'))
        .output('text', 'vec')
)

DataCollection(p('Hello, world.')).show()
```

- option 2:

```python
import towhee

(
    towhee.dc['text'](['Hello, world.'])
        .sentence_embedding.transformers['text', 'vec'](
            model_name='sentence-transformers/paraphrase-albert-small-v2')
        .show()
)
```

Factory Constructor

Create the operator via the following factory method:

sentence_embedding.transformers(model_name=None, checkpoint_path=None, tokenizer=None)

Parameters:

model_name: str

The model name as a string; defaults to None. If None, the operator is initialized without a specified model.

For supported model names, refer to supported_model_names below.

checkpoint_path: str

The path to a local checkpoint; defaults to None.

If None, the operator downloads and loads the pre-trained model named by model_name from Hugging Face Transformers.

tokenizer: object

The tokenizer used to tokenize input text; defaults to None.

If None, the operator uses the default tokenizer for model_name from Hugging Face Transformers.
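The defaulting rules for `checkpoint_path` and `tokenizer` can be summarized as a small decision function. This hypothetical sketch only reports where each component would come from. `resolve_sources`, its return keys, and the source strings are illustrative names, not the operator's internals. The behavior when `model_name` is None is an assumption.

```python
from typing import Optional


def resolve_sources(model_name: Optional[str] = None,
                    checkpoint_path: Optional[str] = None,
                    tokenizer: Optional[object] = None) -> dict:
    """Report where weights and tokenizer would come from (hypothetical helper)."""
    if model_name is None:
        # Assumption: without a model name, no weights are loaded yet.
        return {"weights": None, "tokenizer": tokenizer}
    if checkpoint_path is None:
        # Download and load the pre-trained model by model_name.
        weights = f"huggingface:{model_name}"
    else:
        # Load weights from the local checkpoint instead.
        weights = f"local:{checkpoint_path}"
    # Use the caller's tokenizer, else the default tokenizer for model_name.
    if tokenizer is None:
        tokenizer = f"default tokenizer for {model_name}"
    return {"weights": weights, "tokenizer": tokenizer}


print(resolve_sources("my-model", checkpoint_path="./ckpt"))
# {'weights': 'local:./ckpt', 'tokenizer': 'default tokenizer for my-model'}
```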

Interface

The operator takes a text string (or a list of texts) as input. It loads the tokenizer and pre-trained model by model name, and then returns the text embedding as a numpy.ndarray.

__call__(data)

Parameters:

data: Union[str, list]

The text as a string, or a list of texts.

Returns:

numpy.ndarray or list

The text embedding (or token embeddings) extracted by model.

If data is a string, the operator returns one embedding as a numpy.ndarray with shape (dim,).

If data is a list, the operator returns a list of embeddings with the same length as the input list.
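The return contract (one vector for a string, a list of equal length for a list) can be expressed as a thin wrapper. In this hypothetical sketch, `encode_one` stands in for the model call and `fake_encode` is a toy encoder. Neither is part of Towhee, and plain Python lists stand in for `numpy.ndarray`. The real operator may batch a list internally; the sketch only illustrates the input and output shapes.

```python
from typing import Callable, List, Union

Vector = List[float]


def embed(data: Union[str, List[str]],
          encode_one: Callable[[str], Vector]) -> Union[Vector, List[Vector]]:
    """Return one vector for a string, or one vector per item for a list."""
    if isinstance(data, str):
        return encode_one(data)
    if isinstance(data, list):
        return [encode_one(text) for text in data]
    raise TypeError(f"expected str or list, got {type(data).__name__}")


def fake_encode(text: str) -> Vector:
    # Toy 2-dim "embedding": character count and word count.
    return [float(len(text)), float(len(text.split()))]


print(embed("Hello, world.", fake_encode))             # [13.0, 2.0]
print(len(embed(["a", "b c", "d e f"], fake_encode)))  # 3
```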

save_model(format='pytorch', path='default')

Save the model locally in the specified format.

Parameters:

format: str

The format to export the model in, such as 'pytorch', 'torchscript', or 'onnx'; defaults to 'pytorch'.

path: str

The path where the exported model is saved.

By default, the model is saved to the saved directory under the operator cache.

```python
from towhee import ops

op = ops.sentence_embedding.transformers(model_name='sentence-transformers/paraphrase-albert-small-v2').get_op()
op.save_model('onnx', 'test.onnx')
# PosixPath('/Home/.towhee/operators/sentence-embedding/transformers/main/test.onnx')
```

supported_model_names(format=None)

Get a list of all supported model names, or only the model names that support the specified model format.

Parameters:

format: str

The model format such as 'pytorch', 'torchscript', 'onnx'.

```python
from towhee import ops

op = ops.sentence_embedding.transformers().get_op()
full_list = op.supported_model_names()
onnx_list = op.supported_model_names(format='onnx')
```
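The optional `format` argument behaves like a filter over a model-to-formats table. The sketch below is hypothetical. `MODEL_FORMATS` is a made-up table (only the first model name comes from this README) and does not reflect which formats the operator actually supports; the function merely mirrors the documented signature.

```python
from typing import Dict, List, Optional, Set

# Made-up table for illustration; not the operator's real support matrix.
MODEL_FORMATS: Dict[str, Set[str]] = {
    "sentence-transformers/paraphrase-albert-small-v2": {"pytorch", "torchscript", "onnx"},
    "example/model-without-onnx": {"pytorch", "torchscript"},
}


def supported_model_names(format: Optional[str] = None) -> List[str]:
    """Return all model names, or only those that support the given format."""
    if format is None:
        return sorted(MODEL_FORMATS)
    return sorted(name for name, formats in MODEL_FORMATS.items() if format in formats)


full_list = supported_model_names()
onnx_list = supported_model_names(format="onnx")
print(len(full_list), onnx_list)
```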
