Text Embedding with Longformer

author: Kyle He

Description

This operator uses Longformer to convert long text to embeddings.

The Longformer model was presented in "Longformer: The Long-Document Transformer" by Iz Beltagy, Matthew E. Peters, and Arman Cohan [1][2].

Transformer-based models are unable to process long sequences because their self-attention operation scales quadratically with the sequence length. To address this limitation, Longformer introduces an attention mechanism that scales linearly with sequence length, making it easy to process documents of thousands of tokens or longer [2].
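To make the quadratic-versus-linear contrast concrete, the sketch below counts how many (query, key) pairs are attended under full self-attention and under a sliding-window pattern with one global token. It is a simplified NumPy illustration, not how Longformer actually computes attention (the real model never materializes the full n × n matrix); the helper names and the window size of 32 are hypothetical choices for the demo.

```python
import numpy as np

def full_attention_mask(seq_len: int) -> np.ndarray:
    """Full self-attention: every token attends to every token (seq_len**2 pairs)."""
    return np.ones((seq_len, seq_len), dtype=bool)

def sliding_window_mask(seq_len: int, window: int, global_positions=()) -> np.ndarray:
    """Each token attends to tokens at most window // 2 positions away on either side.
    Tokens listed in global_positions attend to, and are attended by, every token."""
    idx = np.arange(seq_len)
    mask = np.abs(idx[:, None] - idx[None, :]) <= window // 2
    for g in global_positions:
        mask[g, :] = True  # the global token attends to everything
        mask[:, g] = True  # everything attends to the global token
    return mask

# Count attended (query, key) pairs as the sequence length doubles.
for n in (128, 256, 512, 1024):
    full = int(full_attention_mask(n).sum())
    windowed = int(sliding_window_mask(n, window=32, global_positions=[0]).sum())
    print(f"{n:5d} tokens: full={full:8d}  windowed={windowed:6d}")
```

Doubling the sequence length quadruples the full-attention count but only roughly doubles the windowed count, which is the linear scaling the paper relies on.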

References

[2] https://arxiv.org/pdf/2004.05150.pdf

Code Example

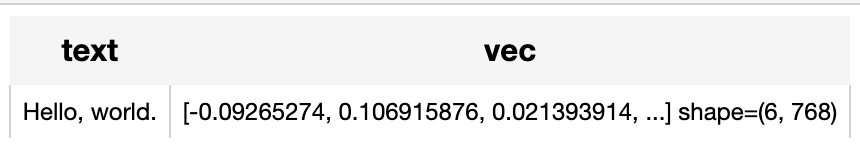

Use the pre-trained model "allenai/longformer-base-4096" to generate a text embedding for the sentence "Hello, world.".

Write the pipeline:

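```python
from towhee import pipe, ops, DataCollection

# Build a pipeline that maps the input 'text' to an embedding 'vec'
p = (
    pipe.input('text')
        .map('text', 'vec', ops.text_embedding.longformer(model_name="allenai/longformer-base-4096"))
        .output('text', 'vec')
)

# Run the pipeline on one sentence and display the text with its embedding
DataCollection(p('Hello, world.')).show()
```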

Factory Constructor

Create the operator via the following factory method:

```python
text_embedding.longformer(model_name="allenai/longformer-base-4096")
```

Parameters:

model_name: str

The name of the pre-trained model, as a string. The default value is "allenai/longformer-base-4096".

Supported model names:

- allenai/longformer-base-4096

- allenai/longformer-large-4096

- allenai/longformer-large-4096-finetuned-triviaqa

- allenai/longformer-base-4096-extra.pos.embd.only

- allenai/longformer-large-4096-extra.pos.embd.only

global_attention_mask: torch.Tensor

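The global attention mask passed to the Longformer model, marking which tokens use global attention (1) and which use local sliding-window attention (0). Defaults to None.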

pooler_output: bool

Whether to return pooled features; defaults to False. By default, the output for each input text has shape (num_tokens, dim). If True, the output for each input text is a single vector of shape (dim,).
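For the global_attention_mask parameter, a minimal sketch of building a mask value, assuming the operator forwards it unchanged to the Hugging Face LongformerModel, whose convention is 1 for a token with global attention and 0 for a token with local attention. The helper build_global_attention_mask is hypothetical and builds the array with NumPy; since the parameter is typed torch.Tensor, convert it with torch.from_numpy(mask) before passing it. It is also an assumption that the mask length must match the tokenized input (including special tokens), with a leading batch dimension of 1.

```python
import numpy as np

def build_global_attention_mask(num_tokens: int, global_positions=(0,)) -> np.ndarray:
    """Return a (1, num_tokens) mask: 1 = global attention, 0 = local attention.

    Hypothetical helper; the 0/1 convention follows Hugging Face's LongformerModel.
    """
    if num_tokens <= 0:
        raise ValueError("num_tokens must be positive")
    mask = np.zeros((1, num_tokens), dtype=np.int64)
    for pos in global_positions:
        if not 0 <= pos < num_tokens:
            raise IndexError(f"global position {pos} is outside 0..{num_tokens - 1}")
        mask[0, pos] = 1  # this token attends to, and is attended by, all tokens
    return mask

# Give only the first token global attention in an 8-token input.
mask = build_global_attention_mask(8, global_positions=[0])
print(mask)  # [[1 0 0 0 0 0 0 0]]
```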

Interface

The operator takes a text string as input. It loads the tokenizer and pre-trained model specified by the model name and returns the text embedding as a NumPy ndarray.

Parameters:

text: str

The input text.

Returns:

numpy.ndarray

The text embedding extracted by the model: shape (num_tokens, dim) by default, or (dim,) when pooler_output is True.
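A common next step is to reduce the default (num_tokens, dim) output to one vector per text and compare texts. The sketch below uses mean pooling over tokens, which is a swapped-in post-processing technique and not what pooler_output=True does (that option returns the model's own pooled feature). It then scores two texts with cosine similarity. Random arrays stand in for real operator outputs, and the helper names are hypothetical; 768 is the hidden size of the base Longformer models.

```python
import numpy as np

def mean_pool(token_embeddings: np.ndarray) -> np.ndarray:
    """Average a (num_tokens, dim) array over its tokens into one (dim,) vector."""
    if token_embeddings.ndim != 2:
        raise ValueError("expected an array of shape (num_tokens, dim)")
    return token_embeddings.mean(axis=0)

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two 1-D vectors of equal length."""
    if a.ndim != 1 or a.shape != b.shape:
        raise ValueError("expected two 1-D vectors of the same length")
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    if denom == 0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return float(np.dot(a, b) / denom)

# Random stand-ins for two default (num_tokens, dim) outputs of different lengths.
rng = np.random.default_rng(0)
emb_a = rng.normal(size=(6, 768)).astype(np.float32)
emb_b = rng.normal(size=(9, 768)).astype(np.float32)

vec_a, vec_b = mean_pool(emb_a), mean_pool(emb_b)
print(vec_a.shape, vec_b.shape)  # (768,) (768,)
print(cosine_similarity(vec_a, vec_b))
```

Pooling first makes texts of different token lengths directly comparable, since both end up as (dim,) vectors.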

More Resources

- Sentence Transformers for Long-Form Text - Zilliz blog: Deep diving into modern transformer-based embeddings for long-form text.

- OpenAI text-embedding-3-large | Zilliz: Building GenAI applications with text-embedding-3-large model and Zilliz Cloud / Milvus

- The guide to jina-embeddings-v2-base-en | Jina AI: jina-embeddings-v2-base-en: specialized embedding model for English text and long documents; supports sequences of up to 8192 tokens.

- The guide to text-embedding-3-small | OpenAI: text-embedding-3-small: OpenAI's small text embedding model optimized for accuracy and efficiency with a lower cost.

- The guide to jina-embeddings-v2-small-en | Jina AI: jina-embeddings-v2-small-en: specialized text embedding model for long English documents; up to 8192 tokens.
