Text Embedding with Realm

author: Jael Gu

Description

A text embedding operator takes a sentence, paragraph, or document as a string and outputs an embedding vector as an ndarray that captures the input's core semantic elements. This operator uses REALM, a retrieval-augmented language model that first retrieves documents from a textual knowledge corpus and then uses the retrieved documents to answer questions. [1] The original model was proposed in "REALM: Retrieval-Augmented Language Model Pre-Training" by Kelvin Guu, Kenton Lee, Zora Tung, Panupong Pasupat, and Ming-Wei Chang. [2]
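The retrieval step described above can be illustrated with a toy example. In the REALM paper, the relevance of a document to a query is scored by the inner product of their embeddings. The sketch below ranks a few documents that way; the 3-dimensional vectors and the helper names `inner_product` and `top_k` are hypothetical and exist only for illustration (real embeddings come from the model and have many more dimensions).

```python
from typing import List, Sequence, Tuple


def inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the inner (dot) product of two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    return sum(x * y for x, y in zip(a, b))


def top_k(
    query: Sequence[float],
    docs: Sequence[Sequence[float]],
    k: int = 2,
) -> List[Tuple[int, float]]:
    """Return (document index, score) pairs for the k highest-scoring documents."""
    scored = [(i, inner_product(query, d)) for i, d in enumerate(docs)]
    # Highest inner product first.
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical toy vectors standing in for real embeddings.
query_vec = [1.0, 0.0, 1.0]
doc_vecs = [
    [0.5, 0.5, 0.0],  # score 0.5
    [1.0, 0.0, 2.0],  # score 3.0
    [0.0, 1.0, 1.0],  # score 1.0
]

ranked = top_k(query_vec, doc_vecs, k=2)
print(ranked)  # [(1, 3.0), (2, 1.0)]
```

A production retriever would replace the linear scan with an approximate maximum inner product search index; the brute-force loop here only shows the scoring rule.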

References

[1] https://huggingface.co/docs/transformers/model_doc/realm

[2] https://arxiv.org/abs/2002.08909

Code Example

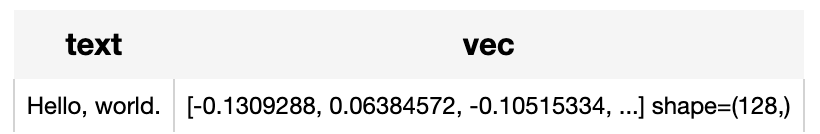

Use the pre-trained model "google/realm-cc-news-pretrained-embedder" to generate a text embedding for the sentence "Hello, world.".

Write the pipeline:

from towhee import pipe, ops, DataCollection

p = (
    pipe.input('text')
        .map('text', 'vec', ops.text_embedding.realm(model_name="google/realm-cc-news-pretrained-embedder"))
        .output('text', 'vec')
)

DataCollection(p('Hello, world.')).show()

Factory Constructor

Create the operator via the following factory method:

text_embedding.realm(model_name="google/realm-cc-news-pretrained-embedder")

Parameters:

model_name: str

The name of the pre-trained model, as a string. The default value is "google/realm-cc-news-pretrained-embedder".

Supported model name:

- google/realm-cc-news-pretrained-embedder

Interface

The operator takes a piece of text as a string. It loads the tokenizer and the pre-trained model by model name, then returns the text embedding as an ndarray.

Parameters:

text: str

The input text, as a string.

Returns:

numpy.ndarray

The text embedding extracted by the model.
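Once two texts have been embedded, a common next step is to compare the returned vectors. The following is a minimal sketch of cosine similarity, assuming each embedding is a 1-D sequence of floats; plain Python lists stand in for the returned ndarray, and the sample vectors and the helper name `cosine_similarity` are hypothetical.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Return the cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 2-dimensional vectors standing in for real embeddings.
vec_a = [3.0, 4.0]
vec_b = [6.0, 8.0]   # same direction as vec_a
vec_c = [-4.0, 3.0]  # orthogonal to vec_a

sim_ab = cosine_similarity(vec_a, vec_b)
sim_ac = cosine_similarity(vec_a, vec_c)
print(sim_ab, sim_ac)
```

Values close to 1 indicate vectors pointing in the same direction, and values close to 0 indicate unrelated directions. The same function accepts 1-D NumPy arrays, since it only iterates over the elements.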

More Resources

- The guide to text-embedding-ada-002 model | OpenAI: text-embedding-ada-002: OpenAI's legacy text embedding model; average price/performance compared to text-embedding-3-large and text-embedding-3-small.

- Massive Text Embedding Benchmark (MTEB): A standardized way to evaluate text embedding models across a range of tasks and languages, leading to better text embedding models for your app

- The guide to mistral-embed | Mistral AI: mistral-embed: a specialized embedding model for text data with a context window of 8,000 tokens. Optimized for similarity retrieval and RAG applications.

- Tutorial: Diving into Text Embedding Models | Zilliz Webinar: Register for a free webinar diving into text embedding models in a presentation and tutorial

- The guide to text-embedding-3-small | OpenAI: text-embedding-3-small: OpenAI's small text embedding model optimized for accuracy and efficiency with a lower cost.

- The guide to voyage-large-2 | Voyage AI: voyage-large-2: general-purpose text embedding model; optimized for retrieval quality; ideal for tasks like summarization, clustering, and classification.

- Training Text Embeddings with Jina AI - Zilliz blog: In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information, along with guidance o
