
Updated 3 years ago

video-text-embedding

Video-Text Retrieval Embedding with CLIP4Clip

author: Chen Zhang

Description

This operator extracts features from video or text with CLIP4Clip. CLIP4Clip generates text and video embeddings by jointly training a video encoder and a text encoder to maximize the cosine similarity between matching video-text pairs.
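Because the two encoders are trained so that matching pairs have high cosine similarity, text-to-video retrieval reduces to ranking video embeddings by their cosine similarity to a text embedding. The sketch below illustrates that ranking step with NumPy; the helper names `cosine_similarity` and `rank_videos` are hypothetical, and the toy 4-dimensional vectors merely stand in for real embeddings produced by this operator.

```python
import numpy as np


def cosine_similarity(query: np.ndarray, candidates: np.ndarray) -> np.ndarray:
    """Cosine similarity between a query vector of shape (d,) and
    each row of a candidate matrix of shape (n, d)."""
    q = query / np.linalg.norm(query)
    c = candidates / np.linalg.norm(candidates, axis=1, keepdims=True)
    return c @ q


def rank_videos(text_vec: np.ndarray, video_vecs: np.ndarray):
    """Hypothetical helper: return candidate indices sorted from most to
    least similar, together with the raw similarity scores."""
    scores = cosine_similarity(text_vec, video_vecs)
    return np.argsort(-scores), scores


# Toy vectors standing in for real text and video embeddings.
text_vec = np.array([1.0, 0.0, 1.0, 0.0])
video_vecs = np.array([
    [0.0, 1.0, 0.0, 1.0],  # orthogonal to the query: similarity 0
    [2.0, 0.0, 2.0, 0.0],  # same direction, different length: similarity 1
    [1.0, 1.0, 0.0, 0.0],  # partial overlap: similarity 0.5
])
order, scores = rank_videos(text_vec, video_vecs)
print(order)  # [1 2 0]
```

Normalizing both sides first means vector length is ignored, so the second candidate ranks first even though it is twice as long as the query.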

Code Example

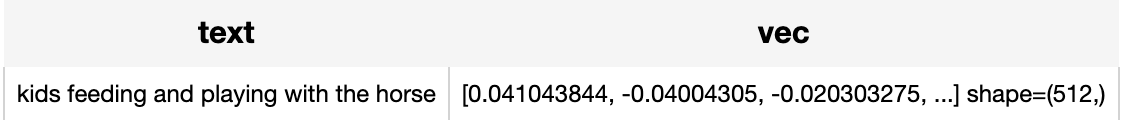

Read the text 'kids feeding and playing with the horse' to generate a text embedding.

```python
from towhee.dc2 import pipe, ops, DataCollection

p = (
    pipe.input('text')
        # Embed the input string with the CLIP4Clip text encoder.
        # Set `device` to match your hardware (e.g. 'cuda:0').
        .map('text', 'vec', ops.video_text_embedding.clip4clip(model_name='clip_vit_b32', modality='text', device='cuda:1'))
        .output('text', 'vec')
)

DataCollection(p('kids feeding and playing with the horse')).show()
```

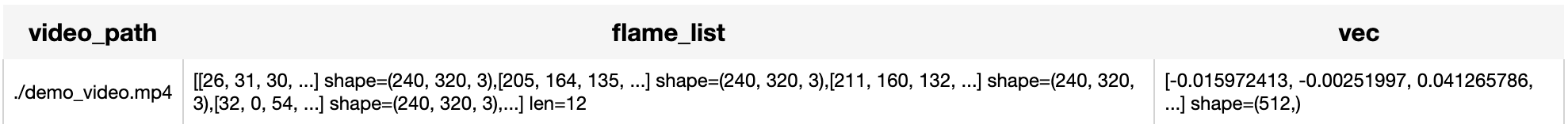

Load a video from the path './demo_video.mp4' to generate a video embedding.

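```python
from towhee.dc2 import pipe, ops, DataCollection

p = (
    pipe.input('video_path')
        # Decode the video and uniformly subsample 12 frames from it.
        .map('video_path', 'frame_gen', ops.video_decode.ffmpeg(sample_type='uniform_temporal_subsample', args={'num_samples': 12}))
        # The decoder output is a generator of frames; collect it into a list.
        .map('frame_gen', 'frame_list', lambda x: [y for y in x])
        # Embed the frame list with the CLIP4Clip video encoder.
        # Set `device` to match your hardware (e.g. 'cuda:0').
        .map('frame_list', 'vec', ops.video_text_embedding.clip4clip(model_name='clip_vit_b32', modality='video', device='cuda:2'))
        .output('video_path', 'frame_list', 'vec')
)

DataCollection(p('./demo_video.mp4')).show()
```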
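The video pipeline keeps only 12 frames via `uniform_temporal_subsample`. As a rough illustration of the idea, uniform temporal subsampling picks evenly spaced frame indices from the first frame to the last. The sketch below assumes that behavior; it is not the implementation of `ops.video_decode.ffmpeg`, and the function name and the 300-frame clip length are hypothetical.

```python
from typing import List

import numpy as np


def uniform_temporal_subsample_indices(total_frames: int, num_samples: int) -> List[int]:
    """Hypothetical helper: pick num_samples frame indices spread evenly
    from the first to the last frame of a clip."""
    if total_frames <= 0 or num_samples <= 0:
        return []
    # Evenly spaced positions over [0, total_frames - 1], truncated to integers.
    positions = np.linspace(0, total_frames - 1, num_samples)
    return positions.astype(int).tolist()


# A 10-second clip at 30 fps has 300 frames; keep 12 of them.
print(uniform_temporal_subsample_indices(300, 12))
# [0, 27, 54, 81, 108, 135, 163, 190, 217, 244, 271, 299]
```

Sampling a fixed number of frames keeps the cost of embedding a video independent of its length, at the price of skipping fast events that fall between samples.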

Factory Constructor

Create the operator via the following factory method:

`clip4clip(model_name, modality, weight_path, device)`

Parameters:

model_name: str

The name of the CLIP model. Supported model names:

- clip_vit_b32

modality: str

The modality to embed: 'video' or 'text'.

weight_path: str

Path to the pretrained model weights.

device: str

The device on which to run the model, for example 'cuda:1' as in the examples above.

Interface

The video-text embedding operator takes either a list of Towhee images or a string as input and generates an embedding as a NumPy ndarray.

Parameters:

data: List[towhee.types.Image] or str

The input to embed, depending on the specified modality: a list of images uniformly subsampled from a video, or a text string.

Returns: numpy.ndarray

The embedding extracted by the model.
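In video mode the operator receives a list of frames but returns a single embedding, so the per-frame features must be aggregated. The CLIP4Clip paper describes a parameter-free mean-pooling variant (meanP) that averages frame features. The sketch below shows that style of aggregation with NumPy; whether this operator pools frames exactly this way is an assumption, and `mean_pool_frames` is a hypothetical name.

```python
import numpy as np


def mean_pool_frames(frame_embeddings: np.ndarray) -> np.ndarray:
    """Hypothetical helper: collapse per-frame embeddings of shape
    (num_frames, d) into one L2-normalized video embedding of shape (d,)."""
    # Normalize each frame first so every frame contributes equally.
    frames = frame_embeddings / np.linalg.norm(frame_embeddings, axis=1, keepdims=True)
    # Average over the time axis, then renormalize for cosine similarity.
    video = frames.mean(axis=0)
    return video / np.linalg.norm(video)


# 12 frames (matching num_samples in the video example) of toy 8-dim features.
rng = np.random.default_rng(0)
frame_embs = rng.normal(size=(12, 8))
video_vec = mean_pool_frames(frame_embs)
print(video_vec.shape)  # (8,)
```

Mean pooling ignores frame order; the CLIP4Clip paper also discusses sequential and tight-type aggregation that model temporal structure at the cost of extra parameters.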
