
Image Embedding with SWAG

author: Jael Gu

Description

An image embedding operator generates a vector from an image. This operator extracts image features using pretrained SWAG models from Torch Hub. SWAG implements the models from the paper Revisiting Weakly Supervised Pre-Training of Visual Perception Models. To achieve higher accuracy in image classification, SWAG pretrains with weak supervision from hashtags instead of full supervision from image class labels.
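To make the hashtag idea concrete, here is a minimal NumPy sketch of one way weak hashtag labels can stand in for class labels: each image's hashtags become a soft target distribution over a hashtag vocabulary, which is then scored with softmax cross-entropy. The uniform 1/k weighting, the toy vocabulary, and the helper names hashtag_targets and softmax_cross_entropy are assumptions for illustration, not SWAG's confirmed training recipe; see the paper for the actual setup.

```python
import numpy as np


def hashtag_targets(image_hashtags, vocab):
    """Build a per-image target distribution over a hashtag vocabulary.

    Assumption: each of an image's k distinct in-vocabulary hashtags gets
    weight 1/k. This is illustrative, not SWAG's confirmed recipe.
    """
    index = {tag: i for i, tag in enumerate(vocab)}
    targets = np.zeros((len(image_hashtags), len(vocab)), dtype=np.float64)
    for row, tags in enumerate(image_hashtags):
        hits = [index[t] for t in set(tags) if t in index]  # drop unknown tags
        if hits:
            targets[row, hits] = 1.0 / len(hits)
    return targets


def softmax_cross_entropy(logits, targets):
    """Mean cross-entropy between softmax(logits) and soft targets."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-(targets * log_probs).sum(axis=1).mean())


vocab = ["dog", "beach", "sunset", "cat"]
batch = [["dog", "beach"], ["sunset"], ["cat", "cat", "unknown"]]
targets = hashtag_targets(batch, vocab)
logits = np.zeros((3, 4))  # an untrained model: uniform predictions
loss = softmax_cross_entropy(logits, targets)
```

With all-zero logits the model predicts uniformly over the 4 tags, so the loss equals log 4 (about 1.386) no matter how the target mass is spread.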

Code Example

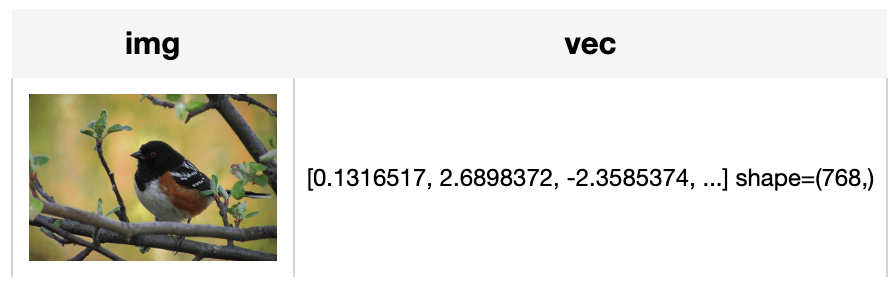

Load an image from the path './towhee.jpeg' and use the pretrained SWAG model 'vit_b16_in1k' to generate an image embedding.

Write a pipeline with explicit input/output name specifications:

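```python
from towhee import pipe, ops, DataCollection

p = (
    pipe.input('path')  # the pipeline takes one named input: the image path
        .map('path', 'img', ops.image_decode())  # decode the file at 'path' into 'img'
        .map('img', 'vec', ops.image_embedding.swag(model_name='vit_b16_in1k'))  # embed 'img' into 'vec'
        .output('img', 'vec')  # return both the decoded image and its embedding
)

DataCollection(p('towhee.jpeg')).show()
```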
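The explicit names in the pipeline above are what connect the steps: each map(src, dst, fn) reads the value named src and stores its result under dst, and output(...) selects which named values are returned. The toy class below models only that dataflow idea. ToyPipe, fake_decode, and fake_embed are hypothetical stand-ins, not Towhee's implementation or API.

```python
from typing import Any, Callable, Dict, List, Tuple


class ToyPipe:
    """A hypothetical, minimal stand-in for a named-column pipeline.

    This is NOT Towhee's implementation; it only models the idea that each
    map(src, dst, fn) step reads one named value and writes another.
    """

    def __init__(self, *input_names: str):
        self.input_names = input_names
        self.steps: List[Tuple[str, str, Callable[[Any], Any]]] = []
        self.output_names: Tuple[str, ...] = ()

    def map(self, src: str, dst: str, fn: Callable[[Any], Any]) -> "ToyPipe":
        self.steps.append((src, dst, fn))
        return self

    def output(self, *names: str) -> "ToyPipe":
        self.output_names = names
        return self

    def __call__(self, *values: Any) -> Tuple[Any, ...]:
        row: Dict[str, Any] = dict(zip(self.input_names, values))
        for src, dst, fn in self.steps:
            row[dst] = fn(row[src])  # read the named input, store the named output
        return tuple(row[name] for name in self.output_names)


def fake_decode(path: str) -> str:
    """Hypothetical placeholder for ops.image_decode()."""
    return f"pixels of {path}"


def fake_embed(img: str) -> List[float]:
    """Hypothetical placeholder for the SWAG embedding operator."""
    return [float(len(img)), 0.0]


p = ToyPipe("path").map("path", "img", fake_decode).map("img", "vec", fake_embed).output("img", "vec")
img, vec = p("towhee.jpeg")
```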

Factory Constructor

Create the operator via the following factory method:

```python
image_embedding.swag(model_name='vit_b16_in1k', skip_preprocess=False)
```

Parameters:

model_name: str

The name of the model, as a string. The default value is "vit_b16_in1k". Supported model names:

- vit_b16_in1k

- vit_l16_in1k

- vit_h14_in1k

- regnety_16gf_in1k

- regnety_32gf_in1k

- regnety_128gf_in1k
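If you wrap the operator in your own code, a small guard can reject unsupported names early. The sketch below is hypothetical (check_model_name is not part of the operator), and the per-model input resolutions are an assumption based on our reading of the SWAG repository rather than something this README states, so verify them before relying on them.

```python
SUPPORTED_MODELS = {
    # model name -> assumed square input resolution (from our reading of the
    # SWAG repository; not stated in this README, so verify before use)
    "vit_b16_in1k": 384,
    "vit_l16_in1k": 512,
    "vit_h14_in1k": 518,
    "regnety_16gf_in1k": 384,
    "regnety_32gf_in1k": 384,
    "regnety_128gf_in1k": 384,
}


def check_model_name(model_name: str = "vit_b16_in1k") -> int:
    """Hypothetical helper: validate a model name and return its input size."""
    if model_name not in SUPPORTED_MODELS:
        supported = ", ".join(sorted(SUPPORTED_MODELS))
        raise ValueError(f"Unsupported model_name {model_name!r}; choose one of: {supported}")
    return SUPPORTED_MODELS[model_name]
```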

skip_preprocess: bool

Whether to skip image preprocessing. The default value is False. If set to True, the operator skips its preprocessing steps (transforms), so the input image data must be prepared in advance to fit the model's expected input.
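When skip_preprocess=True, that preparation happens on your side. The exact format the operator then expects is not documented here, so the sketch below assumes a common ImageNet-style recipe: resize the shorter side, center-crop to a square, scale to [0, 1], normalize with the ImageNet mean and standard deviation, and reorder to a 1x3xHxW batch. The 384-pixel default, the RGB channel order, and the nearest-neighbour resize (used only to keep the example dependency-free; bicubic resizing is more typical) are all assumptions.

```python
import numpy as np

# ImageNet channel statistics; assumed, not confirmed to match the operator's transforms.
MEAN = np.array([0.485, 0.456, 0.406], dtype=np.float32)
STD = np.array([0.229, 0.224, 0.225], dtype=np.float32)


def preprocess(rgb: np.ndarray, size: int = 384) -> np.ndarray:
    """Turn an HxWx3 uint8 RGB image into a 1x3xSIZExSIZE float32 batch.

    Resizes the shorter side to `size` (nearest neighbour, for brevity),
    center-crops to size x size, scales to [0, 1], and normalizes per channel.
    """
    h, w = rgb.shape[:2]
    scale = size / min(h, w)
    new_h, new_w = max(size, round(h * scale)), max(size, round(w * scale))
    # Map each output pixel back to its nearest source row/column.
    rows = np.minimum((np.arange(new_h) / scale).astype(int), h - 1)
    cols = np.minimum((np.arange(new_w) / scale).astype(int), w - 1)
    resized = rgb[rows[:, None], cols[None, :]]
    top, left = (new_h - size) // 2, (new_w - size) // 2
    crop = resized[top:top + size, left:left + size].astype(np.float32) / 255.0
    normalized = (crop - MEAN) / STD  # broadcasts over the channel axis
    return normalized.transpose(2, 0, 1)[None]  # HWC -> NCHW with a batch axis
```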

Interface

An image embedding operator takes a Towhee image as input. It uses the pretrained model specified by model_name to generate an image embedding as a numpy.ndarray.

Parameters:

img: towhee.types.Image (a sub-class of numpy.ndarray)

The decoded image data as a numpy.ndarray.

Returns: numpy.ndarray

The image embedding extracted by the model.
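Because the operator returns a plain numpy.ndarray, comparing embeddings is ordinary NumPy work. A common choice for image search is cosine similarity on L2-normalized vectors. The sketch below uses random vectors as stand-ins for real embeddings, and the helper names l2_normalize and cosine_similarity are illustrative only.

```python
import numpy as np


def l2_normalize(vec: np.ndarray, eps: float = 1e-12) -> np.ndarray:
    """Flatten and scale an embedding to unit length (dot product = cosine)."""
    vec = np.asarray(vec, dtype=np.float32).ravel()
    return vec / max(float(np.linalg.norm(vec)), eps)


def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity between two embeddings, in [-1, 1]."""
    return float(np.dot(l2_normalize(a), l2_normalize(b)))


# Random stand-ins for two embeddings returned by the operator; the real
# dimension depends on the chosen model, so 8 here is only a placeholder.
rng = np.random.default_rng(0)
emb_a = rng.normal(size=8)
emb_b = rng.normal(size=8)
score = cosine_similarity(emb_a, emb_b)
```

Normalizing once and storing unit vectors lets a vector database rank by inner product, which is then equivalent to ranking by cosine similarity.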

More Resources

- Exploring Multimodal Embeddings with FiftyOne and Milvus - Zilliz blog: This post explored how multimodal embeddings work with Voxel51 and Milvus.

- How to Get the Right Vector Embeddings - Zilliz blog: A comprehensive introduction to vector embeddings and how to generate them with popular open-source models.

- Training Text Embeddings with Jina AI - Zilliz blog: In a recent talk by Bo Wang, he discussed the creation of Jina text embeddings for modern vector search and RAG systems. He also shared methodologies for training embedding models that effectively encode extensive information, along with guidance o

- Supercharged Semantic Similarity Search in Production - Zilliz blog: Building a Blazing Fast, Highly Scalable Text-to-Image Search with CLIP embeddings and Milvus, the most advanced open-source vector database.

- Using Voyage AI's embedding models in Zilliz Cloud Pipelines - Zilliz blog: Assess the effectiveness of a RAG system implemented with various embedding models for code-related tasks.

- Image Embeddings for Enhanced Image Search - Zilliz blog: Image Embeddings are the core of modern computer vision algorithms. Understand their implementation and use cases and explore different image embedding models.

- Enhancing Information Retrieval with Sparse Embeddings | Zilliz Learn - Zilliz blog: Explore the inner workings, advantages, and practical applications of learned sparse embeddings with the Milvus vector database

- An Introduction to Vector Embeddings: What They Are and How to Use Them - Zilliz blog: In this blog post, we will understand the concept of vector embeddings and explore its applications, best practices, and tools for working with embeddings.
